
What are the largest road blocks to wider adoption of NSX and SDN generally?

At my company there's an objection to the total amount of throughput that can be processed from the NSX Edge to/from the Internet. What does the roadmap for that piece look like going forward in terms of maximum throughput? And what other concerns do potential adopters raise about moving to NSX from traditional switches and routers?


We see an increase in East/West traffic, while North/South traffic is decreasing. When using the DLR, East/West traffic can be greatly optimized. This, together with the Distributed Firewall, which works at the vNIC level, provides pretty good optimization for traffic flows. As for North/South traffic, I don't think throughput should be a very big issue. Using ECMP, you can send North/South traffic through up to 8 Edge Services Gateways. And should that not be sufficient, you simply deploy more ESGs (provided that you also deploy more DLRs, of course). Why is there an objection at your company regarding throughput from the NSX Edge to/from the Internet (also, I think you mean to the LAN)?

The only objection I can think of is cost. NSX doesn't come cheap (though I believe they're working on that), so that does slow down adoption a bit. However, when customers see the many benefits that NSX has to offer, pricing tends to become less of an issue.


For North-South (to Internet) throughput, it depends on your environment.

vRealize Network Insight would be helpful for capturing this, so you can understand what the requirements will be.

As mentioned in the other reply above, you can always use ECMP with up to 8 NSX Edges if that is an option.

Other concerns will also depend on the use case.

One of the roadblocks for my customer is that they have an application running on physical hardware servers that need to sit on the same L2 network as the VMs, stretched across data centres.

MTU over the WAN can be a roadblock if there's a constraint there.

Also, as far as I know, stretched VXLAN (Universal Logical Switch) doesn't support L2 bridging at the moment.

So my customer uses other L2 stretching technologies (EoMPLS, Cisco OTV or something else) that run on the default MTU of 1500 and work with physical hardware servers.

The customer also does not deploy VXLAN and only uses the NSX DFW for that particular application.
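To illustrate why a WAN held at the default MTU of 1500 gets in the way of stretched VXLAN, here is a rough sketch of the encapsulation arithmetic. It assumes an IPv4 outer header and no 802.1Q tag on the inner frame; the function names are made up for this example and are not part of any NSX tooling.

```python
# VXLAN encapsulation overhead, counted against the underlay IP MTU.
# Assumptions: IPv4 outer header, untagged inner Ethernet frame.
INNER_ETHERNET = 14  # inner Ethernet header carried inside the tunnel
VXLAN_HEADER = 8
OUTER_UDP = 8
OUTER_IPV4 = 20

VXLAN_OVERHEAD = INNER_ETHERNET + VXLAN_HEADER + OUTER_UDP + OUTER_IPV4


def required_underlay_mtu(guest_mtu=1500):
    """Underlay IP MTU needed to carry a guest packet of guest_mtu unfragmented."""
    return guest_mtu + VXLAN_OVERHEAD


def max_guest_mtu(underlay_mtu):
    """Largest guest MTU that fits once the VXLAN headers are added."""
    return underlay_mtu - VXLAN_OVERHEAD


def vxlan_fits(underlay_mtu, guest_mtu=1500):
    """True if the underlay can carry the encapsulated packet without fragmentation."""
    return required_underlay_mtu(guest_mtu) <= underlay_mtu


if __name__ == "__main__":
    print("Underlay MTU needed for 1500-byte guests:", required_underlay_mtu())
    print("Fits over a 1500 MTU WAN:", vxlan_fits(1500))
    print("Fits over a 1600 MTU transport:", vxlan_fits(1600))
```

With these assumptions a 1500 MTU WAN falls short by the size of the added headers, which is why an MTU of around 1600 is commonly recommended for VXLAN transport, and why a technology that runs at 1500 can be the simpler choice when the WAN cannot be changed.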

Author of VMware NSX Cookbook http://bit.ly/NSXCookbook

https://github.com/bayupw/PowerNSX-Scripts

https://nz.linkedin.com/in/bayupw | twitter @bayupw


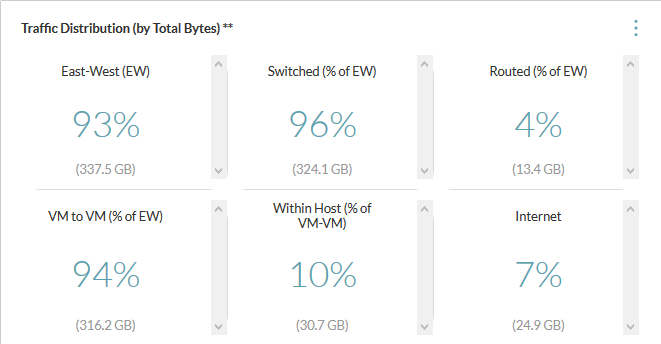

Somehow the vRNI screenshot in my previous reply didn't show up.

See the screenshot in this post: vRNI will tell you how much North-South traffic goes to the Internet.



The DLR, as pointed out, uses a distributed architecture, so it may scale out to terabits per second across many ESXi hosts in a cluster. By contrast, the ESR (Edge Services Router) is a VM form factor, so it has the VM limitation of 10 Gbps. For design purposes they have different roles: the DLR is used for East-West intra-DC traffic, while the Edge is used for North-South traffic leaving the DC.

10 Gbps of N-S traffic may be sufficient for most deployments, and with ECMP it may go up to 8 x 10 = 80 Gbps (although services may not be available in this design). In multitenant environments that replicate the same architecture per tenant, the total may be many multiples of this 10 or 80 Gbps. Although rare, if a tenant with services needs more than 10 Gbps, this may need to be addressed through a large-scale design, depending on the design requirements and use cases.
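The ECMP arithmetic above can be sketched as a small sizing helper. This is only a back-of-the-envelope illustration: the 10 Gbps per Edge and the limit of 8 ECMP Edges are the nominal figures quoted in this thread, not measured values, and the function names are hypothetical.

```python
import math

# Nominal figures quoted in this thread (assumptions, not measurements).
PER_EDGE_GBPS = 10   # rough per-Edge VM throughput
MAX_ECMP_EDGES = 8   # ECMP limit mentioned above


def aggregate_gbps(edge_count, per_edge_gbps=PER_EDGE_GBPS):
    """Nominal combined North-South throughput of edge_count Edges."""
    return edge_count * per_edge_gbps


def edges_needed(required_gbps, per_edge_gbps=PER_EDGE_GBPS):
    """Smallest number of Edges whose nominal throughput covers the requirement."""
    return math.ceil(required_gbps / per_edge_gbps)


def fits_single_ecmp_group(required_gbps):
    """True if the requirement fits within one group of up to 8 ECMP Edges."""
    return edges_needed(required_gbps) <= MAX_ECMP_EDGES


if __name__ == "__main__":
    print("Nominal ceiling with 8 Edges (Gbps):", aggregate_gbps(MAX_ECMP_EDGES))
    print("Edges needed for 25 Gbps:", edges_needed(25))
    print("80 Gbps fits one ECMP group:", fits_single_ecmp_group(80))
```

Real throughput depends on packet size, host CPU and which services are enabled, so a measured figure (for example from vRNI) should replace the nominal per-Edge value where available.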

The reference design guide has an example of a large-scale design on page 147:

VMware® NSX for vSphere Network Virtualization Design Guide ver 3.0

I think there were previously edge devices that were installed directly on a physical server, but I don't know whether they are still supported in NSX-MH or the Transformers train.

In the future, if the 10 Gbps per VM limit increases, Edge bandwidth may automatically increase to the new value. (I don't know the theory, but this may depend on CPU, memory or PCI bus architectures.) Technologies such as Intel DPDK are also increasing the throughput of Intel architectures, so it is reasonable to guess that this will increase in the future.

Compared to traditional switches and routers, NSX may have advantages in:

- Microsegmentation: being able to use stateful firewall rules between VMs

- Integration and service chaining with a large ecosystem of partners providing complementary solutions such as AV, IPS, advanced load balancing and L7 application firewalls

- Independence from the underlying physical hardware, so there is no need to replace existing hardware for SDN, since it is completely software based. Any physical hardware meeting the best practices of the reference design may be used. So if there are different brands across data centers, NSX may be used simultaneously across many (max 8) data centers with the Cross-vCenter architecture.

- Many use cases around security, business continuity, VDI, multitenancy and network services

- Hybrid cloud architectures such as VMC (VMware Cloud), where NSX is used to extend the same subnets

- Ease of manageability through vCenter and automation tools (vRA, vRO, Log Insight, vCloud, OpenStack)

- More than a switch or router: it provides services such as load balancing, firewall, NAT and VPN (SSL VPN, site-to-site IPsec VPN), so it may be used for these features.

Regards,


vmmedmed,

To take a non-technical approach to your question about the 'largest roadblocks to wider adoption', I would have to say people and process, not so much technology. Many organizations have not adopted a converged team, cloud role or full-stack position within their IT departments. Maintaining silos is, IMHO, one of the largest roadblocks to a fully automated, software-defined data center. On the other hand, if organizations are open to creating cross-silo, multidisciplinary teams that support all three legs of the SDDC stack (storage, network/security, and compute), then SDN would see more mass-market adoption. I truly feel this transition is inevitable, but legacy IT models will have a long tail and will take some time to catch up to an Amazon-style IaaS and a developer/application-friendly private/public cloud architecture.

2 cents

./Chuck