SAN / RAID / vCenter issue

(VMware Technology Network, vSphere™ Storage Discussions)


Interesting day today.

We have a virtualised vSphere 5.1 environment mixed with Solaris 10 and Sun Ray thin clients. We have load-balanced terminal servers, and the VM running the load-balancing software was unresponsive, as was the DC. In fact, 4 of our 10 hosts were unresponsive. Nobody could log on, so we set about trying to work out what the issue was.

First and foremost, we needed to get the hosts back into the datacenter. After much troubleshooting and some reboots, we managed to get all the hosts back in and things up and running. However, we found that a number of servers were greyed out and marked 'inaccessible'. Thankfully we had VMDK backups, so they were restored and more troubleshooting took place.

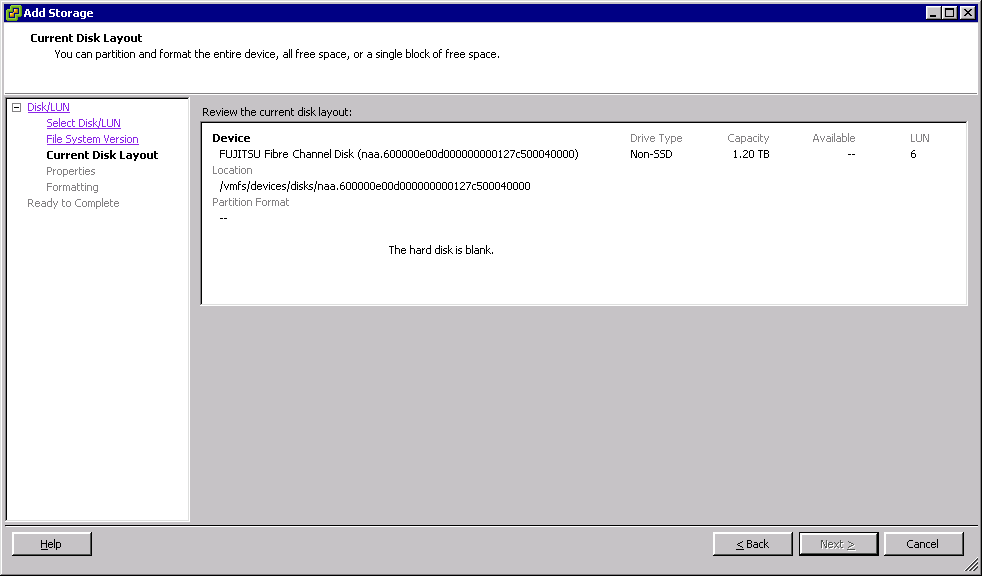

It would appear that the datastore containing the load balancer and the DC had gone offline at 03:30 and, some time later, re-presented itself as an empty disk. I rescanned the storage and HBAs, then tried to re-add the datastore, and this was the screen I was met with:

...and after checking the events, I found this:

Our SAN is a Fujitsu DX90 and I have a ticket open with Fujitsu, but am I right in assuming this is an issue with the RAID on the SAN? I'm pretty sure it's not FC-related, as we'd be seeing a wealth of other connection issues. I've been following this KB about identifying disks and accessing the volume via SSH: http://goo.gl/QHJV1 - but so far I've been unable to access the volume, which leads me to believe that it is in fact a RAID issue.

My other question is: should the SAN be intelligent enough to do something about this if an entire RAID goes down? Shouldn't there be some kind of redundancy? And if not, is this something you might find on a newer SAN?

Or could all of this have been caused by vCenter, with something erroring at 03:30 and wiping the RAID?

Accepted Solutions


Problem solved. I think it was a SCSI reservation issue.

Rebooted the hosts, released the LUN reservation in the SAN GUI, rescanned, and we're back in business.
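If you suspect the same thing, one way to look for it before touching the array is to count SCSI status 0x18 (RESERVATION CONFLICT) failures per device in a copy of vmkernel.log pulled off the host. The following is a minimal Python sketch, assuming the `failed H:0x.. D:0x.. P:0x..` line layout that appears in the vmkernel.log excerpt later in this thread; the function name is only illustrative, and an empty result does not rule a reservation out, since conflicts can also be logged in other forms.

```python
import re
from collections import Counter

# SCSI status byte 0x18 means RESERVATION CONFLICT (SCSI standard).
RESERVATION_CONFLICT = 0x18

# Assumed layout: ... dev "naa.xxx" failed H:0x5 D:0x0 P:0x0 ...
FAILED_RE = re.compile(r'dev "([^"]+)" failed H:0x[0-9a-fA-F]+ D:(0x[0-9a-fA-F]+)')


def reservation_conflicts(lines):
    """Count failed commands with device status RESERVATION CONFLICT, per device ID."""
    counts = Counter()
    for line in lines:
        m = FAILED_RE.search(line)
        # group(2) is the D: (device status) field, parsed as hex.
        if m and int(m.group(2), 16) == RESERVATION_CONFLICT:
            counts[m.group(1)] += 1
    return counts
```

For example, `reservation_conflicts(open("vmkernel.log"))` returns a `Counter` keyed by naa ID; a LUN with a non-zero count is a candidate for the reservation release described above.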

---

Note: Discussion successfully moved from VMware vCenter™ Server to VMware vSphere™ Storage

---

Hi

Have you been able to fix things together with Fujitsu?

If your virtual environment loses access to its storage, it's basically one of the worst things that could happen.

Your host losing access to the datastore can have multiple causes: cables, switches, storage controllers, etc.

To avoid downtime due to a broken RAID, whether from faulty controllers, a double disk failure or anything else, you need a mirrored SAN (DataCore, StoreVirtual, 3PAR Remote Copy, EMC VPLEX, etc.) so your hosts can fail over to the second storage node.

Regards

Patrick

---

Hi Patrick,

I've got a ticket open with VMware support, who should be able to shed some light on what's happened. I'm leaning towards the storage controller, though.

We've also got the ball rolling on purchasing a new SAN in the near future.

---

No SAN in general is going to magically handle a RAID array failure. A specific answer depends on what exactly happened.

If there's a concern that RAID5 isn't enough because two disks may fail, RAID6 / RAIDMP is often the answer. Most modern SANs will reserve spare capacity on one or more disks, allowing for hot rebuilds. It's easy to set up a RAID5 array, sit around doing nothing when a disk fails, and then get upset when a second one fails and the RAID goes offline. More than one person on this forum has recommended the use of RAID0. In short, any SAN is only as intelligent as you are.
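To put rough numbers on that trade-off, here is a small Python sketch of usable capacity versus the number of disk failures each RAID level is guaranteed to survive. It assumes identical disks and textbook RAID layouts and ignores hot spares and formatting overhead; the function name and the example figures (eight 2 TB disks) are purely illustrative, and a particular array's implementation may differ.

```python
def raid_summary(level, disks, disk_tb):
    """Return (usable capacity, disk failures guaranteed to be survivable).

    Assumes identical disks and textbook layouts; hot spares, metadata and
    vendor-specific layouts are ignored.
    """
    if level == 0:
        # Striping only: all capacity usable, any single failure is fatal.
        if disks < 2:
            raise ValueError("RAID 0 needs at least 2 disks")
        return disks * disk_tb, 0
    if level == 5:
        # One disk's worth of capacity goes to parity.
        if disks < 3:
            raise ValueError("RAID 5 needs at least 3 disks")
        return (disks - 1) * disk_tb, 1
    if level == 6:
        # Two disks' worth of capacity goes to (dual) parity.
        if disks < 4:
            raise ValueError("RAID 6 needs at least 4 disks")
        return (disks - 2) * disk_tb, 2
    if level == 10:
        # Striped mirrors: half the raw capacity. Losing both halves of one
        # mirror is fatal, so only one failure is guaranteed to be survivable.
        if disks < 4 or disks % 2:
            raise ValueError("RAID 10 needs an even number of disks, at least 4")
        return (disks // 2) * disk_tb, 1
    raise ValueError(f"unsupported RAID level: {level}")


for level in (0, 5, 6, 10):
    usable, survives = raid_summary(level, disks=8, disk_tb=2)
    print(f"RAID {level}: {usable} TB usable, survives {survives} failure(s)")
```

With eight 2 TB disks this gives 16 TB for RAID 0, 14 TB for RAID 5, 12 TB for RAID 6 and 8 TB for RAID 10. RAID 10 may survive several failures if they land in different mirrors, but only one is guaranteed.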

You certainly have a few options, but unless they involve "buy a second SAN and replicate", it's only marketing hype. HP's LeftHand promises full array redundancy with a single SAN, but that's only because one "SAN" is purchased as a cluster of two mirrored units.

In your case, if you suspect a RAID issue, can't you log on to the SAN's interface and have a look? Any complete RAID collapse should be splattering your screen with red lights and failure alarms.

---

Morning,

The SAN front end was one of the first places I looked after we had managed to get everything back up again. All lights were green, but on further investigation there were 'some' disk errors, though apparently nothing that could lead to data loss. Hence the ticket open with VMware.

Our SAN is a Fujitsu DX90 S1, which is struggling with our environment. It does have features such as remote copy, but it's not licensed, and if we were to spend money on licensing something like that, we may as well get a newer bit of kit: something which is well within its life expectancy, is still supported and carries those sorts of features. That's our way of thinking, anyway.

Also, RAID 0 is a little bit too scary for us. We have a number of RAID 10 datastores, but it just so happened that all of this took place on one of the RAID 5s.

---

Stop what you are doing. Contact VMware support and let them analyze it. It could be something as simple as a flipped bit in the partition table, or it could be a form of corruption. It might also be something on the array side... who knows, but in this case DO NOT take any risks.

---

I should probably plug those SAS cables back in then.

Don't worry, it's hands off. We've been able to restore the VMs to other datastores (we have 14 other shared datastores, thankfully), so it's business as usual. And I've already had a call from VMware support, and the case has been escalated to Storage.

I'll post here when we know what's happened or what we did to resolve it.

---

I spoke to a very nice and knowledgeable person from VMware support yesterday, and she confirmed what I thought I already knew: vSphere can't see the LUN due to an APD (All Paths Down) issue:

```
/var/log # cat /var/log/vmkernel.log | grep naa.600000e00d000000000127c500040000
2013-05-23T18:38:20.336Z cpu6:10283)ScsiDeviceIO: 2316: Cmd(0x4124007885c0) 0x1a, CmdSN 0x13b92 from world 0 to dev "naa.600000e00d000000000127c500040000" failed H:0x5 D:0x0 P:0x0 Possible sense data: 0x0 0x0 0x0.
2013-05-23T18:39:00.342Z cpu7:10363)ScsiDeviceIO: 2316: Cmd(0x4124007885c0) 0x28, CmdSN 0x13b93 from world 0 to dev "naa.600000e00d000000000127c500040000" failed H:0x5 D:0x0 P:0x0 Possible sense data: 0x0 0x0 0x0.
2013-05-23T18:39:00.342Z cpu6:93551)Partition: 414: Failed read for "naa.600000e00d000000000127c500040000": I/O error
2013-05-23T18:39:00.342Z cpu6:93551)Partition: 1020: Failed to read protective mbr on "naa.600000e00d000000000127c500040000" : I/O error
2013-05-23T18:39:00.342Z cpu6:93551)WARNING: Partition: 1129: Partition table read from device naa.600000e00d000000000127c500040000 failed: I/O error
```
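For anyone reading these lines later: in a failed ScsiDeviceIO line, the hex value after `Cmd(...)` is the SCSI opcode, and the `H:`/`D:`/`P:` triple is the host, device and plugin status. Here is a minimal Python sketch that decodes such a line. The status tables are a small subset based on VMware's KB on interpreting SCSI sense codes and the SCSI standard, the function name is illustrative, and the regex assumes exactly the line layout shown above; treat it as a reading aid, not a diagnostic tool.

```python
import re

# Host status codes (subset), as listed in VMware's SCSI sense code KB.
HOST_STATUS = {
    0x0: "OK",
    0x1: "NO_CONNECT",
    0x2: "BUS_BUSY",
    0x3: "TIMEOUT",
    0x4: "BAD_TARGET",
    0x5: "ABORT",
    0x7: "ERROR",
    0x8: "RESET",
}

# Device (SCSI) status codes (subset) from the SCSI standard.
DEVICE_STATUS = {
    0x00: "GOOD",
    0x02: "CHECK CONDITION",
    0x08: "BUSY",
    0x18: "RESERVATION CONFLICT",
    0x28: "TASK SET FULL",
}

# A few common SCSI command opcodes.
OPCODES = {
    0x1A: "MODE SENSE(6)",
    0x28: "READ(10)",
    0x2A: "WRITE(10)",
}

# Captures: opcode, device ID, host status, device status, plugin status.
LINE_RE = re.compile(
    r'Cmd\([^)]*\) (0x[0-9a-fA-F]+).*?dev "([^"]+)" failed '
    r'H:(0x[0-9a-fA-F]+) D:(0x[0-9a-fA-F]+) P:(0x[0-9a-fA-F]+)'
)


def decode(line):
    """Return a dict describing a failed ScsiDeviceIO line, or None if it doesn't match."""
    m = LINE_RE.search(line)
    if m is None:
        return None
    dev = m.group(2)
    op, h, d, p = (int(m.group(i), 16) for i in (1, 3, 4, 5))
    return {
        "device": dev,
        "command": OPCODES.get(op, hex(op)),
        "host": HOST_STATUS.get(h, hex(h)),
        "device_status": DEVICE_STATUS.get(d, hex(d)),
        "plugin": hex(p),
    }
```

Applied to the first log line above, it reports a MODE SENSE(6) command with host status ABORT and device status GOOD: the command was aborted on the host side rather than failed with an error status from the array, which would fit the APD diagnosis.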

Later I'll be getting in touch with Oracle, who support our FC switches, to see if they can shed any light from a zoning point of view. I suspect, though, that the data is already gone and I'll have to bite the bullet, format that datastore and present it again.

---

Ouch, that is painful... sorry to hear that.
