VMs on the same vSwitch cannot communicate

Hello,

We have about 10 ESXi servers. Most of them run the same version (ESXi 4.1 build 721871).

I have a problem with only one of them:

The VMs on the same vSwitch cannot communicate with each other.

They can communicate with VMs on another vSwitch on the same ESXi host, with physical equipment, and even with VMs on other ESXi hosts.

I have 2 vSwitches on the problematic ESXi host:

- DMZ (equipment in the range 192.168.0.x/24):
  - Machine1
  - Machine2
  - Machine3
- LAN (equipment in the range 10.0.x.x/16):
  - Machine4
  - Machine5

==> Machines 1, 2 and 3 cannot communicate with (or ping) each other.

Machines 4 and 5 can communicate with (and ping) Machines 1, 2 and 3 (there is a router between the 2 networks).

Machines 4 and 5 cannot communicate with each other.

Ping answers: "Destination host unreachable".

Machines 1, 2 and 3 can communicate with DMZ computers running on another ESXi host, and with physical computers.

Machines 4 and 5 can communicate with LAN computers running on another ESXi host, and with physical computers.

The network configuration inside the VMs is correct (I had already migrated them from another ESXi host without having to change the network configuration inside the VMs)...

Do you have any idea? What should I look for?

Best regards,

J.


Just a guess. Did you already verify the port configuration for the uplinks on the physical switch?

Which vSwitch/Port group policies do you use?

André


Hi,

Welcome to the communities.

It seems the vSwitch is not configured properly.

Please check the port groups.

vSwitches can be divided into smaller units called port groups. There are three types of port groups:

- Virtual Machine
- Service Console
- VMkernel (for vMotion, VMware FT logging and IP storage)


Hello André and Ethan,

Thank you for your answers.

As I said before, virtual machines on the same vSwitch cannot communicate with each other. Why?

But they can communicate with physical or virtual machines (on another ESX host) connected to the same network.

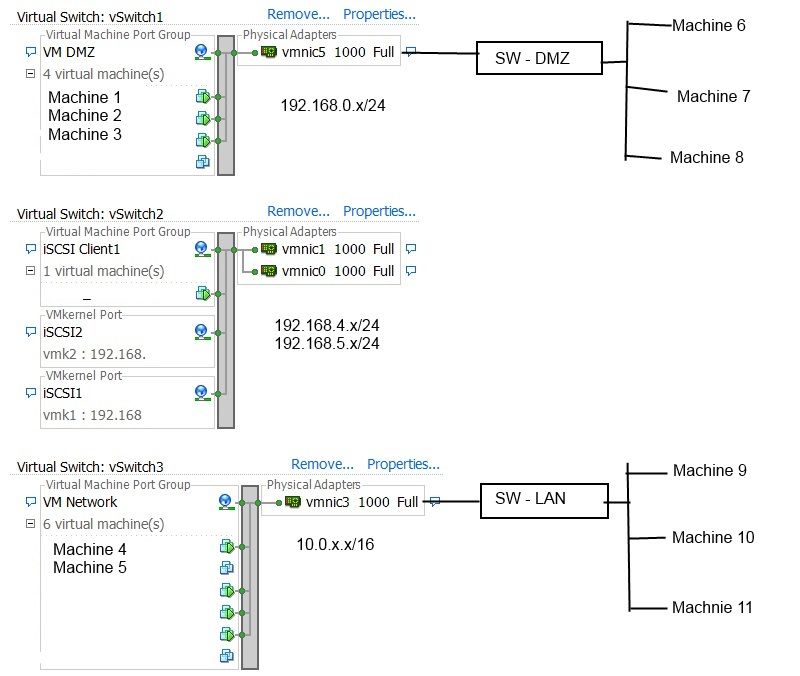

Here is a screenshot of my network configuration on the problematic ESXi host.

André,

Does the port configuration on my physical switch affect communication between virtual machines?

Nothing is configured on the physical switches.

Which policies do you mean?

Ethan,

My vSwitches are configured for virtual machines.

J.

Message was edited by: actl - 2013-03-08 Modification of network layout


Hello,

I'm looking for any idea that could help me solve this problem...

Are there debug options for the network? tcpdump does not work on VM vSwitches.

Maybe this is clearer:

Machines 1, 2 and 3 cannot communicate with each other.

Machines 1, 2 and 3 can communicate with Machines 4 and 5 (through a router/firewall).

Machines 1, 2 and 3 can communicate with Machines 6, 7 and 8 (virtual or physical).

Machines 4 and 5 cannot communicate with each other.

Machines 4 and 5 can communicate with Machines 1, 2 and 3 (through a router/firewall).

Machines 4 and 5 can communicate with Machines 9, 10 and 11 (virtual or physical).
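Encoding the reported results as a small table makes the pattern easy to check: every failing pair is two VMs sharing a vSwitch on the problem host, and nothing else fails. This is a minimal sketch; the names `LOCATION`, `OBSERVED` and `same_vswitch_on_problem_host` are hypothetical, and placing Machines 6 to 11 "elsewhere" (physical, or VMs on other ESXi hosts) is an assumption based on the description above.

```python
from itertools import combinations

# (host, network) for each machine. Machines 1-5 are on the problem host as
# described in the thread; "elsewhere" for 6-11 is an assumption.
LOCATION = {
    1: ("problem-host", "DMZ"), 2: ("problem-host", "DMZ"), 3: ("problem-host", "DMZ"),
    4: ("problem-host", "LAN"), 5: ("problem-host", "LAN"),
    **{m: ("elsewhere", "DMZ") for m in (6, 7, 8)},
    **{m: ("elsewhere", "LAN") for m in (9, 10, 11)},
}

# Reported results: True = can communicate, False = cannot.
OBSERVED = {}
for a, b in combinations((1, 2, 3), 2):
    OBSERVED[(a, b)] = False
OBSERVED[(4, 5)] = False
for a in (1, 2, 3):
    for b in (4, 5):
        OBSERVED[(a, b)] = True  # routed through the router/firewall
    for b in (6, 7, 8):
        OBSERVED[(a, b)] = True
for a in (4, 5):
    for b in (9, 10, 11):
        OBSERVED[(a, b)] = True

def same_vswitch_on_problem_host(a, b):
    """Hypothesis: a pair fails only if both ends share a vSwitch on the problem host."""
    return LOCATION[a][0] == "problem-host" and LOCATION[a] == LOCATION[b]

def hypothesis_matches():
    """True if the hypothesis predicts every reported result."""
    return all(ok != same_vswitch_on_problem_host(a, b) for (a, b), ok in OBSERVED.items())

failing = sorted(pair for pair, ok in OBSERVED.items() if not ok)
print(failing)               # [(1, 2), (1, 3), (2, 3), (4, 5)]
print(hypothesis_matches())  # True
```

The point of the exercise is that the fault is confined to traffic switched locally inside the problem host, which is why the physical switch configuration is a less likely suspect.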


Looking deeper into the ESXi configuration, I found this, but I don't know whether it is relevant to my problem.

On the problematic ESXi host:

    ~ # vsish -e get /net/portsets/vSwitch3/ports/67108868/status
    port {
       portCfg:VM Network
       dvPortId:
       clientName:Machine 4
       world leader:16830
       flags:port flags: 0x1003a3 -> IN_USE ENABLED WORLD_ASSOC RX_COALESCE TX_COALESCE TX_COMP_COALESCE CONNECTED
       Passthru status:: 0x8 -> DISABLED_BY_HOST
       ethFRP:frame routing {
          requested:filter {
             flags:0x0000000b
             unicastAddr:00:0c:29:7c:xx:xx:
             LADRF:[0]: 0x0
                   [1]: 0x0
          }
          accepted:filter {
             flags:0x0000000b
             unicastAddr:00:0c:29:7c:xx:xx:
             LADRF:[0]: 0x0
                   [1]: 0x0
          }
       }
       filter supported features:features: 0 -> NONE
       filter properties:properties: 0 -> NONE
    }

On another ESXi host (the others are the same), where the VMs can communicate with each other:

    ~ # vsish -e get /net/portsets/vSwitch1/ports/33554441/status
    port {
       portCfg:VM Network
       dvPortId:
       clientName:Machine 9
       world leader:18894
       flags:port flags: 0x100023 -> IN_USE ENABLED WORLD_ASSOC CONNECTED
       Passthru status:: 0x1 -> WRONG_VNIC
       ethFRP:frame routing {
          requested:filter {
             flags:0x0000002b
             unicastAddr:00:0c:29:15:xx:xx:
             LADRF:[0]: 0xc00000
                   [1]: 0x400000
          }
          accepted:filter {
             flags:0x0000002b
             unicastAddr:00:0c:29:15:xx:xx:
             LADRF:[0]: 0xc00000
                   [1]: 0x400000
          }
       }
       filter supported features:features: 0 -> NONE
       filter properties:properties: 0 -> NONE
    }

Does anyone have information about this?
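Comparing the two dumps by eye is error-prone, so here is a minimal sketch that XORs the flag words quoted above and lists exactly which bits differ. It only does bit arithmetic on values copied from the two posts; the output does not say which bit maps to which name, so no mapping is assumed. The helper name `differing_bits` is hypothetical.

```python
# Flag words copied from the two vsish port dumps above.
PROBLEM_PORT_FLAGS = 0x1003A3    # Machine 4, problem host
GOOD_PORT_FLAGS = 0x100023       # Machine 9, working host
PROBLEM_FILTER_FLAGS = 0x0000000B
GOOD_FILTER_FLAGS = 0x0000002B

def differing_bits(a: int, b: int) -> list[int]:
    """Return the single-bit masks that are set in exactly one of a and b."""
    diff = a ^ b
    return [1 << i for i in range(diff.bit_length()) if (diff >> i) & 1]

port_bits = differing_bits(PROBLEM_PORT_FLAGS, GOOD_PORT_FLAGS)
filter_bits = differing_bits(PROBLEM_FILTER_FLAGS, GOOD_FILTER_FLAGS)

print([hex(b) for b in port_bits])    # ['0x80', '0x100', '0x200']
print([hex(b) for b in filter_bits])  # ['0x20']

# Which side has each differing bit set:
print(all(PROBLEM_PORT_FLAGS & b for b in port_bits))   # True: extra bits are on the problem port
print(all(GOOD_FILTER_FLAGS & b for b in filter_bits))  # True: the filter bit is only on the good port
```

So the problem port carries exactly three extra port-flag bits, matching the three extra names in its decoded list (RX_COALESCE, TX_COALESCE, TX_COMP_COALESCE), while its frame-routing filter lacks one bit (0x20) that the working port has and its LADRF words are all zero.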


More and more strange...

I added a new vSwitch (named "VM Network 2") and connected it to another physical NIC.

I connected this physical NIC to another port on my switch.

I moved all the VMs from the "VM Network" vSwitch to "VM Network 2".

All the VMs could ping each other.

After a few minutes, communication between the VMs on the same vSwitch was lost again.

Looking at the ARP table of each VM, I can see that the MAC address becomes "invalid".

It looks like this problem (but on the VMware platform).


It looks like you have coalescing enabled for the NICs on the problem host. What kind of NICs are they? Have you tried turning coalescing off?

What may be happening is that, in the higher data-rate situation of VMs communicating on the same vSwitch, the rapid rate is triggering a race condition in the vNIC used by the VMs.

To turn off interrupt coalescing for a VM:

Go to VM Settings → Options tab → Advanced → General → Configuration Parameters and add an entry for ethernetX.coalescingScheme with the value disabled.

To turn it off for a host:

Click the host, go to the Configuration tab → Advanced Settings, and set the networking performance option CoalesceDefaultOn to 0 (disabled).
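The per-VM setting boils down to one `ethernetN.coalescingScheme = "disabled"` line per virtual NIC in the VM's configuration. If you prefer to prepare that change on a copy of the .vmx file (with the VM powered off) rather than through the Configuration Parameters dialog, here is a minimal sketch. The function `disable_coalescing` is hypothetical, and it assumes each adapter is declared by an `ethernetN.present` line; the dialog described above remains the safer route.

```python
import re

def disable_coalescing(vmx_text: str) -> str:
    """Hypothetical helper: return vmx_text with a
    ethernetN.coalescingScheme = "disabled" line for every adapter that has
    an ethernetN.present entry."""
    adapters = sorted(set(re.findall(r"^(ethernet\d+)\.present\s*=", vmx_text, re.M)))
    # Drop every existing coalescingScheme line, then append one per present adapter.
    kept = [line for line in vmx_text.splitlines()
            if not re.match(r"ethernet\d+\.coalescingScheme\s*=", line)]
    kept += [f'{nic}.coalescingScheme = "disabled"' for nic in adapters]
    return "\n".join(kept) + "\n"

vmx = 'ethernet0.present = "TRUE"\nethernet0.virtualDev = "vmxnet3"\n'
print(disable_coalescing(vmx), end="")
```

Removing any existing `coalescingScheme` line first keeps the result idempotent: running it twice does not leave duplicate entries.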


Hello daboochmeister,

Thank you for your answer.

I tried configuring 2 of our VMware guests with your settings, but it did not help.

VMware guests on the same vSwitch still cannot communicate with each other on this ESXi host.

VMware guests can communicate (ping) with physical computers and with virtual machines located on another ESXi host.

On this ESXi host, I have 2 NICs:

- Intel Corporation 82576 Gigabit ET Quad Port (http://accessories.euro.dell.com/sna/productdetail.aspx?c=uk&l=en&s=dhs&cs=ukdhs1&sku=540-10793), connected to our iSCSI storage only
- Broadcom NetXtreme II BCM5716 (onboard on our Dell R210), connected to our network switch

We have about 8 R210 servers with the same ESXi version and configuration (we don't have any problems with them).

The only difference is the Intel 82576 NIC. On the other ESXi servers, I have Broadcom NetXtreme quad-port NICs for iSCSI storage.

Intel vmnic0 to vmnic3 (for iSCSI):

    ~ # ethtool -i vmnic1
    driver: igb
    version: 3.2.10
    firmware-version: 1.5-1
    bus-info: 0000:03:00.1
    ~ # vmkchdev -l |grep vmnic1
    000:003:00.1 8086:10e8 8086:a02c vmkernel vmnic1

Hardware compatibility list seems OK:

    ~ # vmkload_mod -s igb | grep Version
    Version: Version 3.2.10, Build: 249663, Interface: 9.0, Built on: Sep 22 2011

Broadcom vmnic4 to vmnic5 (for network):

    ~ # ethtool -i vmnic4
    driver: bnx2
    version: 2.1.12b.v41.3
    firmware-version: 6.4.5 bc 5.2.3 NCSI 2.0.11
    bus-info: 0000:05:00.0
    ~ # vmkchdev -l |grep vmnic4
    000:005:00.0 14e4:163b 1028:02a5 vmkernel vmnic4
    ~ # vmkload_mod -s bnx2 | grep Version
    Version: Version 2.1.12b.v41.3, Build: 00000, Interface: ddi_9_1 Built on: Oct 28 2011

Hardware compatibility list seems OK.
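The VMware Compatibility Guide looks up I/O devices by four PCI IDs (VID, DID, SVID, SSID), which are exactly the two `xxxx:xxxx` pairs that `vmkchdev -l` prints. A minimal sketch that splits the two lines quoted above so the IDs can be pasted into that search; `PciIds` and `parse_vmkchdev` are hypothetical names, and the five-column layout is taken from these two lines rather than from documentation. For reference, 8086 is Intel's PCI vendor ID, 14e4 is Broadcom's and 1028 is Dell's.

```python
from typing import NamedTuple

class PciIds(NamedTuple):
    vid: str   # vendor ID
    did: str   # device ID
    svid: str  # subsystem vendor ID
    ssid: str  # subsystem device ID
    name: str  # vmnic name

def parse_vmkchdev(line: str) -> PciIds:
    """Split a 'vmkchdev -l' line: address, vid:did, svid:ssid, owner, name."""
    _addr, vid_did, svid_ssid, _owner, name = line.split()
    vid, did = vid_did.split(":")
    svid, ssid = svid_ssid.split(":")
    return PciIds(vid, did, svid, ssid, name)

intel = parse_vmkchdev("000:003:00.1 8086:10e8 8086:a02c vmkernel vmnic1")
broadcom = parse_vmkchdev("000:005:00.0 14e4:163b 1028:02a5 vmkernel vmnic4")
print(intel)     # PciIds(vid='8086', did='10e8', svid='8086', ssid='a02c', name='vmnic1')
print(broadcom)  # PciIds(vid='14e4', did='163b', svid='1028', ssid='02a5', name='vmnic4')
```

Matching on all four IDs matters because the compatibility entry (and the recommended driver version) can differ between a vendor's retail card and an OEM-branded variant of the same chip.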

Any idea that could help?

Kind regards,


Hmm. I had high hopes that coalescing was the issue. Did you run vsish again after changing the settings, to make sure the problematic host no longer shows the coalescing flags?

I'm losing track, but are you using the vmxnet3 driver in your VMs? If so, one thing to try would be switching to the E1000 vNIC on the VMs on the problematic host. While vmxnet3 has a lot of advantages, it IS still less tested than the native E1000 drivers that come with the guest OSes (e.g., we just stumbled across a quite interesting defect in vmxnet3 involving more than 32 concurrent multicasts).

If E1000 "fixes" it, then the question becomes whether you want to stick with E1000, or do more work to pin down exactly which feature/capability of the vmxnet3 driver is problematic, so you can try to configure it differently or turn it off. I don't know how much the advantages of vmxnet3 (lower CPU usage, higher performance) matter in your case.

Also consider: are you on the most recent vSphere version you can run? The NIC drivers used at the host level are updated regularly, and the problem might be at that level.
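Before switching adapters, it helps to know which VMs on the problem host actually use vmxnet3. The adapter type is recorded in each VM's .vmx file as `ethernetN.virtualDev`. A minimal, read-only sketch that reports the vmxnet3 adapters in a .vmx file's text; `vmxnet3_adapters` is a hypothetical helper, and adapters with no `virtualDev` line are simply not reported. The actual switch to E1000 is normally done by removing the adapter and adding an E1000 one in the VM settings.

```python
import re

def vmxnet3_adapters(vmx_text: str) -> list[str]:
    """Hypothetical helper: return the ethernetN adapters whose virtualDev is vmxnet3."""
    pattern = re.compile(r'^(ethernet\d+)\.virtualDev\s*=\s*"([^"]*)"', re.M)
    return [nic for nic, dev in pattern.findall(vmx_text) if dev.lower() == "vmxnet3"]

sample = (
    'ethernet0.present = "TRUE"\n'
    'ethernet0.virtualDev = "vmxnet3"\n'
    'ethernet1.present = "TRUE"\n'
    'ethernet1.virtualDev = "e1000"\n'
)
print(vmxnet3_adapters(sample))  # ['ethernet0']
```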