- Re: Change in the IOPS limit calculation between 5...


Hi everybody,

Some of my users complained about degraded performance after I migrated their host from 5.1 to 5.5. After a bit of poking around, I found that simply removing the IOPS limits restored their previous performance...

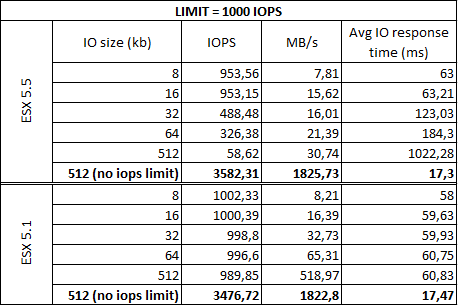

So I ran a really simple test on 2 VMs. Here is the setup:

Host 1: vSphere 5.1, with an SSD card plugged into it (OCZ ZDrive R4). One W2k12 R2 VM running on it, VM version 9, vmtools build 9221. 2 disks, limited to 500 IOPS each, so 1000 IOPS in total.

Host 2: vSphere 5.5, same SSD card model plugged in. One clone of the first VM, upgraded to VM version 10 and vmtools build 9349. The IOPS limit is the same.

Storage I/O Control is disabled on both hosts. I also ran some tests with SIOC enabled, but they were not conclusive.

Test procedure:

1. Took this file: http://vmktree.org/iometer/OpenPerformanceTest32.icf

2. Used IOMeter v1.1.0 (from the Iometer project downloads page, prebuilt binaries for x86-64).

3. Launched the "Max Throughput-100%Read" specification unchanged, aside from the "Transfer Request Size", which I changed from 8 KB to 512 KB.

Here are the results:

As you can see, the limit is handled completely differently from one version to the other... Moreover, the average response time on ESXi 5.5 is completely insane!

I searched around for an explanation about this change, but I didn't find anything...

So, how can I revert to the previous behaviour? Because we have different types of workload and different I/O sizes, I can't use a simple formula to work out a proper limit. I need a hard IOPS limit, regardless of I/O size...

Thanks all,

-Vincent.

Accepted Solutions


Hi all,

After opening a support case and running dozens of tests, I found these links, which explain this "weird" behaviour:

New disk IO scheduler used in vSphere 5.5

vSphere 5.5 and disk limits (mClock scheduler caveat)

In brief, the new disk I/O scheduler introduced in vSphere 5.5 counts a single I/O as at most 32 KB. If your I/Os are bigger, the limit you specify is accounted in multiples of 32 KB, so one large I/O counts as several against the limit... Please read these links; Duncan explains it really well... Kudos to him!
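To make the arithmetic concrete, here is a minimal sketch of how I understand the accounting. It assumes each I/O is charged as its size rounded up to whole 32 KB units; the names `COST_UNIT_BYTES`, `io_cost` and `effective_iops` are made up for illustration and are not anything from ESXi itself.

```python
import math

# Assumption: each I/O is charged in whole 32 KB units, rounded up.
COST_UNIT_BYTES = 32 * 1024


def io_cost(io_size_bytes: int) -> int:
    """Number of 32 KB units a single I/O is charged as (at least 1)."""
    return max(1, math.ceil(io_size_bytes / COST_UNIT_BYTES))


def effective_iops(configured_limit: int, io_size_bytes: int) -> float:
    """Real I/Os per second that fit under the configured limit."""
    return configured_limit / io_cost(io_size_bytes)


if __name__ == "__main__":
    limit = 500  # per-disk limit used in the test above
    for size_kb in (8, 32, 64, 512):
        size = size_kb * 1024
        print(f"{size_kb:>4} KB I/O: cost {io_cost(size):>2} unit(s), "
              f"about {effective_iops(limit, size):.2f} real IOPS")
```

Under that assumption, a 512 KB request is charged as 16 units, so a 500 IOPS limit allows only about 31 real I/Os per second on that disk, while 8 KB requests still get the full 500.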

I am just annoyed that VMware doesn't communicate enough about these changes, as they are pretty big!

Regards,

Vincent.


Hi Kydo201110141,

Have you had any luck finding a solution for this issue other than disabling the limits?

I have a customer who now has the exact same issue and it is causing them quite a lot of pain.

Regards

Steve


Hi Steve,

At the moment, no solution. But I have opened a case with support and we are investigating this issue... I'll let you know how it progresses...

Regards,

Vincent.



Hi,

I recently found a post dealing with this problem and thought it was worth sharing with you all.

http://anthonyspiteri.net/esxi-5-5-iops-limit-mclock-scheduler/

Long story short, there is an advanced setting that can be set to 0 to revert to the previous IOPS calculation. I tested it and it worked.