vSphere with Tanzu - Guest cluster deployment stuck at Control Plane VM

I have a main vCenter and three nested ESXi hosts with vSAN, on which I am trying to test Tanzu, using a VDS (not NSX-T) with HAProxy as the load balancer.

All management interfaces are on the existing network; I deployed two new VLANs, one for Frontend and one for Workload.

I managed to get the Supervisor Cluster up and running and created Namespaces. When I try to deploy a guest cluster with a simple .yaml, I get stuck.

The control plane VM gets deployed and powered on, the HAProxy .cfg is populated with frontend/backend IPs, and the VM gets an IP (which does not respond to ping), but then the deployment stalls before the workers are deployed.

kubectl get events -w

LAST SEEN TYPE REASON OBJECT MESSAGE

0s Warning ReconcileFailure wcpcluster/simple unexpected error while reconciling control plane endpoint for simple: failed to reconcile loadbalanced endpoint for WCPCluster tanzu-ns-01/simple: failed to get control plane endpoint for Cluster tanzu-ns-01/simple: VirtualMachineService LB does not yet have VIP assigned: VirtualMachineService LoadBalancer does not have any Ingresses

0s Normal CreateVMServiceSuccess virtualmachineservice/simple-control-plane-service CreateVMService success

0s Warning ReconcileFailure wcpcluster/simple unexpected error while reconciling control plane endpoint for simple: failed to reconcile loadbalanced endpoint for WCPCluster tanzu-ns-01/simple: failed to get control plane endpoint for Cluster tanzu-ns-01/simple: VirtualMachineService LB does not yet have VIP assigned: VirtualMachineService LoadBalancer does not have any Ingresses

0s Warning ReconcileFailure wcpcluster/simple unexpected error while reconciling control plane endpoint for simple: failed to reconcile loadbalanced endpoint for WCPCluster tanzu-ns-01/simple: failed to get control plane endpoint for Cluster tanzu-ns-01/simple: VirtualMachineService LB does not yet have VIP assigned: VirtualMachineService LoadBalancer does not have any Ingresses

0s Warning ReconcileFailure wcpcluster/simple unexpected error while reconciling control plane endpoint for simple: failed to reconcile loadbalanced endpoint for WCPCluster tanzu-ns-01/simple: failed to get control plane endpoint for Cluster tanzu-ns-01/simple: VirtualMachineService LB does not yet have VIP assigned: VirtualMachineService LoadBalancer does not have any Ingresses

0s Warning ReconcileFailure wcpcluster/simple unexpected error while reconciling control plane endpoint for simple: failed to reconcile loadbalanced endpoint for WCPCluster tanzu-ns-01/simple: failed to get control plane endpoint for Cluster tanzu-ns-01/simple: VirtualMachineService LB does not yet have VIP assigned: VirtualMachineService LoadBalancer does not have any Ingresses

0s Warning ReconcileFailure wcpcluster/simple unexpected error while reconciling control plane endpoint for simple: failed to reconcile loadbalanced endpoint for WCPCluster tanzu-ns-01/simple: failed to get control plane endpoint for Cluster tanzu-ns-01/simple: VirtualMachineService LB does not yet have VIP assigned: VirtualMachineService LoadBalancer does not have any Ingresses

0s Normal Reconcile gateway/simple-control-plane-service Success

0s Normal Reconcile gateway/simple-control-plane-service Success

0s Normal SuccessfulCreate machinedeployment/simple-workers-kszj2 Created MachineSet "simple-workers-kszj2-d4c6b6f49"

0s Normal SuccessfulCreate machineset/simple-workers-kszj2-d4c6b6f49 Created machine "simple-workers-kszj2-d4c6b6f49-m6sg9"

0s Normal SuccessfulCreate machineset/simple-workers-kszj2-d4c6b6f49 Created machine "simple-workers-kszj2-d4c6b6f49-795l5"

0s Normal SuccessfulCreate machineset/simple-workers-kszj2-d4c6b6f49 Created machine "simple-workers-kszj2-d4c6b6f49-5z5zg"

0s Warning ReconcileFailure wcpmachine/simple-control-plane-hfrm7-7twzk vm is not yet created: vmware-system-capw-controller-manager/WCPMachine/infrastructure.cluster.vmware.com/v1alpha3/tanzu-ns-01/simple/simple-control-plane-hfrm7-7twzk

0s Warning ReconcileFailure wcpmachine/simple-control-plane-hfrm7-7twzk vm is not yet created: vmware-system-capw-controller-manager/WCPMachine/infrastructure.cluster.vmware.com/v1alpha3/tanzu-ns-01/simple/simple-control-plane-hfrm7-7twzk

0s Warning ReconcileFailure wcpmachine/simple-control-plane-hfrm7-7twzk vm is not yet created: vmware-system-capw-controller-manager/WCPMachine/infrastructure.cluster.vmware.com/v1alpha3/tanzu-ns-01/simple/simple-control-plane-hfrm7-7twzk

0s Warning ReconcileFailure wcpmachine/simple-control-plane-hfrm7-7twzk vm is not yet created: vmware-system-capw-controller-manager/WCPMachine/infrastructure.cluster.vmware.com/v1alpha3/tanzu-ns-01/simple/simple-control-plane-hfrm7-7twzk

0s Warning ReconcileFailure wcpmachine/simple-control-plane-hfrm7-7twzk vm is not yet created: vmware-system-capw-controller-manager/WCPMachine/infrastructure.cluster.vmware.com/v1alpha3/tanzu-ns-01/simple/simple-control-plane-hfrm7-7twzk

0s Warning ReconcileFailure wcpmachine/simple-control-plane-hfrm7-7twzk vm is not yet created: vmware-system-capw-controller-manager/WCPMachine/infrastructure.cluster.vmware.com/v1alpha3/tanzu-ns-01/simple/simple-control-plane-hfrm7-7twzk

0s Warning ReconcileFailure wcpmachine/simple-control-plane-hfrm7-7twzk vm is not yet created: vmware-system-capw-controller-manager/WCPMachine/infrastructure.cluster.vmware.com/v1alpha3/tanzu-ns-01/simple/simple-control-plane-hfrm7-7twzk

1s Warning ReconcileFailure wcpmachine/simple-control-plane-hfrm7-7twzk vm is not yet created: vmware-system-capw-controller-manager/WCPMachine/infrastructure.cluster.vmware.com/v1alpha3/tanzu-ns-01/simple/simple-control-plane-hfrm7-7twzk

0s Warning ReconcileFailure wcpmachine/simple-control-plane-hfrm7-7twzk vm is not yet created: vmware-system-capw-controller-manager/WCPMachine/infrastructure.cluster.vmware.com/v1alpha3/tanzu-ns-01/simple/simple-control-plane-hfrm7-7twzk

0s Warning ReconcileFailure wcpmachine/simple-control-plane-hfrm7-7twzk vm is not yet created: vmware-system-capw-controller-manager/WCPMachine/infrastructure.cluster.vmware.com/v1alpha3/tanzu-ns-01/simple/simple-control-plane-hfrm7-7twzk

0s Warning ReconcileFailure wcpmachine/simple-control-plane-hfrm7-7twzk vm is not yet created: vmware-system-capw-controller-manager/WCPMachine/infrastructure.cluster.vmware.com/v1alpha3/tanzu-ns-01/simple/simple-control-plane-hfrm7-7twzk

0s Warning ReconcileFailure wcpmachine/simple-control-plane-hfrm7-7twzk vm is not yet created: vmware-system-capw-controller-manager/WCPMachine/infrastructure.cluster.vmware.com/v1alpha3/tanzu-ns-01/simple/simple-control-plane-hfrm7-7twzk

0s Warning ReconcileFailure wcpmachine/simple-control-plane-hfrm7-7twzk vm is not yet created: vmware-system-capw-controller-manager/WCPMachine/infrastructure.cluster.vmware.com/v1alpha3/tanzu-ns-01/simple/simple-control-plane-hfrm7-7twzk

0s Warning ReconcileFailure wcpmachine/simple-control-plane-hfrm7-7twzk vm is not yet created: vmware-system-capw-controller-manager/WCPMachine/infrastructure.cluster.vmware.com/v1alpha3/tanzu-ns-01/simple/simple-control-plane-hfrm7-7twzk

0s Warning ReconcileFailure wcpmachine/simple-control-plane-hfrm7-7twzk vm is not yet created: vmware-system-capw-controller-manager/WCPMachine/infrastructure.cluster.vmware.com/v1alpha3/tanzu-ns-01/simple/simple-control-plane-hfrm7-7twzk

0s Warning ReconcileFailure wcpmachine/simple-control-plane-hfrm7-7twzk vm does not have an IP address: vmware-system-capw-controller-manager/WCPMachine/infrastructure.cluster.vmware.com/v1alpha3/tanzu-ns-01/simple/simple-control-plane-hfrm7-7twzk

0s Warning ReconcileFailure wcpmachine/simple-control-plane-hfrm7-7twzk vm does not have an IP address: vmware-system-capw-controller-manager/WCPMachine/infrastructure.cluster.vmware.com/v1alpha3/tanzu-ns-01/simple/simple-control-plane-hfrm7-7twzk

0s Warning ReconcileFailure wcpmachine/simple-control-plane-hfrm7-7twzk vm does not have an IP address: vmware-system-capw-controller-manager/WCPMachine/infrastructure.cluster.vmware.com/v1alpha3/tanzu-ns-01/simple/simple-control-plane-hfrm7-7twzk

0s Warning ReconcileFailure wcpmachine/simple-control-plane-hfrm7-7twzk vm does not have an IP address: vmware-system-capw-controller-manager/WCPMachine/infrastructure.cluster.vmware.com/v1alpha3/tanzu-ns-01/simple/simple-control-plane-hfrm7-7twzk

0s Normal Reconcile gateway/simple-control-plane-service Success

0s Normal Reconcile gateway/simple-control-plane-service Success

0s Normal Reconcile gateway/simple-control-plane-service Success

0s Normal Reconcile gateway/simple-control-plane-service Success

1s Normal Reconcile gateway/simple-control-plane-service Success

Cluster API Status:

API Endpoints:

Host: 172.16.97.194

Port: 6443

Phase: Provisioned

Node Status:

simple-control-plane-rb7r6: pending

simple-workers-kszj2-d4c6b6f49-5z5zg: pending

simple-workers-kszj2-d4c6b6f49-795l5: pending

simple-workers-kszj2-d4c6b6f49-m6sg9: pending

Phase: creating

Vm Status:

simple-control-plane-rb7r6: ready

simple-workers-kszj2-d4c6b6f49-5z5zg: pending

simple-workers-kszj2-d4c6b6f49-795l5: pending

simple-workers-kszj2-d4c6b6f49-m6sg9: pending

Any ideas?

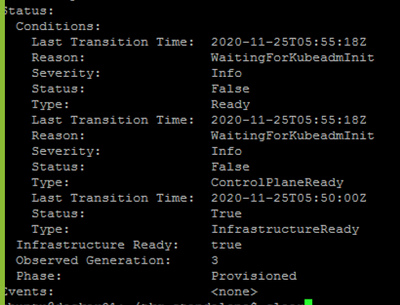

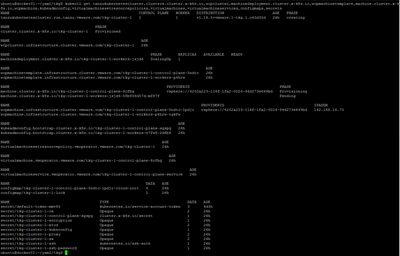

Hi, I am using vSphere with Tanzu with HAProxy and I am stuck during TKG cluster deployment. kubectl describe kubeadmcontrolplanes.controlplane.cluster.x-k8s.io tkg-show-01-control-plane shows WaitingForKubeadmInit. Anyone? Here is the configuration:

HAProxy configuration

Frontend CIDR setting in HAProxy

Here is the Workload Management part

This is the state after I initiated TKG guest cluster creation:

kubectl describe kubeadmcontrolplanes.controlplane.cluster.x-k8s.io tkg-cluster-1-control-plane

kubectl get tanzukubernetescluster,clusters.cluster.x-k8s.io,wcpcluster,machinedeployment.cluster.x-k8s.io,wcpmachinetemplate,machine.cluster.x-k8s.io,wcpmachine,kubeadmconfig,virtualmachinesetresourcepolicies,virtualmachines,virtualmachineservices,configmaps,secrets

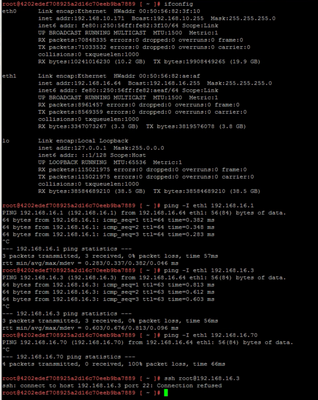

From the Supervisor VM I can ping the Workload Network gateway and the HAProxy Workload IP, but I cannot ping the TKG cluster IP on the Workload Network.

SSH to the HAProxy IP on the Workload Network is refused.

From the TKG control plane VM I can ping the Workload Network gateway, but I cannot ping the HAProxy Workload IP or the Supervisor IP on the Workload Network.

SSH to the HAProxy IP on the Workload Network does not respond at all.

From the TKG control plane VM on the Workload Network I can ping and SSH to the Supervisor VM IP on the Management Network. I can also ping the Supervisor Cluster IP on the Frontend Network.

I can also ping the HAProxy IP on the Management Network and the HAProxy IP on the Frontend Network.

Hi Ferdis,

Did you find what the underlying issue is? I'm having exactly the same issue.

Hi Gabor, using tcpdump on the Supervisor control plane VM, we found that the ping/TCP request goes from the HAProxy IP on the Workload Network (192.168.16.3 / 00:50:56:82:aa:51) to the Supervisor control plane VM IP on the Workload Network (192.168.16.64 / 00:50:56:82:ae:af), but the Supervisor control plane VM sends its reply to HAProxy's gateway IP 192.168.10.1 (MAC address 7c:5a:1c:83:27:aa). So the communication is asymmetric.

I have also redeployed both HAProxy and the Supervisor Cluster using two networks instead of three. I used the same management network and a fresh new VLAN for the Workload Network. The result was exactly the same as with three networks.
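To illustrate how a reply can end up at a gateway instead of going straight back on the local segment, here is a minimal Python sketch of longest-prefix route selection. The route tables and the /24 prefix length are assumptions made for illustration only; the thread does not show the actual routing table of the Supervisor control plane VM, and `next_hop` is a hypothetical helper, not part of any VMware tooling.

```python
import ipaddress


def next_hop(dest, routes):
    """Pick the route with the longest matching prefix for dest.

    routes is a list of (cidr, gateway) pairs; gateway is None for an
    on-link (directly connected) network. Returns the chosen gateway,
    or None when the destination is reached directly.
    """
    dest_ip = ipaddress.ip_address(dest)
    best = None
    for cidr, gateway in routes:
        network = ipaddress.ip_network(cidr)
        if dest_ip in network:
            if best is None or network.prefixlen > best[0].prefixlen:
                best = (network, gateway)
    if best is None:
        raise LookupError(f"no route to {dest}")
    return best[1]


if __name__ == "__main__":
    haproxy_workload_ip = "192.168.16.3"

    # Assumed table 1: only a default route via 192.168.10.1.
    default_only = [("0.0.0.0/0", "192.168.10.1")]
    # Assumed table 2: same default route plus an on-link workload network.
    with_connected = default_only + [("192.168.16.0/24", None)]

    print("default only   ->", next_hop(haproxy_workload_ip, default_only))
    print("with connected ->", next_hop(haproxy_workload_ip, with_connected))
```

If the reply is routed as in the first table, it leaves via the gateway while the request arrived directly, which matches the asymmetric pattern seen in the capture. Comparing this against the real output of `ip route` on the VM would show whether that is what happens here.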

Hi Ferdis,

Thanks for sharing that with me.

I have tried both HAProxy v0.1.9 and HAProxy v0.1.10 but the issue wasn't resolved.

It looks like the asymmetric routing issue still exists with HAProxy 0.1.10, and I suspect it is not necessarily only HAProxy's fault. VMware will probably have to fix the communication from the control plane VM so that it works as required.

https://techie.cloud/blog/2020/11/26/issues-encountered-when-setting-up-vmware-tanzu/

I came across this article, which describes how to enable asymmetric routing on pfSense. However, I'm not using pfSense and this is not really a lab, so I suspect I have to wait for further releases from VMware.

Please let me know if you manage to get any further.

In the meantime, I will set up a small nested lab on a spare host to test this in an isolated environment, potentially with pfSense.

I too ran into problems with 7.0U1 and haproxy-v0.1.8.

I'm using William Lam's nested script to deploy vSphere with Tanzu basic with HAProxy. I've got an N5K core with directly connected VLANs, no router as such.

I then switched to 7.0U3 and haproxy-v0.2.0 and had things up and running.

Hi Mikey, thanks for the update. Good to know 🙂 By the way, here is an interesting statement regarding HAProxy in the article vSphere With Tanzu - NSX Advanced Load Balancer Essentials by Michael West:

“HA Proxy while advantageous for PoC and Lab environments has some limitations for production deployments. NSX Advanced Load Balancer Essentials in vSphere with Tanzu provides a production ready load balancer. Currently, you can choose to deploy the HAProxy appliance or a fully supported solution using the NSX Advanced Load Balancer Essentials.”

Link: https://core.vmware.com/blog/vsphere-tanzu-nsx-advanced-load-balancer-essentials

Did you manage to run any Pods inside the TKC? I have problems deploying nginx, for example, and I don't know whether it is a DNS or internet access issue.

# deploy nginx for testing

kubectl run nginx --image=nginx

Error:

message: 'rpc error: code = Unknown desc = failed to pull and unpack image

"docker.io/library/nginx:latest": failed to resolve reference "docker.io/library/nginx:latest":

failed to do request: Head https://registry-1.docker.io/v2/library/nginx/manifests/latest:

dial tcp 54.161.109.204:443: i/o timeout'

reason: ErrImagePull
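The `i/o timeout` on `dial tcp ...:443` suggests that name resolution worked but the TCP connection to the registry could not be established. A minimal Python sketch for checking outbound TCP reachability is shown below. It assumes Python 3 is available on a machine attached to the same network as the cluster nodes, which the thread does not confirm; `can_connect` is a hypothetical helper name.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections and timeouts.
        return False


if __name__ == "__main__":
    # Registry host taken from the error message above.
    target = ("registry-1.docker.io", 443)
    status = "reachable" if can_connect(*target) else "NOT reachable"
    print(f"{target[0]}:{target[1]} is {status}")
```

If this also fails from the workload network but works from the management network, the problem is more likely routing, NAT, or firewalling on the workload VLAN than DNS.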

Hey @ferdis, yeah, there are a couple of limitations with HAProxy: no vSphere Pods, and I think no Harbor registry either. I'm working through William's Tanzu deployment with NSX-T now. Deployment 1 already has issues - feels a bit like Groundhog Day!

Hey @kastrosmartis - yep, I had no issues after shifting to 7.0U3. I haven't tried nginx, but you could try #1 below. It comes up pretty quickly.

1. "hipster shop" - kubectl apply -f https://raw.githubusercontent.com/dstamen/Kubernetes/master/demo-applications/demo-hipstershop.yaml

2. Portworx for my persistent storage requirements

3. and then the "hipster shop" with PVC using a new storage class pointing back to Portworx - kubectl apply -f https://raw.githubusercontent.com/dstamen/Kubernetes/master/demo-applications/demo-hipster-pvc.yaml

Hi

I tried your suggestion (with HAproxy) and got errors indicating that NSX-T should be used???

Any ideas?

PS C:\Install> kubectl apply -f https://raw.githubusercontent.com/dstamen/Kubernetes/master/demo-applications/demo-hipstershop.yaml

service/emailservice created

service/checkoutservice created

service/recommendationservice created

service/frontend created

service/frontend-external created

service/paymentservice created

service/productcatalogservice created

service/cartservice created

service/currencyservice created

service/shippingservice created

service/redis-cart created

service/adservice created

Error from server (workload management cluster uses vSphere Networking, which does not support action on kind Deployment): error when creating "https://raw.githubusercontent.com/dstamen/Kubernetes/master/demo-applications/demo-hipstershop.yaml": admission webhook "default.validating.license.supervisor.vmware.com" denied the request: workload management cluster uses vSphere Networking, which does not support action on kind Deployment

@kastrosmartis I'm just starting out with Tanzu, so I'm no expert, but I'm wondering if you are trying to deploy the "hipster shop" in the Supervisor Cluster. In that case it would be a vSphere Pod, and this type of deployment requires NSX-T, which would explain your error.

When using HAProxy you need to first deploy a TKG Guest Cluster, log in to that, and then deploy your app.

Here is the process I followed

1. Created a new Namespace called tkgs-ns-01 in the vSphere UI

2. Log in to the namespace and switch to the context

        kubectl vsphere logout
        kubectl vsphere login --server=10.226.242.153 -u administrator@vsphere.local --insecure-skip-tls-verify --tanzu-kubernetes-cluster-namespace tkgs-ns-01
        kubectl config use-context tkgs-ns-01

3. Deploy my TKG Guest Cluster called px-demo-cluster to namespace tkgs-ns-01

        kubectl apply -f .\tkc_px.yml

        PS E:\tanzu> cat .\tkc_px.yml
        apiVersion: run.tanzu.vmware.com/v1alpha1
        kind: TanzuKubernetesCluster
        metadata:
          name: px-demo-cluster
          namespace: tkgs-ns-01
        spec:
          distribution:
            version: 1.21.2+vmware.1-tkg.1.ee25d55
          topology:
            controlPlane:
              count: 1
              class: guaranteed-medium
              storageClass: tanzu-gold-storage-policy
            workers:
              count: 3
              class: best-effort-large
              storageClass: tanzu-gold-storage-policy
          settings: #all spec.settings are optional
            storage: #optional storage settings
              defaultClass: tanzu-gold-storage-policy
            network: #optional network settings
              cni: #override default cni set in the tkgserviceconfiguration spec
                name: antrea
              pods: #custom pod network
                cidrBlocks: [100.96.0.0/11]
              services: #custom service network
                cidrBlocks: [100.64.0.0/13]

4. Monitor the deployment

        kubectl get tkc -w
        kubectl get machines

5. Once complete, log in to the TKG Guest Cluster and switch to the TKG Guest Cluster context

        kubectl vsphere logout
        kubectl vsphere login --server=10.226.242.153 -u administrator@vsphere.local --insecure-skip-tls-verify --tanzu-kubernetes-cluster-namespace tkgs-ns-01 --tanzu-kubernetes-cluster-name px-demo-cluster
        kubectl config use-context px-demo-cluster

6. Deploy your app

        kubectl apply -f https://raw.githubusercontent.com/dstamen/Kubernetes/master/demo-applications/demo-hipstershop.yaml

Hope that helps
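When setting custom pod and service CIDR blocks as in the cluster spec above, it can be worth confirming that the ranges do not overlap each other (or any other network you care about). The following is a small Python sketch using the standard `ipaddress` module. The function name `find_overlaps` and the dictionary labels are made up for illustration; only the two CIDR values come from the manifest.

```python
import ipaddress
from itertools import combinations


def find_overlaps(cidrs):
    """Return a list of (name_a, name_b) pairs whose networks overlap.

    cidrs maps a descriptive label to a CIDR string.
    """
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in cidrs.items()}
    return [
        (name_a, name_b)
        for (name_a, net_a), (name_b, net_b) in combinations(networks.items(), 2)
        if net_a.overlaps(net_b)
    ]


if __name__ == "__main__":
    # Values from the TanzuKubernetesCluster spec above.
    cluster_cidrs = {
        "pods": "100.96.0.0/11",
        "services": "100.64.0.0/13",
    }
    overlaps = find_overlaps(cluster_cidrs)
    print("overlapping ranges:", overlaps if overlaps else "none")
```

You could add your Workload, Frontend, and Management network CIDRs to the dictionary to check them in the same pass.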

@vMikeyC You are correct, I forgot to log out and log in to the new TKG cluster, hence the error about the NSX-T requirement.

In the meantime I replaced HAProxy with AVI, and it's working OK.

Can you explain some more about Step 6, the deployment of the application? It seems that the deployment was successful, but I don't know how to test or check it.

I managed to deploy a test Pod and got it working:

P.S. I am not a developer 🙂

Thanks

@kastrosmartis - glad you got it deployed! I believe this deployment is an older version of this:

https://github.com/GoogleCloudPlatform/microservices-demo

It is a multi-tier microservices application forming a web-based e-commerce app.

Once the microservices app is deployed, you'll have a web app front end that you can browse to, view items, add them to the cart, and purchase them.

You can use the following to check the status of your deployment - kubectl get pods

And this one to get the External IP of the hipster shop, and then browse to that IP - kubectl get svc

or, if you are running kubectl from Linux:

kubectl get service frontend-external | awk '{print $4}'

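This one works from PowerShell:

    # Capture the table output; PowerShell returns one string per line
    $fe = kubectl get service frontend-external

    foreach ($line in $fe) {
        if ($line -like "frontend-external*") {
            # Split on runs of whitespace; the 4th column is EXTERNAL-IP
            ($line -split '\s+')[3]
        }
    }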
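Parsing the table output by column position is a bit fragile. As an alternative, here is a Python sketch that reads the JSON form of the Service (`kubectl get service frontend-external -o json`) and pulls the address out of `status.loadBalancer.ingress`. The function name `external_ip` and the script name in the usage line are assumptions for illustration. It returns `None` while the load balancer has not assigned an address yet, which corresponds to the "does not have any Ingresses" message seen earlier in this thread.

```python
import json
import sys


def external_ip(service_json):
    """Return the first load balancer IP or hostname of a Service, or None."""
    service = json.loads(service_json)
    ingress = (
        service.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    )
    for entry in ingress:
        address = entry.get("ip") or entry.get("hostname")
        if address:
            return address
    return None


if __name__ == "__main__":
    # Expects the JSON document of a single Service on standard input.
    address = external_ip(sys.stdin.read())
    print(address if address else "no external IP assigned yet")
```

Example usage, assuming the script is saved as external_ip.py:

kubectl get service frontend-external -o json | python external_ip.py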

I had problems with security; all pods had errors on creation:

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: psp:privileged
    rules:
    - apiGroups: ['policy']
      resources: ['podsecuritypolicies']
      verbs: ['use']
      resourceNames:
      - vmware-system-privileged
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: all:psp:privileged
    roleRef:
      kind: ClusterRole
      name: psp:privileged
      apiGroup: rbac.authorization.k8s.io
    subjects:
    - kind: Group
      name: system:serviceaccounts
      apiGroup: rbac.authorization.k8s.io