vSAN cluster : Slack space for 3-Node - need help

Hello All,

I am new to vSAN and I'm confused about slack space. As far as I know, VMware recommends 25-30% slack space, but when I check some of the clusters at work I see different slack space percentages. Here is an example from one of the clusters:

vSAN Cluster A:

There are 16 VMs in total, and their storage is 4,556.0 GB (let's say 5 TB). The policy is RAID-1 with FTT=1, so the storage consumed will be 5 TB × 2 = 10 TB.
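The doubling above follows from how RAID-1 mirroring works: every object is stored as FTT + 1 full copies. A minimal sketch of that arithmetic, assuming witness components can be ignored because they hold only small amounts of metadata:

```python
# Sketch: raw vSAN capacity consumed by RAID-1 mirroring.
# Assumption: witness components are ignored (they hold only metadata
# and are small compared with the data replicas).

def raw_capacity_gb(vm_data_gb: float, ftt: int) -> float:
    """RAID-1 keeps FTT + 1 full copies of every object."""
    copies = ftt + 1
    return vm_data_gb * copies


vm_data_gb = 4556.0  # figure quoted for Cluster A
raw = raw_capacity_gb(vm_data_gb, ftt=1)
print(f"{raw:,.1f} GB raw")  # prints "9,112.0 GB raw"
```

With the exact figure this comes to about 9.1 TB; the "10 TB" above is the rounded-up estimate.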

Checking vROps, I can see the slack space is 1,77.002 GB, which is less than 20%. How does slack space work?

Why isn't it 25-30%?

Could someone give me a full explanation?

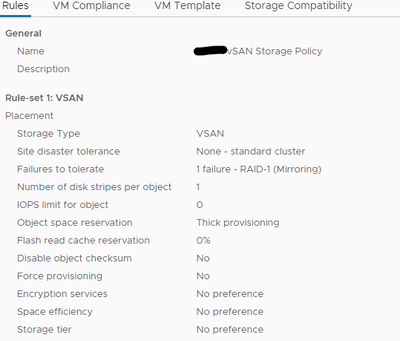

Storage Policy

vSAN cluster

Looking forward to hearing from you.

Thanks

@VMbkota, how much slack space is advised or required in a cluster depends on a lot of factors.

Some main points that you should consider:

- The capabilities and/or limitations of your cluster layout.

- In what failure scenarios you need (or want) the data to be able to heal back to a redundant state, and how many times and within what timespans.

- The current and expected future storage footprint (accounting for any temporary or transient usage).

This is a 3-node cluster. If a node fails, nothing stored as FTT=1 will be repaired back to a redundant state, so setting aside space to rebuild after a node failure won't help. This is why an N+1 configuration, e.g. a 4-node (or larger) cluster, is preferable. If you want the cluster to be able to repair all data after a disk or Disk-Group failure, then how much space you should leave unused depends on the size of the disks, the Disk-Group configuration, and the size and placement of the largest objects in the cluster.
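Both failure cases can be sketched as simple checks. This is a simplified model, not how vSAN itself decides placement: it assumes one host per fault domain, RAID-1 only, and ignores component sizes, packing, and vSAN's own capacity thresholds. The function names and the disk-group figures are hypothetical. The disk-group check reflects the 3-node, FTT=1 case, where the other two hosts already hold a replica or witness of each affected object, so lost components have to be rebuilt on the same host's remaining Disk-Groups.

```python
# Sketch: can a RAID-1 vSAN cluster heal itself after a failure?
# Assumptions: one host = one fault domain, RAID-1 mirroring only, and a
# simplified capacity model (no component sizes/packing, no vSAN thresholds).

def min_hosts_raid1(ftt: int) -> int:
    """RAID-1 needs 2*FTT+1 hosts (FTT+1 replicas plus witnesses for quorum)."""
    return 2 * ftt + 1


def can_rebuild_after_host_failure(hosts: int, ftt: int) -> bool:
    """After losing one host, enough hosts must remain to place a full layout."""
    return hosts - 1 >= min_hosts_raid1(ftt)


def disk_group_rebuild_fits(
    dg_used_gb: list[float], dg_capacity_gb: list[float], failed: int
) -> bool:
    """3-node, FTT=1 case: data from the failed disk group must fit in the
    free space of the SAME host's remaining disk groups."""
    needed = dg_used_gb[failed]
    free = sum(
        cap - used
        for i, (used, cap) in enumerate(zip(dg_used_gb, dg_capacity_gb))
        if i != failed
    )
    return needed <= free


print(can_rebuild_after_host_failure(hosts=3, ftt=1))  # False
print(can_rebuild_after_host_failure(hosts=4, ftt=1))  # True
# Hypothetical host with two 2,000 GB disk groups:
print(disk_group_rebuild_fits([1200.0, 900.0], [2000.0, 2000.0], failed=0))  # False
print(disk_group_rebuild_fits([600.0, 500.0], [2000.0, 2000.0], failed=0))   # True
```

In the first disk-group example the failed group holds 1,200 GB but only 1,100 GB is free on the surviving group, so the rebuild would not complete.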

All your data (in the cluster in the screenshots, anyway) is stored with a 100% thick-provisioned storage policy. If you don't expect to fill, expand, or add any more vmdks beyond the current ones, then space has already been reserved for them at their full size. With thin provisioning and the current data, this cluster would be at ~25% storage used.
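The difference between thick and thin comes down to when capacity is claimed. A minimal sketch, assuming the "100% thick" policy corresponds to an Object Space Reservation (OSR) of 100%; the function names and the 1 TB vmdk below are hypothetical:

```python
# Sketch: up-front reservation vs. thin consumption for RAID-1 objects.
# Assumption: the "100% thick" policy corresponds to OSR = 100%;
# the numbers below are hypothetical.

def reserved_gb(provisioned_gb: float, ftt: int, osr_percent: float) -> float:
    """Capacity reserved when the object is created (all FTT+1 copies)."""
    return provisioned_gb * (ftt + 1) * osr_percent / 100


def thin_consumed_gb(written_gb: float, ftt: int) -> float:
    """Capacity consumed by a thin object: only the data actually written."""
    return written_gb * (ftt + 1)


provisioned, written = 1000.0, 400.0  # hypothetical 1 TB vmdk, 40% written
print(reserved_gb(provisioned, ftt=1, osr_percent=100))  # 2000.0
print(thin_consumed_gb(written, ftt=1))                  # 800.0
```

With a full reservation, the datastore already counts each vmdk at its full mirrored size, which is why growth of existing disks needs no extra slack.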

3-node clusters have few alternative storage policies they can switch to, so you don't really need to reserve slack space for policy changes that require deep reconfiguration of objects (other than changing Stripe-Width).

You have a 19.65 TB vsanDatastore there with 6.23 TB free, which is ~31.7% free, so I'm not sure where vROps is getting '1,77.002 GB' from.
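The free-space figure can be checked directly against the 25-30% guideline from the question. A small sketch using the datastore figures read off the screenshot (TB as displayed there); `free_percent` is a hypothetical helper name:

```python
# Sketch: free-space percentage of the vsanDatastore, compared with the
# 25-30% slack guideline quoted in the question.

def free_percent(capacity_tb: float, free_tb: float) -> float:
    """Share of the datastore that is currently unused, in percent."""
    return 100 * free_tb / capacity_tb


capacity_tb, free_tb = 19.65, 6.23
pct = free_percent(capacity_tb, free_tb)
print(f"{pct:.1f}% free, {capacity_tb - free_tb:.2f} TB used")  # 31.7% free, 13.42 TB used
print("meets 25% guideline:", pct >= 25)                        # meets 25% guideline: True
```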