
VM Write Latency

Hello,

I am seeing a lot of "VM Write Latency" on a new vSAN infrastructure:

- Version 7.0

- All-flash

- 7 hosts (Dell PowerEdge R740xd)

- Deduplication and compression are off

At the moment there is no other traffic on the infrastructure.

I use this command as a benchmark:

[root@a ]$ dd if=/dev/zero of=/test_io/test-24Go bs=1G count=24 iflag=fullblock oflag=direct;

24+0 records in

24+0 records out

25769803776 bytes (26 GB, 24 GiB) copied, 45.7959 s, 563 MB/s

The VM where I ran the command is configured like this:

- CentOS 8

- 2 CPUs

- 2 GB RAM

- NVMe controller

- RAID 1

The graphs:

On the backend:

Host network:

If I configure the VM with RAID 0, I have no latency issue and it is faster:

[root@a ]$ dd if=/dev/zero of=/test_io/test-24Go bs=1G count=45 iflag=fullblock oflag=direct

45+0 records in

45+0 records out

48318382080 bytes (48 GB, 45 GiB) copied, 63.9457 s, 756 MB/s

Any ideas?
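For reference, the two dd results above can be put side by side with a short calculation. This is only a sketch: the byte counts and durations are copied from the dd output in this post, the dictionary keys and function name are illustrative, and it assumes dd reports decimal megabytes (1 MB = 1,000,000 bytes).

```python
# Byte counts and elapsed times copied from the two dd runs in this post.
runs = {
    "RAID 1": {"bytes": 25_769_803_776, "seconds": 45.7959},
    "RAID 0": {"bytes": 48_318_382_080, "seconds": 63.9457},
}


def throughput_mb_s(nbytes, seconds):
    """Throughput in decimal megabytes per second (assumed dd unit)."""
    return nbytes / seconds / 1_000_000


# Recompute the rate for each run from the raw figures.
rates = {name: throughput_mb_s(r["bytes"], r["seconds"]) for name, r in runs.items()}
for name, rate in rates.items():
    print(f"{name}: {rate:.0f} MB/s")

# Relative difference between the two storage policies in this single test.
speedup = rates["RAID 0"] / rates["RAID 1"]
print(f"RAID 0 / RAID 1 throughput ratio: {speedup:.2f}")
```

The ratio describes only this one sequential write test with a 1 GiB block size, not the cluster in general.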


What about the network configuration?


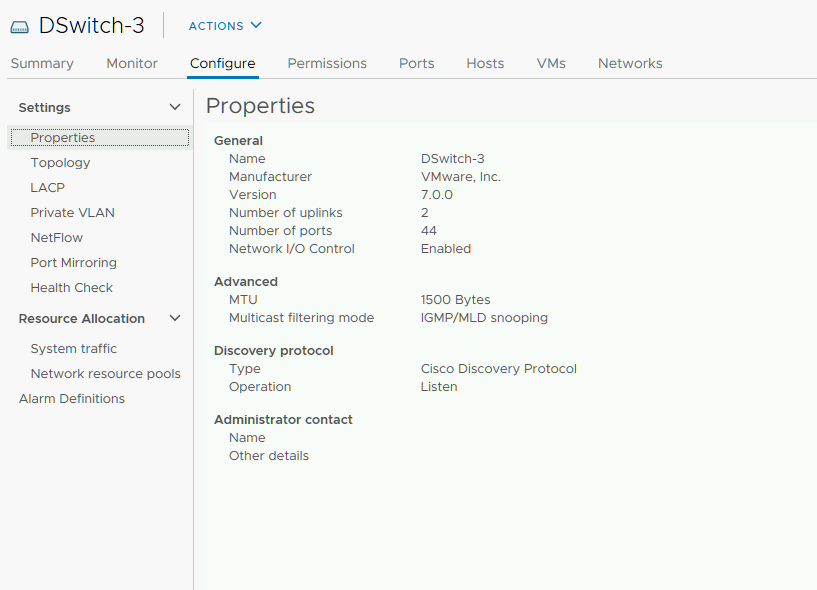

Everything is in 10G with LACP and Jumbo frame.

VM-ADMIN and vMotion distributed switch :

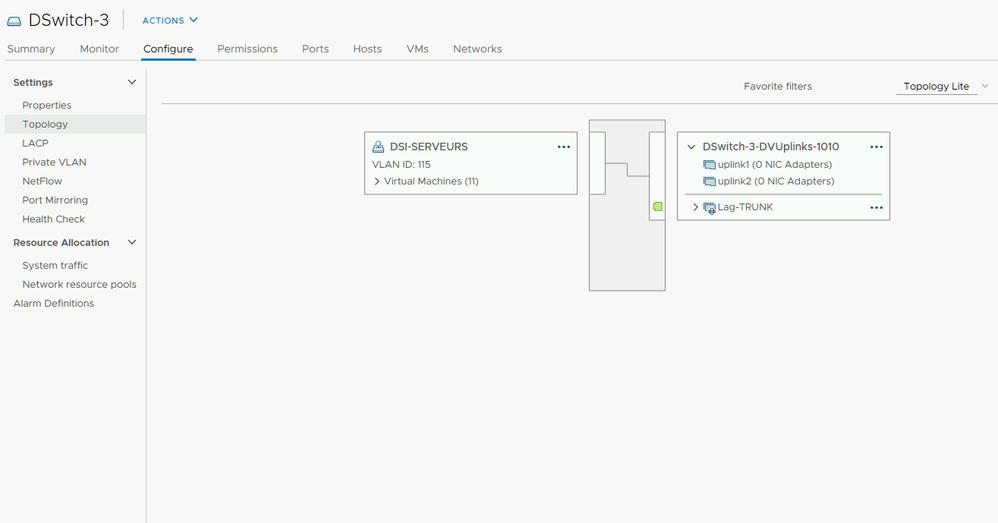

vSAN Distributed Switch :

VM (Trunk) distributed switch (no jumbo Frames) :


Hi enclume. I have some questions:

- Did you try changing the storage policy to RAID 1 with additional disk stripes to see if performance improves?

- Are VMware Tools installed and running?

- Did you separate vSAN, vMotion and MGMT traffic? What about NIOC and priorities?

- Have you checked the controllers' firmware and driver versions? This is very important in vSAN environments.

- Did you try with another OS, such as Windows?

- Can you run the vSAN proactive tests?

- Any warnings in vSAN Skyline Health?

- Can you disconnect one NIC and run with only one? That way you can be sure there is no problem with LACP.

- Did you run any HCIBench benchmarks in your environment? It is an awesome tool for measuring vSAN IOPS, throughput, latency, etc.

- From a switch perspective, do you notice any dropped packets or retransmissions? Some switches, such as Dell PowerConnect, need the MTU set to 9124.


lucasbernadsky:

- Did you try changing the storage policy to RAID 1 with additional disk stripes to see if performance improves?

See my first post: when I switch to RAID 0, I get better performance.

- Are VMware Tools installed and running?

Yes, VMware Tools is installed and running. It is not the package from VMware but the one from the CentOS repository (open-vm-tools-11.0.5-3.el8.x86_64); normally that is not a problem.

- Did you separate vSAN, vMotion and MGMT traffic? What about NIOC and priorities?

If you look at my last post, I have three switches to separate the traffic (vSAN, vMotion+MGMT, Trunk).

- Have you checked the controllers' firmware and driver versions? This is very important in vSAN environments.

Yes. Our PowerEdge R740xd servers use the "PERC H730P" with the latest firmware (25.5.7.0005). The servers are up to date too (BIOS, iDRAC, ...).

- Did you try with another OS, such as Windows?

Yes, but "dd for Windows" is very slow and does not give me a comparable benchmark. I tried CrystalDiskMark and the result is:

But no latency shows up on the VMware graphs.

- Can you run the vSAN proactive tests?

Yes, I can run proactive tests. For example, I ran the "VM Creation Test" and the "Network Performance Test" successfully.

- Any warnings in vSAN Skyline Health?

No, everything is green.

- Can you disconnect one NIC and run with only one? That way you can be sure there is no problem with LACP.

We ran many iperf3 tests and successfully validated LACP between the ESXi hosts. Also, when I ran the dd command, we saw about 200 MB/s of traffic on only one interface. That is well below 10 Gb/s and only one interface was in use, so we have ruled out an LACP issue.
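As a rough check of that statement, the observed traffic can be converted to the same unit as the link speed. This is a sketch only: it assumes decimal units (1 MB = 8,000,000 bits), treats 10 Gbit/s as the nominal rate of a single uplink, and the function name is illustrative.

```python
def mb_s_to_gbit_s(mb_per_s):
    """Convert decimal megabytes per second to gigabits per second."""
    return mb_per_s * 8 / 1000


observed_gbit_s = mb_s_to_gbit_s(200)  # traffic seen on the single busy interface
link_gbit_s = 10.0                     # nominal speed of one uplink (assumption)

# Fraction of the nominal link rate consumed during the dd run.
utilization = observed_gbit_s / link_gbit_s
print(f"{observed_gbit_s:.1f} Gbit/s, {utilization:.0%} of one 10 Gbit/s link")
```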

- Did you run any HCIBench benchmarks in your environment? It is an awesome tool for measuring vSAN IOPS, throughput, latency, etc.

I know this tool, but at the moment we cannot use it because we have an issue with the appliance.

- From a switch perspective, do you notice any dropped packets or retransmissions? Some switches, such as Dell PowerConnect, need the MTU set to 9124.

We use "Cisco Nexus9000 93180YC-EX" switches.

On the switches we see no errors.

On the VCSA we can see this:


With 83 IOPS your VM generates around 82 MB/s of throughput. An impressive 1 GB block size... and there it is in your "dd" command. Seeing 335 ms latency is no wonder with such an unrealistic block size, I think.
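The relationship between these three numbers can be sketched as follows. The figures (83 IOPS, 82 MB/s, 335 ms) are taken from this post; the calculation assumes they are averages over the same time window, and the variable names are illustrative. The last step applies Little's law (concurrency = arrival rate × time in system) to estimate how many I/Os are in flight on average.

```python
iops = 83                 # I/O operations per second, from the graph
throughput_mb_s = 82      # MB/s, from the graph
latency_s = 0.335         # 335 ms average write latency

# Average size of each I/O as counted by the performance graph.
avg_io_size_mb = throughput_mb_s / iops

# Little's law: average number of I/Os in flight = rate * latency.
outstanding_ios = iops * latency_s

print(f"average I/O size: {avg_io_size_mb:.2f} MB")
print(f"average outstanding I/Os: {outstanding_ios:.1f}")
```

Under these assumptions, each counted I/O is close to 1 MB, which is very large compared with typical application I/O sizes, and a few dozen of them are queued at any moment.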

I have a similar but much smaller setup:

- 2x R740 (older, with Intel DC P3600) and 2x R740 with SAS SSD WI

- HBA330 and not the PERC730/740*

- One disk group with only 4 capacity SSDs

With the standard policy, all your writes go to one buffer device and are then mirrored over the wire. Your reads come from a single SSD as long as you don't use "spanning" in your vSAN policy.

So I get the same numbers as you when choosing the 1 GiB block size. If I select only 16Mbit, I get values five times higher.

By the way, the H730/H740 is not Dell's preferred HBA for a vSAN setup. Consider using the HBA330 (a real pass-through HBA) next time (not to be confused with the H330!).

If I use the built-in vSAN performance benchmark, I can saturate my 10 Gbit network with 9,295 Mb/s.

Yes... being old school, I prefer Intel IOmeter because it is more granular and you can specify real workload numbers rather than something like 1G.

When you say RAID 0, do you mean a policy like FTT=0?

Regards,

Joerg


The problem here is indeed that you are using 'benchmarking' tools that are not really designed for benchmarking distributed object storage systems. If you want to figure out what your cluster can do, look at something like HCIBench instead. That will give you a better view.

In your case you are seeing a performance impact simply because half of the I/O needs to go across the network, and there is a cost to that. You are comparing it to RAID-0, which has no protection whatsoever.
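The cost of mirroring can be illustrated with a deliberately simplified model. It assumes that a RAID-1 policy with FTT=n keeps n+1 full copies of the data, so every guest write is written n+1 times on the backend. Witness components, metadata and checksums are ignored, and the function name is hypothetical. The guest throughput figures are the dd results from the first post.

```python
def backend_write_mb_s(guest_mb_s, ftt):
    """Backend write volume for a mirrored (RAID-1) policy in this simplified model.

    Assumption: FTT=n means n + 1 full replicas, each receiving every write.
    """
    replicas = ftt + 1
    return guest_mb_s * replicas


# Guest-visible dd throughput from the first post.
raid1_backend = backend_write_mb_s(563, ftt=1)  # mirrored policy
raid0_backend = backend_write_mb_s(756, ftt=0)  # no protection

print(f"FTT=1: {raid1_backend} MB/s written across replicas")
print(f"FTT=0: {raid0_backend} MB/s written to a single copy")
```

In this model the mirrored VM drives more total backend writes than the unprotected one even though the guest sees lower throughput, which is consistent with the point that protection has a cost.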