VMware Technology Network > Cloud & SDDC > vSAN > VMware vSAN Discussions


High Component Usage

Hello All,

I have a strange issue: I have two clusters (an 8-node and a 10-node), both with Cloud Director on top, and the component usage on them is extremely high.

There are only around 800 VMs on the cluster, and I can't understand how the component usage is spiralling out of control.

I use the default FTT1 policy.

---

Any ideas as to how this is happening?

Thanks!

---

@LordofVxRail You have ~77,000 components in this cluster, and these make up the 11,877 Objects you have in it. I am going to make an educated guess that you are storing these mostly as PFTT=1,SFTT=1,SFTM=RAID5 (i.e. RAID5 within each site, mirrored across sites); such a Policy uses a minimum of 9 components per Object.

If you are using such a storage policy, this is completely expected behaviour.
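To make the arithmetic behind that guess concrete, here is a minimal Python sketch of baseline component counts per object. The helpers `baseline_components` and `stretched_components` are hypothetical, and the counts assume stripe width 1, no component split at 255 GB, and the usual witness layout; real objects can carry more components than this.

```python
def baseline_components(ftt: int, ftm: str) -> int:
    """Baseline component count for one vSAN object (hypothetical helper).

    Assumes stripe width 1, an object small enough that no component is split
    at 255 GB, and the usual witness layout; real objects can have more.
    """
    if ftm == "RAID1":
        # ftt + 1 full replicas plus ftt witnesses, so the vote total is odd
        return (ftt + 1) + ftt
    if ftm == "RAID5" and ftt == 1:
        return 4  # 3 data + 1 parity
    if ftm == "RAID6" and ftt == 2:
        return 6  # 4 data + 2 parity
    raise ValueError(f"unsupported combination: FTT={ftt}, FTM={ftm}")


def stretched_components(sftt: int, sftm: str) -> int:
    """PFTT=1 stretched object: the local (SFTT) layout at each of the two
    data sites, plus one witness component on the witness site."""
    return 2 * baseline_components(sftt, sftm) + 1


for label, n in [
    ("FTT=1, RAID1", baseline_components(1, "RAID1")),
    ("FTT=2, RAID1", baseline_components(2, "RAID1")),
    ("FTT=1, RAID5", baseline_components(1, "RAID5")),
    ("FTT=2, RAID6", baseline_components(2, "RAID6")),
    ("PFTT=1, SFTT=1, RAID5", stretched_components(1, "RAID5")),
]:
    print(f"{label:<24} {n}")
```

Under these assumptions the loop prints 3, 5, 4, 6 and 9, the last matching the minimum of 9 quoted above.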

I would also advise you to redact hostnames more thoroughly: the way you have done it there, it is fairly trivial to work out their names.

---

Hey, thanks for the reply.

My storage policy is the default FTT1, nothing complex at all, which is why I find this so strange.

---

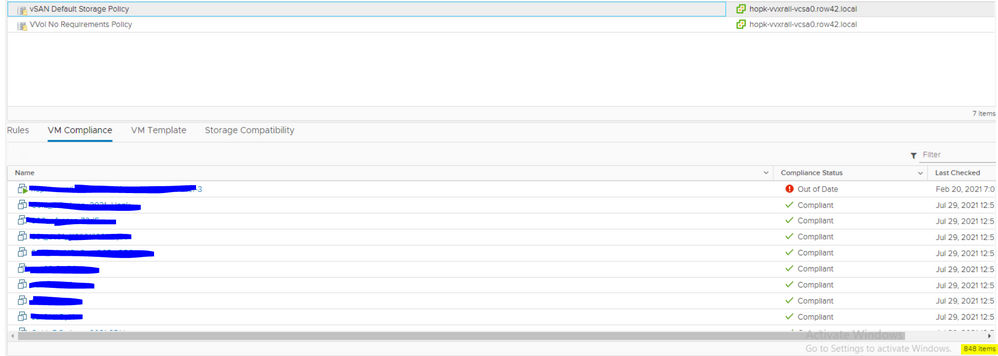

All VMs are compliant.

---

Can you please check the layout of some Objects from the CLI:

    # esxcli vsan debug object list --all > /tmp/objout123

Then it's just a case of looking at the output and the layout of the Objects to confirm whether they are stored as regular RAID1 (i.e. 3 components per Object).
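If the dump is large, counting components per object by hand is tedious. The Python sketch below tallies them into a histogram comparable to `vsan.obj_status_report`. It rests on an assumed text layout (an `Object UUID:` line per object and one `Component:` or `Witness:` line per component), and `components_per_object` / `component_histogram` are hypothetical names, so check the patterns against your own output first.

```python
import re
from collections import Counter

# ASSUMED layout of `esxcli vsan debug object list` text (verify against your
# own dump): each object begins with an "Object UUID: <uuid>" line, and each
# data or witness component is listed on a line starting "Component:" or
# "Witness:". Adjust the two patterns if your output differs.
OBJECT_RE = re.compile(r"^\s*Object UUID:\s*(\S+)")
COMPONENT_RE = re.compile(r"^\s*(?:Component|Witness):\s*\S+")


def components_per_object(lines):
    """Map each object UUID to the number of component/witness lines under it."""
    counts = {}
    current = None
    for line in lines:
        obj = OBJECT_RE.match(line)
        if obj:
            current = obj.group(1)
            counts[current] = 0
        elif current is not None and COMPONENT_RE.match(line):
            counts[current] += 1
    return counts


def component_histogram(counts):
    """Objects per component count, comparable to vsan.obj_status_report."""
    return Counter(counts.values())
```

Usage would be along the lines of `with open("/tmp/objout123") as f: print(component_histogram(components_per_object(f)))`; a result dominated by 3 would point to plain FTT=1 RAID1.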

---

Sure, I can check that. Here is vsan.obj_status_report:

    /localhost/DC02/computers> vsan.obj_status_report xxxxxxxxxxx
    2021-07-29 13:09:44 +0000: Querying all VMs on vSAN ...
    2021-07-29 13:09:49 +0000: Querying DOM_OBJECT in the system from xxxxxxxxx-01.xxxxxxxxx ...
    2021-07-29 13:09:49 +0000: Querying DOM_OBJECT in the system from xxxxxxxxx-02.xxxxxxxxx ...
    2021-07-29 13:09:49 +0000: Querying DOM_OBJECT in the system from xxxxxxxxx-04.xxxxxxxxx ...
    2021-07-29 13:09:50 +0000: Querying DOM_OBJECT in the system from xxxxxxxxx-10.xxxxxxxxx ...
    2021-07-29 13:09:50 +0000: Querying DOM_OBJECT in the system from xxxxxxxxx-06.xxxxxxxxx ...
    2021-07-29 13:09:50 +0000: Querying DOM_OBJECT in the system from xxxxxxxxx-09.xxxxxxxxx ...
    2021-07-29 13:09:50 +0000: Querying DOM_OBJECT in the system from xxxxxxxxx-08.xxxxxxxxx ...
    2021-07-29 13:09:52 +0000: Querying DOM_OBJECT in the system from xxxxxxxxx-03.xxxxxxxxx ...
    2021-07-29 13:09:52 +0000: Querying DOM_OBJECT in the system from xxxxxxxxx-05.xxxxxxxxx ...
    2021-07-29 13:09:52 +0000: Querying DOM_OBJECT in the system from xxxxxxxxx-07.xxxxxxxxx ...
    2021-07-29 13:09:53 +0000: Querying all disks in the system from xxxxxxxxx-01.xxxxxxxxx ...
    2021-07-29 13:09:54 +0000: Querying LSOM_OBJECT in the system from xxxxxxxxx-01.xxxxxxxxx ...
    2021-07-29 13:09:54 +0000: Querying LSOM_OBJECT in the system from xxxxxxxxx-02.xxxxxxxxx ...
    2021-07-29 13:09:54 +0000: Querying LSOM_OBJECT in the system from xxxxxxxxx-04.xxxxxxxxx ...
    2021-07-29 13:09:54 +0000: Querying LSOM_OBJECT in the system from xxxxxxxxx-10.xxxxxxxxx ...
    2021-07-29 13:09:54 +0000: Querying LSOM_OBJECT in the system from xxxxxxxxx-06.xxxxxxxxx ...
    2021-07-29 13:09:55 +0000: Querying LSOM_OBJECT in the system from xxxxxxxxx-09.xxxxxxxxx ...
    2021-07-29 13:09:55 +0000: Querying LSOM_OBJECT in the system from xxxxxxxxx-08.xxxxxxxxx ...
    2021-07-29 13:09:55 +0000: Querying LSOM_OBJECT in the system from xxxxxxxxx-03.xxxxxxxxx ...
    2021-07-29 13:09:56 +0000: Querying LSOM_OBJECT in the system from xxxxxxxxx-05.xxxxxxxxx ...
    2021-07-29 13:09:56 +0000: Querying LSOM_OBJECT in the system from xxxxxxxxx-07.xxxxxxxxx ...
    2021-07-29 13:09:57 +0000: Querying all object versions in the system ...
    2021-07-29 13:09:59 +0000: Got all the info, computing table ...

    Histogram of component health for non-orphaned objects
    +-------------------------------------+------------------------------+
    | Num Healthy Comps / Total Num Comps | Num objects with such status |
    +-------------------------------------+------------------------------+
    | 6/6 (OK)                            | 1543                         |
    | 5/5 (OK)                            | 8318                         |
    | 8/8 (OK)                            | 304                          |
    | 7/7 (OK)                            | 1297                         |
    | 4/4 (OK)                            | 113                          |
    | 3/3 (OK)                            | 297                          |
    | 12/12 (OK)                          | 2                            |
    | 75/75 (OK)                          | 1                            |
    | 36/36 (OK)                          | 2                            |
    +-------------------------------------+------------------------------+
    Total non-orphans: 11877

    Histogram of component health for possibly orphaned objects
    +-------------------------------------+------------------------------+
    | Num Healthy Comps / Total Num Comps | Num objects with such status |
    +-------------------------------------+------------------------------+
    +-------------------------------------+------------------------------+
    Total orphans: 0

    Total v10 objects: 11877
    /localhost/DC02/computers>
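Summing the non-orphaned histogram shows how many components these objects account for. A minimal Python sketch, with the rows copied from the output above (`HISTOGRAM` is just an illustrative name):

```python
# Rows of the non-orphaned histogram above:
# {components per object: number of objects with that many components}
HISTOGRAM = {6: 1543, 5: 8318, 8: 304, 7: 1297, 4: 113, 3: 297, 12: 2, 75: 1, 36: 2}

total_objects = sum(HISTOGRAM.values())
total_components = sum(comps * objs for comps, objs in HISTOGRAM.items())
# What the same objects would need if every one were plain FTT=1 RAID1
all_raid1_ftt1 = 3 * total_objects

print(f"objects:            {total_objects}")                          # 11877
print(f"components:         {total_components}")                       # 63873
print(f"avg per object:     {total_components / total_objects:.2f}")   # 5.38
print(f"if all FTT=1 RAID1: {all_raid1_ftt1}")                         # 35631
```

The histogram alone accounts for 63,873 components, about 5.4 per object and roughly 1.8 times the 35,631 that plain FTT=1 RAID1 would need. It does not reach the ~77,000 quoted earlier in the thread, and the thread does not say where that figure was read from.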

---

OK, so I guess this is not "RAID 1": most objects have more than 3 components...

---

This might be a better example: a 40.00 GB VM, "RAID 1", 5 components. I assume this is an indication of some issue with the vSAN policy?

---

Well, here is the issue, I guess:

    hostFailuresToTolerate: 2
    hostFailuresToTolerate: 2

    [root@zzzzz:~] grep "hostFailuresToTolerate: 2" /tmp/objout123|wc -l
    11426
    [root@zzzzz:~]
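The `grep | wc -l` above counts only one value. A small Python tally (the helper `ftt_tally` is hypothetical, and it assumes the dump's policy lines look like the ones shown) reports every hostFailuresToTolerate value at once, which makes any objects already at FTT=1 visible too.

```python
import re
from collections import Counter

# Matches policy lines such as "hostFailuresToTolerate: 2" in the object dump
FTT_RE = re.compile(r"hostFailuresToTolerate:\s*(\d+)")


def ftt_tally(lines):
    """Count policy lines per hostFailuresToTolerate value.

    Like `grep ... | wc -l`, this counts matching lines, which equals the
    number of objects only if each object's policy prints the key once.
    """
    tally = Counter()
    for line in lines:
        match = FTT_RE.search(line)
        if match:
            tally[int(match.group(1))] += 1
    return tally
```

Run against the saved dump with something like `with open("/tmp/objout123") as f: print(sorted(ftt_tally(f).items()))`.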

Even though VC shows FTT1 for the policy... oh well. I guess the best thing is to create a new FTT1 policy and apply it to all VMs?

---

If that's the Storage Policy you want applied to them, then yes.

Hold up: re-read your Storage Policy description. That is FTT=2, so change it to FTT=1 if that is what you intended.

---

Yep, that's what I'm hoping to achieve 😉

A bit of history on this cluster: I originally set FTT2 (over a year ago), then reverted to FTT1 about 6 months ago. As you can see from the VC screenshots, the SPBM looks OK, but at the lower level FTT is still 2... weird.

I'll go ahead and make a new FTT1 policy and apply it across the estate and cross my fingers & toes.

---

Thanks for all your suggestions so far; it's appreciated.

---

@LordofVxRail Just to clarify what seems to be misunderstood here: there are two aspects at play, FTM (Failure Tolerance Method), i.e. RAID1/RAID5/RAID6, and FTT (Failures To Tolerate). If you assign an FTT=2,FTM=RAID1 Storage Policy (as you have here), this basically says: store 3 replicas of the data, plus 2 Witness components for quorum, since an odd number of total components is needed where each component has a single vote. Thus the compliance view of the policy is indeed correct.
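The quorum arithmetic in that explanation can be sketched in Python. `raid1_vote_model` is a hypothetical helper that follows the one-vote-per-component simplification described above and assumes each failure takes out one component (components on separate hosts); actual vSAN vote assignment can differ.

```python
def raid1_vote_model(ftt: int) -> dict:
    """Simplified FTT=n RAID1 object where every component carries one vote.

    Mirrors the one-vote-per-component description above; real vSAN
    layouts can assign votes differently.
    """
    replicas = ftt + 1
    witnesses = ftt
    total_votes = replicas + witnesses          # 2 * ftt + 1, always odd
    quorum = total_votes // 2 + 1               # strictly more than half
    votes_left = total_votes - ftt              # each failure removes one component
    worst_case_replicas_left = replicas - ftt   # if every failure hits a replica
    return {
        "replicas": replicas,
        "witnesses": witnesses,
        "total_votes": total_votes,
        "quorum": quorum,
        # accessible needs a vote majority and at least one full replica
        "survives_ftt_failures": votes_left >= quorum and worst_case_replicas_left >= 1,
    }


print(raid1_vote_model(2))
# {'replicas': 3, 'witnesses': 2, 'total_votes': 5, 'quorum': 3, 'survives_ftt_failures': True}
```

With an even total, for example two replicas and no witness, losing one component leaves exactly half the votes rather than a majority, which is why the witnesses are added.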

From the output you shared though:

    +-------------------------------------+------------------------------+
    | Num Healthy Comps / Total Num Comps | Num objects with such status |
    +-------------------------------------+------------------------------+
    | 6/6 (OK)                            | 1543                         |
    | 5/5 (OK)                            | 8318                         |
    | 8/8 (OK)                            | 304                          |
    | 7/7 (OK)                            | 1297                         |
    | 4/4 (OK)                            | 113                          |
    | 3/3 (OK)                            | 297                          |
    | 12/12 (OK)                          | 2                            |
    | 75/75 (OK)                          | 1                            |
    | 36/36 (OK)                          | 2                            |
    +-------------------------------------+------------------------------+
    Total non-orphans: 11877

It looks like a number of different policies are actually applied: for example, a 6/6 Object might be FTT=2,FTM=RAID6 and a 5/5 Object FTT=2,FTM=RAID1, while anything with more components could simply be auto-striped (because components are split once they exceed 255GB) and thus use more components.
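A rough Python sketch of that reading of the histogram: map each component count to the baseline policy that would produce it, and flag everything else as likely striping or 255 GB splitting. `BASELINE_CANDIDATES`, `describe` and `chunks_per_replica` are hypothetical, and a count only suggests candidates, since different layouts can yield the same number.

```python
import math

# Baseline component counts (stripe width 1, no component split at 255 GB,
# usual witness layout) and the policies that commonly produce them. These are
# candidates only: other layouts can yield the same count.
BASELINE_CANDIDATES = {
    3: "FTT=1, RAID1",
    4: "FTT=1, RAID5",
    5: "FTT=2, RAID1",
    6: "FTT=2, RAID6",
    7: "FTT=3, RAID1",
}

COMPONENT_SPLIT_GB = 255  # components larger than this are split


def chunks_per_replica(size_gb: float) -> int:
    """Minimum pieces one RAID1 data replica is split into at 255 GB."""
    return max(1, math.ceil(size_gb / COMPONENT_SPLIT_GB))


def describe(component_count: int) -> str:
    return BASELINE_CANDIDATES.get(
        component_count, "beyond baseline: striping, >255 GB split, or another layout")


HISTOGRAM = {6: 1543, 5: 8318, 8: 304, 7: 1297, 4: 113, 3: 297, 12: 2, 75: 1, 36: 2}
for comps, objs in sorted(HISTOGRAM.items()):
    print(f"{comps:>3} comps x {objs:>5} objects -> {describe(comps)}")

print(chunks_per_replica(40), chunks_per_replica(600))  # 1 3
```

The split helper only counts data pieces per replica; how many witness components vSAN adds on top of a split or striped object is not modelled here.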