HP Proliant DL360 Gen9 + VMware ESXi, 6.5.0, 8294253 = Host hardware fan status error reading 0 RPM for empty fan bays

This issue does not show up on VMware ESXi, 6.5.0, 7967591 (U1). Once I upgraded to U2, the erroneous reading appeared.

iLO shows Fan 1 & 2 are NOT installed.

The host (and vCenter) shows them as installed with a 0 RPM reading.
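The mismatch between the two views can be made explicit by comparing them side by side. The Python sketch below is only an illustration: the readings are hand-entered, hypothetical values (nothing is pulled from iLO or the vSphere API), and the function name `find_phantom_fans` is made up for this example.

```python
from typing import Dict, List, Set


def find_phantom_fans(host_rpm: Dict[str, int], ilo_installed: Set[str]) -> List[str]:
    """Return fans the host reports at 0 RPM that iLO says are not installed."""
    return sorted(
        name
        for name, rpm in host_rpm.items()
        if rpm == 0 and name not in ilo_installed
    )


# Hypothetical data modeled on the symptom described above:
# the host lists Fan 1 and Fan 2 at 0 RPM, iLO does not list them at all.
host_rpm = {"Fan 1": 0, "Fan 2": 0, "Fan 3": 5400, "Fan 4": 5400}
ilo_installed = {"Fan 3", "Fan 4"}

print(find_phantom_fans(host_rpm, ilo_installed))
```

With this sample data the script prints `['Fan 1', 'Fan 2']`. An installed fan that really stops at 0 RPM is not reported as a phantom, because iLO still lists it.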

HP support keeps pointing me to the URL (Using HPE Custom ESXi Images to Install ESXi on HPE ProLiant Servers | HPE), which I assured them I used to update 6.5 U1 to 6.5 U2. Resetting logs/sensors doesn't work either.

I checked the HCL and the server build is most definitely on it, but I guess that's just a grey area until it's verified.

Oh happy days.

Anyone having the same issue?

Hi all,

as has been mentioned, VMware is working on a fix for the problem described.

As this issue is possibly related to an internal problem record, the following KB has been mentioned:

I've also asked the KB-Team to get the KB updated.

Unfortunately, there is no ETA as to when the fix will be available and I am sorry if this causes any issues.

In case I have an update on the ETA, I will post it here.

Thanks for your input on this,

Rick

Cloud Customer Success Architect - VMware Cloud

VMware Inc.

http://cloudsuccess.blog

FYI:

Issue is present in 6.7.0, 8169922.

Hi Rick,

Could you confirm that the issue is related to the ESXi kernel and that a bugfix is planned for release in October?

Thanks,

G.Di Vaio

Same problem with ProLiant DL380 Gen9 servers, ESXi 6.5.0 8294253, vCenter 6.5.0 8307201

Please keep us informed as this problem tricks our monitoring system.

Regards,

Hi all,

Unfortunately, I do not have an update on this yet, so I cannot confirm any "fixed by" date.

However, I know that this issue is actively being looked into.

I'll keep an eye on this and will update you all here as soon as I have valid information I can share.

Thanks for your patience,

Rick

Cloud Customer Success Architect - VMware Cloud

VMware Inc.

http://cloudsuccess.blog

The problem also persists on DL360 and DL380 Gen9s, all running VMware ESXi, 6.7.0, 8169922.

Exact same issue on a DL360 G9 running HP Customized 6.7.0 8169922.

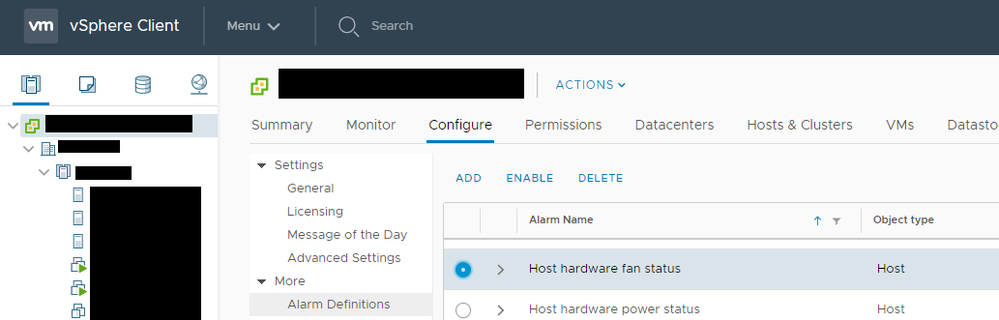

Edit: You can disable the "Host Hardware Fan Status" Alarm definition to stop it from triggering constantly. Not ideal, but the definition is useless while this bug exists.

Hey, where can it be disabled?

Configure tab -> Alarm Definitions -> sort by alarm name so it is easier to find -> Host hardware fan status -> select it -> hit the Disable button above it (my screenshot says Enable, since it is already disabled)

Anyone receive any update? Have same issue with Dell OEM image.

According to Re: HPE 380 Gen 9 FAN Status in vSphere 6.7 this will be fixed in an upcoming update for ESXi 6.7. Assuming that the issue is the same with other versions too, there's a good chance for a fix within the next few months.

André

Hello All,

This false alarm is a known issue after upgrading to 6.5 U2.

This issue will be resolved in 6.7 U1.

Thank You

Mohammed Younus

What about those who are not able to update to 6.7? For example, my SAN is supported only on 6.5, not on 6.7. I assume there will be a fix for 6.5 as well?

I was checking this

https://kb.vmware.com/s/article/57171

Does this mean that we have a fix?

Is there a way we can disable monitoring for only the sensors it's complaining about?

I think we have the fix in the coming version of 6.7. Not sure if it has been fixed in the 6.5 tree. And we will not be able to disable monitoring for specific sensors. You might have to disable the respective alarm itself.

Please consider marking this answer as "correct" or "helpful" if you think your questions have been answered.

Cheers,

Supreet

Hello All,

I have an ML350 Gen9 (single CPU with redundant fan configuration) experiencing the same glitch with fan warnings.

According to HP documentation, when an ML350 Gen9 is configured with a single CPU and redundant fans, fan slot 5 is empty by design and installing a fan in that slot is unsupported.

As a temporary test, I have adjusted the `Host hardware fan status` alarm trigger as follows.

Open Alarm Definitions.

Select Host hardware fan status.

Click Edit.

Select Triggers.

Select the Alert trigger.

Add a second condition:

Trigger Argument: Fan Device 5 Fan 5

Operator: Not Equal To

Value: Warning

In early testing, this configuration suppresses the warning state for the system fans, as it forces the alert to ignore the missing fan in slot 5.

With that said, I am not happy with this configuration, as I fear it will miss a failure of any other installed fan.

I intend to perform some further testing the next time I can arrange an outage window for the server (time frame unknown).

Testing will involve migrating all VMs off the server

Placing it into maintenance mode

Removing one of the installed fans and monitoring the alert status.

For other servers, you will likely need to perform similar steps and adjust the `not equal to` condition to reflect the fan that vSphere is complaining about.
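The behaviour this workaround is aiming for, and the check planned for the outage window, can be written down as a small Python sketch. This is a model of the intended logic only, not of how vCenter actually evaluates alarm triggers: the status strings, the function name `fan_alarm_should_fire`, and the sensor name `Fan Device 4 Fan 4` are assumptions made up for illustration (only `Fan Device 5 Fan 5` comes from the post).

```python
from typing import Dict, Iterable

# Statuses that should raise the alarm (assumed names, not vCenter constants).
BAD_STATUSES = {"warning", "alert"}


def fan_alarm_should_fire(statuses: Dict[str, str], ignored: Iterable[str]) -> bool:
    """True if any fan outside the ignore list reports a bad status."""
    ignored_set = set(ignored)
    return any(
        status in BAD_STATUSES
        for sensor, status in statuses.items()
        if sensor not in ignored_set
    )


# The empty bay from the post; adjust per server.
ignored = ["Fan Device 5 Fan 5"]

# Normal operation: only the empty bay is in warning, so no alarm is wanted.
healthy = {"Fan Device 4 Fan 4": "normal", "Fan Device 5 Fan 5": "warning"}
print(fan_alarm_should_fire(healthy, ignored))

# Planned test: an installed fan is pulled, so the alarm must still fire.
fan_removed = {"Fan Device 4 Fan 4": "alert", "Fan Device 5 Fan 5": "warning"}
print(fan_alarm_should_fire(fan_removed, ignored))
```

The second case is the one that matters: if the real alarm stays quiet after an installed fan is removed, the extra condition is masking genuine failures and the workaround should not be used.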

If we can find a combination of alert triggers, we might be able to provide a community fix / workaround until VMware / HP can release a patch.

Please test in a Dev environment first as you don't want an outage by accident.

Cheers,

This is not a fix, it is a workaround, and VMware listing this as expected behaviour is a bit of a joke. As mentioned in earlier posts, VMware is aware of this and hopefully in a few months' time this will be fixed.

I have only ever noticed this on HP servers that support 2 CPUs but have only 1 CPU installed. As mentioned by another member, the alert is triggered by the missing fans. Hosts configured with 2 CPUs seem fine. It is not a show stopper, just a warning you need to ignore for now.

Seems that VMware released some fixes...

Please apply the latest VCSA updates as well as the latest ESXi patches via VUM.

Unfortunately I cannot test it myself anymore because I downgraded to vSphere 6.0.

If anyone thinks this will fix the critical alert about 0 RPM for fans that are absent on purpose (HPE even requires this when only one CPU is installed), then don't bother installing the new updates.

It won't go away.

Worse:

Another warning that won't go away (only a warning) will appear. The fix includes some sort of CPU kernel patch for one of those CPU-gate (Spectre/Meltdown) problems.

You will have to change hyperthreading settings and lower the performance of your hosts, or ignore the warning.

Since VMware is totally okay with these permanent warning and critical notifications and is training us all to ignore these little red or yellow signs (which is slowly making this monitoring mechanism completely obsolete), I am actually not that annoyed anymore... (after 5 years now of ignoring these signs: I had to ignore something about DRS problems for 4 years, waiting for the promised fix in every next Ux)

I am really considering, as a philosophy, shutting off the monitoring alerts that VMware doesn't get under control and makes no effort at all to fix.

As I always say, I would change to another hypervisor, but I can't: with all those flaws, they are still the best of all.

BR,

Andre

Still there on VMware ESXi, 6.5.0, 9298722
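For anyone tracking this across a fleet, the build reports scattered through this thread can be collected into a small lookup. The Python sketch below uses only the version/build pairs posted above; the function names and the regular expression are assumptions for illustration, and a build that nobody reported on returns `None` rather than a guess.

```python
import re
from typing import Optional, Tuple

# Builds mentioned in this thread and whether posters saw the false fan alarm.
REPORTED = {
    ("6.5.0", 7967591): False,  # 6.5 U1: issue not seen
    ("6.5.0", 8294253): True,   # 6.5 U2
    ("6.7.0", 8169922): True,
    ("6.5.0", 9298722): True,
}


def parse_esxi(text: str) -> Optional[Tuple[str, int]]:
    """Extract (version, build) from text like 'VMware ESXi, 6.5.0, 8294253'."""
    match = re.search(r"(\d+\.\d+\.\d+),?\s+(\d{6,})", text)
    if match is None:
        return None
    return match.group(1), int(match.group(2))


def seen_in_thread(text: str) -> Optional[bool]:
    """True/False if the build was reported in this thread, None if unknown."""
    parsed = parse_esxi(text)
    if parsed is None:
        return None
    return REPORTED.get(parsed)


print(seen_in_thread("VMware ESXi, 6.5.0, 9298722"))
print(seen_in_thread("VMware ESXi, 6.5.0, 7967591 (U1)"))
```

The table is a record of what posters observed, not an official list of affected builds.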