- Cluster Design Question

I have a question regarding the cluster design for NSX. We have a medium-sized deployment (>10 hosts) with a management cluster and an edge/compute cluster. This was designed so that our management VMs would be isolated from NSX-enabled workloads. The design guide mentions that collapsing the Edge and Compute clusters is not recommended, because Edge workloads are CPU-centric. My question is, are there any issues with my current setup? We actively monitor our hosts and are prepared to scale out when necessary. As long as we monitor growth, there shouldn't be any issues with this design, correct?

Accepted Solutions

As a general design recommendation, separating the Management, Control and Data planes is considered best practice. From a vSphere perspective it is also a good idea to keep the Management and Compute clusters separate, for the pros and cons explained here:

http://www.settlersoman.com/why-to-create-the-vmware-management-cluster/

Since NSX is a scale-out architecture, it can be deployed on anything from 5 hosts up to thousands of hosts, so the design criteria may differ considerably depending on the physical environment and the applications.

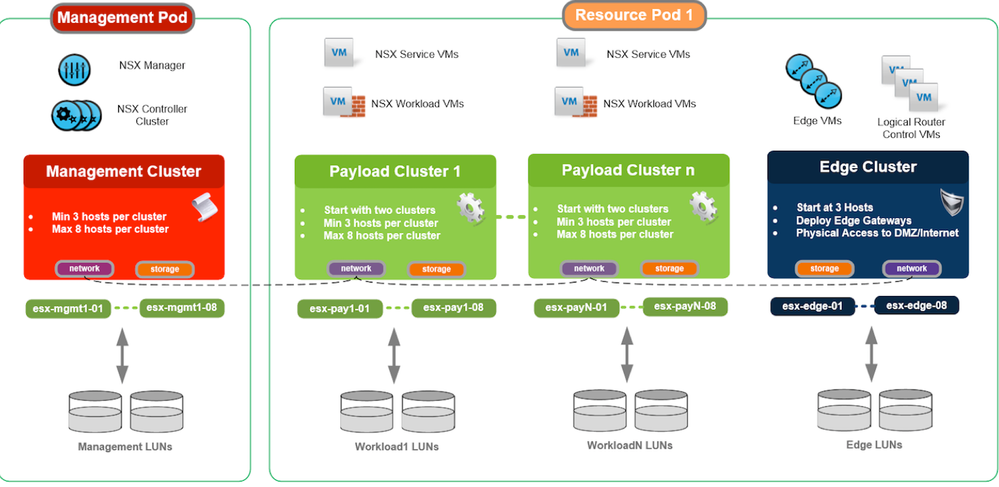

For the Management cluster, 3 ESXi hosts are required for the redundancy of the 3 Controllers. If the Edge cluster is also separated, redundancy again requires at least 2 ESXi hosts. The trade-off of this design is that the Edge cluster hosts are dedicated to Edge VMs, so their CPU and RAM resources are not available to the Compute VMs that serve the business applications. With 10 hosts, using 5 hosts for Management+Edge shrinks the Compute cluster, so this may not always be possible. As noted below, even a single cluster for Compute+Edge+Management may be chosen. With 100 hosts, however, setting aside 4 hosts for an Edge cluster may be preferred.
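To make the host arithmetic above concrete, here is a minimal Python sketch. The function names are hypothetical, and the defaults (3 Management hosts, 2 Edge hosts) are simply the minimums mentioned in this post, not a sizing rule.

```python
def compute_hosts_left(total_hosts: int, mgmt_hosts: int = 3, edge_hosts: int = 2) -> int:
    """Hosts left for the Compute cluster after dedicating hosts to Management and Edge."""
    reserved = mgmt_hosts + edge_hosts
    if reserved > total_hosts:
        raise ValueError("not enough hosts for dedicated Management and Edge clusters")
    return total_hosts - reserved


def reserved_fraction(total_hosts: int, mgmt_hosts: int = 3, edge_hosts: int = 2) -> float:
    """Fraction of all hosts that is not available to Compute VMs."""
    return (mgmt_hosts + edge_hosts) / total_hosts


if __name__ == "__main__":
    # The two cases from the post: 10 hosts with 2 Edge hosts, 100 hosts with 4 Edge hosts.
    for total, edge in ((10, 2), (100, 4)):
        left = compute_hosts_left(total, edge_hosts=edge)
        frac = reserved_fraction(total, edge_hosts=edge)
        print(f"{total} hosts: {left} left for Compute, {frac:.0%} reserved")
```

With 10 hosts half of the environment is reserved, while with 100 hosts the dedicated clusters take only a small share, which is why dedicated clusters become easier to justify as the host count grows.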

So if 2 clusters seem the best choice given the design constraints, there are 2 combinations, depending on where the Edge VMs are placed:

- Management+Edge and Compute

- Compute+Edge and Management

- Since Edge VMs talk to the physical world (for example routers), they need VLAN uplinks to route VM traffic to the physical WAN or Internet. Depending on the physical data center design, extending these VLANs to either the Management pod or the Resource pods may not be possible or desirable.

- Total bandwidth needed for North-South traffic. If ECMP is used, an Edge cluster of 8 VMs may scale up to 80 Gbps. If the applications require this kind of throughput, carrying that much traffic on a 3-host Management cluster may not be possible because of the number of 10 Gbps physical uplinks per host. If the Management VLAN and the Uplink VLAN coexist on the same uplink, Network I/O Control may be needed. Since the Management VMs are not the destination of Compute traffic, this high-throughput traffic would have to traverse the VTEPs of the Management hosts as VXLAN traffic on the DLR-Edge logical switch, increasing the number of hops and latency. If the Edge VMs instead reside on the Compute cluster, delivering this kind of high-throughput traffic may be easier.

- The total number of Edge VMs in the design may also be important. 2 Edges may be enough for a wide variety of applications and VM counts, or there may be many Edges if NAT or duplicate IPs are required to separate tenants in a cloud environment.

- The size of the Edge VMs and their vCPU requirements may change depending on which services (load balancing, firewall, NAT, VPN) are delivered.

- If traffic is planned to grow to high volumes, placing the Edge VMs in the Compute cluster may be a good idea.

- In both options resource scheduling may be useful: between the Edge and Management VMs in the 1st option, and between the Edge and Compute VMs in the 2nd option.
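The bandwidth point in the list above can be checked with a rough calculation. The following Python sketch is only an illustration: the function names are hypothetical, the 10 Gbps per Edge VM is inferred from the "8 VMs, up to 80 Gbps" figure, and the 2 uplinks per host is an assumed value that should be replaced with the real one.

```python
def ecmp_north_south_gbps(edge_vms: int, gbps_per_edge: float = 10.0) -> float:
    """Aggregate North-South throughput if every ECMP Edge VM runs at gbps_per_edge."""
    return edge_vms * gbps_per_edge


def cluster_uplink_gbps(hosts: int, uplinks_per_host: int, gbps_per_uplink: float = 10.0) -> float:
    """Raw physical uplink capacity of a cluster, ignoring any other traffic on those uplinks."""
    return hosts * uplinks_per_host * gbps_per_uplink


def fits(demand_gbps: float, capacity_gbps: float) -> bool:
    """True if the demanded throughput does not exceed the raw uplink capacity."""
    return demand_gbps <= capacity_gbps


if __name__ == "__main__":
    demand = ecmp_north_south_gbps(edge_vms=8)                 # 8 ECMP Edges
    capacity = cluster_uplink_gbps(hosts=3, uplinks_per_host=2)  # assumed: 2 x 10 Gbps per host
    print(f"demand {demand} Gbps, capacity {capacity} Gbps, fits: {fits(demand, capacity)}")
```

Under these assumptions a 3-host Management cluster cannot carry the full ECMP throughput even before Management, vMotion and VTEP traffic on the same uplinks is taken into account.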

This thread has links on when to use 1, 2 or 3 clusters, according to the size of the environment.

Basically, the choice of clusters may be:

- 3 separate clusters for Compute, Edge and Management for large environments (where the distinction between large and medium starts may be a design constraint)

- 2 clusters consisting of Compute and Edge+Management for medium environments

- A single cluster for Compute+Edge+Management for small environments (resource scheduling may be needed so that Compute VMs do not oversubscribe the CPU and memory resources of the NSX components)

Basically, the choice depends on the total scalability of the vSphere environment. For example, for the Management cluster in which NSX Manager and the Controllers reside, 3 separate ESXi hosts are recommended:

http://stretch-cloud.info/2015/03/a-separate-nsx-edge-cluster-or-not


Thank you for the reply cnrz, that helps a lot. I actually came across the same article, which made me question our current design.

> Since Edge VMs talk to the physical world (for example routers), they need VLAN uplinks to route VM traffic to the physical WAN or Internet. Depending on the physical data center design, extending these VLANs to either the Management pod or the Resource pods may not be possible or desirable.

This is one of the reasons we kept our Edge/Compute cluster separate from the Management cluster. All of our hosts use the same uplinks to get out to the Internet. The difference with our Compute/Edge cluster is the addition of the VTEP so that the Edges are reachable.

> Total bandwidth needed for North-South traffic. If ECMP is used, an Edge cluster of 8 VMs may scale up to 80 Gbps. If the applications require this kind of throughput, carrying that much traffic on a 3-host Management cluster may not be possible because of the number of 10 Gbps physical uplinks per host. If the Management VLAN and the Uplink VLAN coexist on the same uplink, Network I/O Control may be needed. Since the Management VMs are not the destination of Compute traffic, this high-throughput traffic would have to traverse the VTEPs of the Management hosts as VXLAN traffic on the DLR-Edge logical switch, increasing the number of hops and latency. If the Edge VMs instead reside on the Compute cluster, delivering this kind of high-throughput traffic may be easier.

Per my last comment, do you think I should enable NIOC on my Compute/Edge cluster? We have multiple uplinks that are shared amongst our different portgroups.

> The total number of Edge VMs in the design may also be important. 2 Edges may be enough for a wide variety of applications and VM counts, or there may be many Edges if NAT or duplicate IPs are required to separate tenants in a cloud environment.

This was actually another question I had. In our design, we have separate dedicated Edge VMs for clients. So if we have 50 customers, we may have 50 Edge VMs. This is because a majority of our customers take advantage of the different options available on the Edge, such as NAT, SSL VPN, etc. Is this a normal configuration?

> If traffic is planned to grow to high volumes, placing the Edge VMs in the Compute cluster may be a good idea.

The bulk of our traffic is in our Compute/Edge cluster, which is another reason why we put our Edges there.

Thank you again for your assistance.

NIOC may be needed when different types of traffic contend for the same physical uplink, for example if Management, Edge, VXLAN VTEP, vMotion or other traffic types use the same uplinks. It is generally a good idea to keep them separate, but if that is not possible, port groups may be designed according to traffic usage. With well-designed uplink teaming policies that do not produce saturation, NIOC will just add complication to the design and configuration. NSX adds VTEP traffic as another traffic type, so the general NIOC guidelines that apply without NSX should be valid here.
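As a rough illustration of what NIOC shares do when an uplink is saturated, the Python sketch below divides a link proportionally among the traffic types that are competing for it. The traffic type names and share values are hypothetical examples, not recommendations, and the model deliberately ignores reservations and limits.

```python
from typing import Dict


def nioc_allocation(shares: Dict[str, int], link_gbps: float = 10.0) -> Dict[str, float]:
    """Bandwidth each active traffic type gets on a saturated uplink, proportional to its shares.

    Shares only matter under contention; on an unsaturated link each type
    can use whatever bandwidth it needs.
    """
    total = sum(shares.values())
    if total <= 0:
        raise ValueError("at least one traffic type needs a positive share value")
    return {name: link_gbps * value / total for name, value in shares.items()}


if __name__ == "__main__":
    # Hypothetical share values for traffic types sharing one 10 Gbps uplink.
    example_shares = {"management": 25, "vmotion": 50, "vxlan_vtep": 100, "vm_traffic": 25}
    for name, gbps in nioc_allocation(example_shares).items():
        print(f"{name}: {gbps:.2f} Gbps under contention")
```

If the uplinks never saturate, these proportions never come into play, which is the case where NIOC mostly adds configuration overhead.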

The Reference Design Guide mentions QoS under the requirement for a robust physical network, but does not refer to NIOC:

https://www.vmware.com/files/pdf/products/nsx/vmw-nsx-network-virtualization-design-guide.pdf

The following design uses NIOC (it is based on Cisco N7K switches and UCS servers):

https://www.vmware.com/files/pdf/products/nsx/vmware-nsx-on-cisco-n7kucs-design-guide.pdf

http://blog.igics.com/2015/12/end-to-end-qos-solution-for-vmware.html

Although not a must, if there are different tenants, projects or environments (test, development, production), an Edge gateway for each of them may be a good idea, as there may be IP overlap and different requirements for common network services. One trade-off is the resource usage of these Edges, but theoretically there can be 2000 Edge devices per NSX Manager:

https://www.vmguru.com/2015/03/vmware-nsx-v-configuration-maximums/

Thank you for the helpful insight cnrz.