VI: VMware ESXi™ 3.5 Discussions

Virtual Networking with 6 pNICs


This article by Edward Haletky shows how to best use the NIC ports on a server with 6 ports:

pNIC0 -> vSwitch0 -> Portgroup0 (service console)

pNIC1 -> vSwitch0 -> Portgroup1 (VMotion)

pNIC2 -> vSwitch1 -> Portgroup2 (Storage Network)

pNIC3 -> vSwitch1 -> Portgroup2 (Storage Network)

pNIC4 -> vSwitch2 -> Portgroup3 (VM Network)

pNIC5 -> vSwitch2 -> Portgroup3 (VM Network)
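Writing the layout above down as data makes it easy to confirm that every vSwitch has a second uplink for redundancy. The following is a minimal Python sketch, not a VMware API: the list and function names are hypothetical and simply reuse the names from the post.

```python
# Hypothetical data model of the six-port layout above. The names mirror the
# post (pNIC0..pNIC5, vSwitch0..2); this is not a VMware API.
from collections import defaultdict

LAYOUT = [
    # (physical NIC, vSwitch, port group)
    ("pNIC0", "vSwitch0", "Portgroup0 (service console)"),
    ("pNIC1", "vSwitch0", "Portgroup1 (VMotion)"),
    ("pNIC2", "vSwitch1", "Portgroup2 (Storage Network)"),
    ("pNIC3", "vSwitch1", "Portgroup2 (Storage Network)"),
    ("pNIC4", "vSwitch2", "Portgroup3 (VM Network)"),
    ("pNIC5", "vSwitch2", "Portgroup3 (VM Network)"),
]


def uplinks_per_vswitch(layout):
    """Group the physical NICs by the vSwitch they uplink."""
    uplinks = defaultdict(list)
    for pnic, vswitch, _portgroup in layout:
        uplinks[vswitch].append(pnic)
    return dict(uplinks)


def single_uplink_vswitches(layout):
    """Return the vSwitches with fewer than two uplinks (no NIC redundancy)."""
    return sorted(v for v, nics in uplinks_per_vswitch(layout).items() if len(nics) < 2)


if __name__ == "__main__":
    for vswitch, nics in uplinks_per_vswitch(LAYOUT).items():
        print(vswitch, "->", ", ".join(nics))
    print("vSwitches without a second uplink:", single_uplink_vswitches(LAYOUT) or "none")
```

In this layout every vSwitch has two uplinks, so the redundancy check reports none missing.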

The article talks about ESX. Should this be different for ESXi?

Also, this might be stupid, but I don't understand how any data can get to the production LAN in this scenario. Shouldn't at least one of the pNICs be connected to the LAN?

I am currently working on a Visio diagram; I'll try to attach it.

Accepted Solutions


Don't put VMotion and management on the same switches as iSCSI; otherwise you have to create 4 VLANs (2 for iSCSI, 1 for VMotion, and 1 for management) and a trunk port between the switches for the VMotion and management VLANs.

In any case, for BEST storage performance use dedicated switches only for iSCSI.

Andre

If you found this or any other answer useful, please consider allocating points for helpful or correct answers.
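A minimal sketch of the VLAN plan described above for the shared-switch case, assuming the two iSCSI VLANs each live on only one of the two switches (as in a two-subnet design) while VMotion and management exist on both. The VLAN IDs and switch names are made up; the point is that only VLANs present on both switches need to cross the inter-switch trunk.

```python
# Hypothetical VLAN plan for VMotion and management sharing the two iSCSI
# switches. ASSUMPTIONS: VLAN IDs and switch names are invented, and each
# iSCSI VLAN exists on only one switch.
VLANS = {
    101: ("iSCSI subnet A", {"switch1"}),
    102: ("iSCSI subnet B", {"switch2"}),
    200: ("VMotion", {"switch1", "switch2"}),
    300: ("Management", {"switch1", "switch2"}),
}


def trunk_vlans(vlans, sw_a, sw_b):
    """VLANs that must cross the inter-switch trunk: those present on both switches."""
    return sorted(
        vid for vid, (_name, switches) in vlans.items() if {sw_a, sw_b} <= switches
    )


if __name__ == "__main__":
    print("VLANs defined:", len(VLANS))
    for vid in trunk_vlans(VLANS, "switch1", "switch2"):
        print("trunk carries VLAN", vid, "-", VLANS[vid][0])
```

With dedicated iSCSI switches, as recommended, none of this VLAN and trunk bookkeeping is needed on the storage switches.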


You can follow the same layout for ESXi. ESXi has no service console port; instead you create a VMkernel port for management. So vSwitch0 would be the same but with 2 VMkernel ports (and you'll want to set one port to be active on one NIC and standby on the other, and vice versa for the other VMkernel port). For the storage network you'll only need one VMkernel port. For vSwitch2 you'll only have one virtual machine port group.

> Also, this might be stupid, but I don't understand how any data can get to the production LAN in this scenario. Shouldn't at least one of the pNICs be connected to the LAN?

The NICs for vSwitch2 should be connected to your LAN. vSwitch0 should be on a private management network if possible, and vSwitch1 should ideally be on an isolated storage network.
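The active/standby arrangement described above for the two VMkernel ports on vSwitch0 can be modeled in a few lines of Python. This is a hypothetical sketch: it assumes pNIC0/pNIC1 appear as vmnic0/vmnic1 and uses invented port group names. It only illustrates the failover order; it does not configure a host.

```python
# Hypothetical model of mirrored active/standby teaming for the two VMkernel
# ports on vSwitch0. ASSUMPTIONS: vmnic names and port group names are invented.
TEAMING = {
    # port group: (active NICs, standby NICs), in failover order
    "Management": (["vmnic0"], ["vmnic1"]),
    "VMotion": (["vmnic1"], ["vmnic0"]),
}


def carrying_nic(portgroup, failed=frozenset()):
    """Return the first healthy NIC in failover order, or None if all are down."""
    active, standby = TEAMING[portgroup]
    for nic in active + standby:
        if nic not in failed:
            return nic
    return None


def is_mirrored(teaming):
    """For exactly two port groups: each one's active NIC is the other's standby."""
    (a_act, a_stby), (b_act, b_stby) = teaming.values()
    return a_act == b_stby and b_act == a_stby


if __name__ == "__main__":
    print(carrying_nic("Management"))                     # both NICs healthy
    print(carrying_nic("Management", failed={"vmnic0"}))  # vmnic0 down
    print(carrying_nic("VMotion", failed={"vmnic1"}))     # vmnic1 down
    print("mirrored:", is_mirrored(TEAMING))
```

In normal operation management and VMotion each get their own NIC; if either NIC fails, both port groups fall back onto the surviving one.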


> This article by Edward Haletky shows how to best use the NIC ports on a server with 6 ports:

One of the many experts we have, but he also wrote a book about ESX, so it's probably good advice.

> The article talks about ESX. Should this be different for ESXi?

No

> Also, this might be stupid, but I don't understand how any data can get to the production LAN in this scenario. Shouldn't at least one of the pNICs be connected to the LAN?

It is. That's the 'Storage Network'. Or SAN. Redundant ports of course.

> I am currently working on a Visio diagram; I'll try to attach it.

Also, you may consider using JPEGs or GIFs rather than files that need a document viewer. I for one don't have Visio, and yes, I could download a viewer, but I don't want to clutter up my system with programs I MAY use once a year. Pictures are easier to see on the web: we don't have to download attachments, we can see them inline.


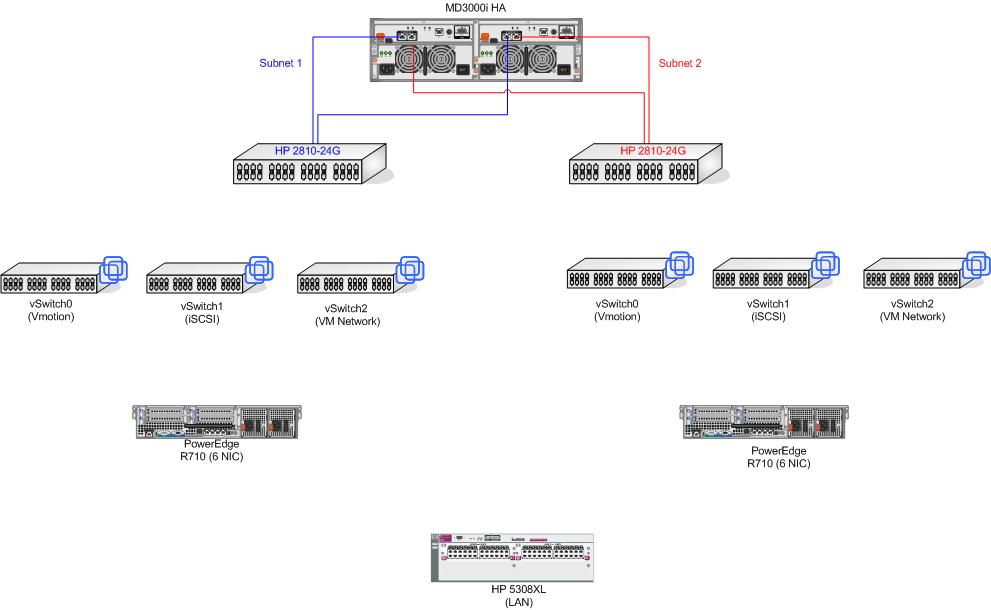

Sorry about attaching a Visio drawing!

It is in the early stages, as I am not sure how the cabling will be configured. That is why I'm here.

I'm getting conflicting information as to which ports should connect to the production LAN. My understanding is that vSwitch1 (Storage Network) is a VMkernel port for iSCSI and should be isolated.

I also thought the VM Network meant an isolated network for traffic between VMs, not traffic from the LAN to the VMs and vice versa. Thanks!


vSwitch2 (VM Network) should connect to the production LAN. That is what the VMs will use to talk to your LAN. This should be separate from your management VLAN, as you want to keep the VMs and the service console/VMotion networks separate from each other.

vSwitch1 should be as isolated as possible, to minimize throughput/bandwidth issues and to provide security and low latency, since it is the basis for all of your VMs.

Just reiterating what's already been said above.

-KjB

VMware vExpert


OK, so it should look like this:

pNIC0 -> vSwitch0 -> Portgroup0 (VMkernel management) (failover pNIC for Portgroup1)

pNIC1 -> vSwitch0 -> Portgroup1 (VMkernel VMotion) (failover pNIC for Portgroup0)

pNIC2 -> vSwitch1 -> Portgroup2 (VMkernel iSCSI)

pNIC3 -> vSwitch1 -> Portgroup2

pNIC4 -> vSwitch2 -> Portgroup3 (VM Network)

pNIC5 -> vSwitch2 -> Portgroup3

vSwitch2 should have an IP address from my production LAN, and connect to it.

vSwitch1 should have an IP address from one of the iSCSI subnets, and connect to one of the dedicated HP 2810 switches.

vSwitch0: I don't know.


vSwitch0 will have one IP for management, which should be on its own VLAN, and one IP for VMotion, which would ideally be on its own subnet. These VLAN requirements describe the ideal situation; you can combine them as needed, based on your own network configuration limitations.

-KjB


To add to that: each VMkernel port group that you create needs a unique IP address, so the management, VMotion, and iSCSI IP addresses will all have to be on different subnets. For VMotion, only the ESXi hosts need to reach each other on that IP range, so you can use a subnet that isn't in use elsewhere and you won't have to worry about routing it.

> vSwitch2 should have an IP address from my production LAN, and connect to it

This vSwitch won't have an ESXi IP address (nor a VMkernel port group). Only the VMs will have IP addresses.
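The addressing rules above can be checked with Python's standard `ipaddress` module. The addresses below are made-up examples; the sketch only confirms that the VMkernel interfaces sit on non-overlapping subnets and that the VMotion range is private (RFC 1918) address space. The VM Network port group is deliberately absent, since it carries no host IP.

```python
# Hypothetical addressing check: one IP per VMkernel port, each on its own
# subnet. ASSUMPTION: all addresses are invented examples.
import ipaddress
from itertools import combinations

VMKERNEL_PORTS = {
    "Management": ipaddress.ip_interface("192.168.1.21/24"),
    "VMotion": ipaddress.ip_interface("10.10.10.21/24"),
    "iSCSI": ipaddress.ip_interface("10.20.20.21/24"),
}
# The VM Network port group is not listed: the host has no IP on it.


def overlapping_pairs(ports):
    """Return pairs of VMkernel ports whose subnets overlap."""
    return [
        (a, b)
        for (a, ia), (b, ib) in combinations(ports.items(), 2)
        if ia.network.overlaps(ib.network)
    ]


if __name__ == "__main__":
    for name, iface in VMKERNEL_PORTS.items():
        print(f"{name:<10} {iface.ip}  subnet {iface.network}")
    print("overlapping subnets:", overlapping_pairs(VMKERNEL_PORTS) or "none")
    print("VMotion subnet is private:", VMKERNEL_PORTS["VMotion"].network.is_private)
```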


Very important: the MD3000i works with 2 different iSCSI networks (for good HA).

You have to use 2 different vSwitches, one for each network, each with a single physical NIC, and each with a service console port (for iSCSI initialization) and a VMkernel port.

With this configuration you will also see the multipath feature under your storage.

The other LAN/management/VMotion vSwitches can instead use 2 physical NICs to have network failover.

Andre
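A sketch of why the two-network design gives multiple paths, assuming made-up addresses and an array with two controllers, each with one iSCSI port on each subnet. It pairs each host VMkernel IP with the array ports on the same subnet (no routing), which is only a rough proxy for the candidate paths multipathing can use; it is not how ESX actually discovers paths. Because ESXi has no service console, only VMkernel ports are modeled.

```python
# Hypothetical two-network iSCSI layout: one vSwitch with a single physical
# NIC per iSCSI network. ASSUMPTIONS: addresses, vSwitch names, vmnic numbers,
# and the controller/port arrangement are all invented for illustration.
import ipaddress

HOST_VMKERNEL = {
    "vSwitchA (vmnic2)": ipaddress.ip_interface("10.1.1.21/24"),
    "vSwitchB (vmnic3)": ipaddress.ip_interface("10.1.2.21/24"),
}
ARRAY_PORTS = {
    "controller0-port0": ipaddress.ip_address("10.1.1.100"),
    "controller0-port1": ipaddress.ip_address("10.1.2.100"),
    "controller1-port0": ipaddress.ip_address("10.1.1.101"),
    "controller1-port1": ipaddress.ip_address("10.1.2.101"),
}


def candidate_paths(host, array):
    """Pair each host VMkernel IP with the array ports on its own subnet (no routing)."""
    return [
        (vswitch, port)
        for vswitch, iface in host.items()
        for port, ip in array.items()
        if ip in iface.network
    ]


def controllers_reached(paths):
    """Set of array controllers that at least one path still reaches."""
    return {port.split("-")[0] for _vswitch, port in paths}


if __name__ == "__main__":
    for vswitch, port in candidate_paths(HOST_VMKERNEL, ARRAY_PORTS):
        print(vswitch, "->", port)
    # Simulate losing iSCSI network A (its NIC, cable, or switch).
    survivors = {k: v for k, v in HOST_VMKERNEL.items() if not k.startswith("vSwitchA")}
    print("controllers still reachable:",
          sorted(controllers_reached(candidate_paths(survivors, ARRAY_PORTS))))
```

Under these assumptions each host NIC reaches one port on each controller, so losing one whole network still leaves a path to both controllers.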


OK, I'm thoroughly confused now.

How about opinions on the dedicated switches for iSCSI? I'm considering going with Dell PowerConnect 5424's, but they aren't stackable. Will that make a difference? I can use either a single or double subnet configuration. If you look at pages 208 and 209 from here:

You'll see the two different configuration options. In the single subnet configuration, the switches have to be linked; in the dual subnet configuration they don't. Which one provides both failover and load balancing? Does it solely depend on the capabilities of the switches?


The dual subnet gives you additional network resilience, although it's not required. You also don't need the switches to be stackable unless you're thinking of an EtherChannel, which you don't necessarily need here. You can have each interface going to a separate switch, either on the same subnet or not; the typical config is one subnet spread over both switches. The switches would need to have a connection to each other as well, but this would give you the ability to use both NICs, as long as your controllers each have their own IP address.

-KjB
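The point that a single subnet spread over two switches needs a link between them can be illustrated with a tiny reachability model. Switch, NIC, and target names are hypothetical, and the model assumes each host NIC and each array port is cabled to exactly one switch.

```python
# Hypothetical reachability model for one iSCSI subnet spread over two
# switches. ASSUMPTIONS: names are invented; "linked" means a direct cable
# between the two switches.
NIC_SWITCH = {"vmnic2": "switchA", "vmnic3": "switchB"}
TARGET_SWITCH = {"ctrl0": "switchA", "ctrl1": "switchB"}


def reachable(sw_from, sw_to, links):
    """Layer-2 reachability: same switch, or the two switches are directly linked."""
    return sw_from == sw_to or frozenset((sw_from, sw_to)) in links


def reachable_targets(links):
    """For each host NIC, the array ports it can actually reach."""
    return {
        nic: sorted(t for t, t_sw in TARGET_SWITCH.items() if reachable(sw, t_sw, links))
        for nic, sw in NIC_SWITCH.items()
    }


if __name__ == "__main__":
    inter_switch_link = {frozenset(("switchA", "switchB"))}
    print("linked:    ", reachable_targets(inter_switch_link))
    print("not linked:", reachable_targets(set()))
```

Without the link, the host still treats every target as reachable from every NIC (they share a subnet), but half of those pairs cannot actually connect. With two subnets, each NIC only talks to targets on its own switch, which is why that design does not need the link.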


I think I'd rather go with the Dell switches, connect them together, and use a single subnet. That will save me some money and time. Is connecting the two switches together as simple as running a cable between them?


Yes. Are you going to uplink these switches to your other network gear, or are these just for iSCSI? If they're just for iSCSI, then no, you should be set.

-KjB


> I'm considering going with Dell PowerConnect 5424's, but they aren't stackable.

For the dedicated iSCSI switches you DO NOT need to stack them.

You must have TWO separate networks. Multipathing is what will select the right network.

More info in the Dell ESX Storage Deployment Guide (page 5, figure 1b).

Andre


> Yes. Are you going to uplink these switches to your other network gear, or are these just for iSCSI? If they're just for iSCSI, then no, you should be set.
>
> -KjB

They will be for iSCSI only. I feel like the setup is complicated enough already.


> > I'm considering going with Dell PowerConnect 5424's, but they aren't stackable.
>
> For the dedicated iSCSI switches you DO NOT need to stack them.
>
> You must have TWO separate networks. Multipathing is what will select the right network.
>
> More info in the Dell ESX Storage Deployment Guide (page 5, figure 1b).
>
> Andre

OK, so they don't need to be stacked if they're dedicated. Thank you for pointing me to that diagram; I've looked at it before and it makes sense visually. What is the reasoning for having 2 subnets? Both the single and double subnet configurations seem to have failover. Does it have something to do with load balancing?


Here is my new diagram. Please take a look and let me know what you think. A couple of questions: shouldn't the management IPs be on my production VLAN (they are in the drawing) so I can manage things from my workstation?

AndreTheGiant said to use 2 different vSwitches, one for each iSCSI network. My drawing does not reflect that, but I'd like more opinions on it.


Two different vSwitches is a bit extreme, but if it fits your requirements...

If you're using two vSwitches to limit your security footprint, then all well and good, but you don't need them for load balancing or multipathing. The only requirement in this case is multiple target IPs, on which to base your load balancing algorithm and split the traffic over as many physical NICs as you have available in that particular team. Again, the main reason to use multiple vSwitches is to create another aggregation point where traffic is split over physical uplinks to your network. Since a vSwitch is a memory-based object, all port groups on that vSwitch share that memory space, so a different vSwitch means a different memory space.

Ultimately, it's up to you.

-KjB
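The point that load balancing relies on multiple target IPs can be illustrated with a toy hash-based uplink selection. ASSUMPTION: the hash here is simply the XOR of the source and destination addresses modulo the number of uplinks; VMware's actual "route based on IP hash" computation may differ, and the addresses and vmnic names are invented.

```python
# Simplified illustration of hashing source/destination IPs onto a NIC team.
# ASSUMPTION: XOR of the two addresses modulo the uplink count; this is a
# stand-in, not VMware's documented algorithm. Addresses are made up.
import ipaddress


def pick_uplink(src, dst, uplinks):
    """Choose an uplink for a src/dst pair with a simple XOR-mod hash."""
    h = int(ipaddress.ip_address(src)) ^ int(ipaddress.ip_address(dst))
    return uplinks[h % len(uplinks)]


if __name__ == "__main__":
    team = ["vmnic2", "vmnic3"]
    initiator = "10.0.0.10"
    # With a single target IP, every session from this initiator hashes to one
    # uplink; with two target IPs, the sessions can land on different uplinks.
    for target in ("10.0.0.20", "10.0.0.21"):
        print(initiator, "->", target, "via", pick_uplink(initiator, target, team))
```

Because the hash only changes when an address changes, one initiator talking to one target always uses the same uplink; adding a second target IP is what gives the team something to split.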


Don't put VMotion and management on the same switches as iSCSI; otherwise you have to create 4 VLANs (2 for iSCSI, 1 for VMotion, and 1 for management) and a trunk port between the switches for the VMotion and management VLANs.

In any case, for BEST storage performance use dedicated switches only for iSCSI.

Andre


Ok, I understand what you're saying. So I should connect the vmotion/management vSwitch to my production LAN switch, and create a VLAN for the VMotion traffic?

Thanks a bunch for looking at the diagram!