- VMware Technology Network

- :

- Cloud & SDDC

- :

- VI 3.X

- :

- VI: VMware ESXi™ 3.5 Discussions

- :

- Re: VI3 Network Diagram - your opinion/input pleas...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

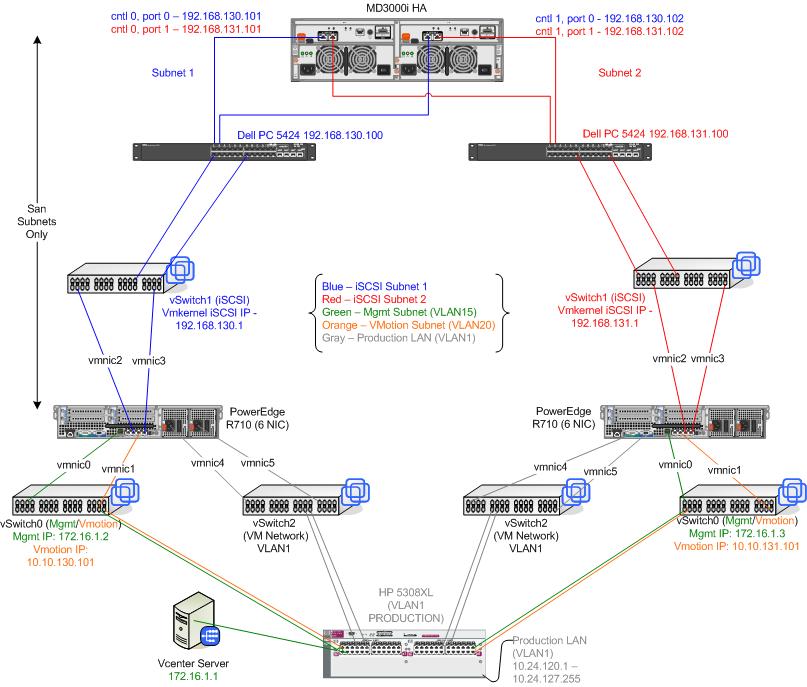

VI3 Network Diagram - your opinion/input please

I am new to VMware Infrastructure, and have been working on this drawing for a while. Please take a look and let me know of anything that is wrong or could be improved.

Can you attach to the post, as opposed to another link?

-KjB

VMware vExpert

Looks familiar.

Your iSCSI connections are not redundant. Right now, if you lose either iSCSI switch, your connection from your server to storage dies. Your physical NICs should have a leg on each switch.

-KjB

VMware vExpert

Are you using class B for VMotion?

I'd go C and keep all the VMotion in a small yet perfectly formed private network.

I'm curious whether this is a new design for an iSCSI solution. Are you using 1GbE, or have you thought of using 10GbE? You will very likely need it in the future for expansion and growth. It is costly, but it saves you from redesigning your network a year from now!

If you found this information useful, please consider awarding points for "Correct" or "Helpful". Thanks!!!

Regards,

Stefan Nguyen

VMware vExpert 2009

iGeek Systems Inc.

VMware, Citrix, Microsoft Consultant

Thank you, I have that corrected now.

Looks familiar.

Your iSCSI connections are not redundant. Right now, if you lose either iSCSI switch, your connection from your server to storage dies. Your physical NICs should have a leg on each switch.

-KjB

VMware vExpert

Are you using class B for VMotion?

I'd go C and keep all the VMotion in a small yet perfectly formed private network.

Good idea. I could go with 192.168.100.x and a 255.255.255.240 mask, which would give 14 usable addresses to work with.
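
For anyone who wants to check that arithmetic, here is a minimal sketch using Python's standard `ipaddress` module. The network address 192.168.100.0 is an assumption, since the post only says "192.168.100.x".

```python
import ipaddress

# 192.168.100.0 is an assumed network address; the post only gives "192.168.100.x".
vmotion_net = ipaddress.ip_network("192.168.100.0/255.255.255.240")

# hosts() excludes the network and broadcast addresses.
usable_hosts = list(vmotion_net.hosts())

print(vmotion_net)                        # 192.168.100.0/28
print(vmotion_net.num_addresses)          # 16
print(len(usable_hosts))                  # 14
print(usable_hosts[0], usable_hosts[-1])  # 192.168.100.1 192.168.100.14
```

A 255.255.255.240 mask is a /28, so 16 addresses minus the network and broadcast addresses leaves the 14 mentioned above.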

I'm curious whether this is a new design for an iSCSI solution. Are you using 1GbE, or have you thought of using 10GbE? You will very likely need it in the future for expansion and growth. It is costly, but it saves you from redesigning your network a year from now!

Regards,

Stefan Nguyen

VMware vExpert 2009

iGeek Systems Inc.

VMware, Citrix, Microsoft Consultant

Yes, it is a new implementation. Yes, I will be using 1GbE. 10GbE is way too expensive for my budget.

Your iSCSI connections are not redundant. Right now, if you lose either iSCSI switch, your connection from your server to storage dies. Your physical NICs should have a leg on each switch.

True for some storage, but NOT for the Dell MD3000i (which this storage seems to be), AXi, CX in iSCSI, ...

This kind of storage uses the SAME fabric topology as FC, and uses multipathing for link/switch failover.

Andre

**if you found this or any other answer useful please consider allocating points for helpful or correct answers

Same for production. Is that one switch or two? Are there at least two blades within the switch that you can connect to? If you have to use the same switch, then you should also leverage an EtherChannel (or trunk in HP speak) and use NIC teaming with the IP hash load balancing policy.

-KjB

VMware vExpert

Your iSCSI connections are not redundant. Right now, if you lose either iSCSI switch, your connection from your server to storage dies. Your physical NICs should have a leg on each switch.

True for some storage, but NOT for the Dell MD3000i (which this storage seems to be), AXi, CX in iSCSI, ...

This kind of storage uses the SAME fabric topology as FC, and uses multipathing for link/switch failover.

Andre

You're right - the way the switches are connected to the MD3000i should allow for failover, even if both cables from each server are going to one switch.

The diagram has 2 NICs for iSCSI, both going to one switch. Meaning, if the switch goes down, there is no storage on that server, unless we're talking wireless networking.

-KjB

VMware vExpert

A typical FC SAN config would have one NIC to one fabric switch, and a second NIC to a second fabric switch. Both HBAs to one switch means storage loss to the server if that switch fails. It's nice to have the switches crossed to each storage device, but typically you would not do this in a SAN environment. One controller would have multiple paths from one switch, and the other controller would have multiple paths to a second switch. You want to split your connections over the switches, and have each switch connect directly to one controller or the other. In this case, if you lose a switch, you lose a path, but the server can still get to the other storage controller. If you lose a NIC, you have a second NIC to a second switch. Putting both NICs/HBAs into one switch creates a single point of failure: the switch. There is nothing to fail over to.

-KjB

VMware vExpert
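
The single-point-of-failure argument above can be checked mechanically. The following is only a rough sketch: it models the cabling as an undirected graph and tests whether the server can still reach the array after each switch is removed. All node names (`esx1`, `sw1`, `sw2`, `ctrl0`, `ctrl1`, `array`) are hypothetical, and the crossed switch-to-controller cabling is an assumption based on the description of the diagram, which is not reproduced in the thread.

```python
from collections import deque

def reachable(links, start, goal, removed=None):
    """Breadth-first search over undirected links, skipping the removed node."""
    adjacency = {}
    for a, b in links:
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for neighbor in adjacency.get(node, ()):
            if neighbor != removed and neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return False

def single_points_of_failure(links, server, storage, switches):
    """Return the switches whose loss cuts the server off from storage."""
    return [sw for sw in switches
            if not reachable(links, server, storage, removed=sw)]

# Hypothetical cabling: both switches are crossed to both storage controllers.
storage_side = [("sw1", "ctrl0"), ("sw1", "ctrl1"),
                ("sw2", "ctrl0"), ("sw2", "ctrl1"),
                ("ctrl0", "array"), ("ctrl1", "array")]

both_nics_one_switch = [("esx1", "sw1")] + storage_side
one_nic_per_switch = [("esx1", "sw1"), ("esx1", "sw2")] + storage_side

print(single_points_of_failure(both_nics_one_switch, "esx1", "array", ["sw1", "sw2"]))  # ['sw1']
print(single_points_of_failure(one_nic_per_switch, "esx1", "array", ["sw1", "sw2"]))    # []
```

Crossing the switches to both controllers does not help a server whose NICs both land on the same switch. Only splitting the server's NICs across the two switches removes the switch as a single point of failure.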

Same for production. Is that one switch or two? Are there at least two blades within the switch that you can connect to? If you have to use the same switch, then you should also leverage an EtherChannel (or trunk in HP speak) and use NIC teaming with the IP hash load balancing policy.

-KjB

VMware vExpert

Yes, there is only one switch for the production LAN. You lost me when you started talking about a trunk/NIC teaming...

The diagram has 2 NICs for iSCSI, both going to one switch. Meaning, if the switch goes down, there is no storage on that server, unless we're talking wireless networking.

True. Two cables in the diagram are in the wrong place.

Each iSCSI NIC must go to a different switch.

Andre

A typical FC SAN config would have one NIC to one fabric switch, and a second NIC to a second fabric switch. Both HBAs to one switch means storage loss to the server if that switch fails. It's nice to have the switches crossed to each storage device, but typically you would not do this in a SAN environment. One controller would have multiple paths from one switch, and the other controller would have multiple paths to a second switch. You want to split your connections over the switches, and have each switch connect directly to one controller or the other. In this case, if you lose a switch, you lose a path, but the server can still get to the other storage controller. If you lose a NIC, you have a second NIC to a second switch. Putting both NICs/HBAs into one switch creates a single point of failure: the switch. There is nothing to fail over to.

-KjB

VMware vExpert

OK, I understand now - it's obvious that if both cables from Server A go to the same switch, and that switch dies, there is no storage for Server A. Attached is the revised diagram. Does it look better? At this point, I'm not sure why there need to be 2 subnets on the iSCSI network...

I'm not sure why there needs to be 2 subnets on the iSCSI network.

It is a requirement to make multipathing work on the MD3000i. You have 4 targets (and 2 initiators for each ESX host) and 4 different paths.

Andre
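
To see where the count of 4 paths comes from, here is a small sketch that pairs each initiator with the targets on its own subnet. Every IP address below is hypothetical (the thread does not list them), and the sketch assumes there is no routing between the two iSCSI subnets.

```python
import ipaddress
from itertools import product

# All addresses are hypothetical; the thread does not give the real ones.
initiators = ["192.168.130.11/24", "192.168.131.11/24"]  # 2 iSCSI ports on one ESX host
targets = [
    "192.168.130.101", "192.168.131.101",  # controller 0, ports 0 and 1
    "192.168.130.102", "192.168.131.102",  # controller 1, ports 0 and 1
]

def usable_paths(initiators, targets):
    """Pair each initiator with the targets that sit on its own subnet."""
    paths = []
    for init, tgt in product(initiators, targets):
        iface = ipaddress.ip_interface(init)
        if ipaddress.ip_address(tgt) in iface.network:
            paths.append((str(iface.ip), tgt))
    return paths

for initiator_ip, target_ip in usable_paths(initiators, targets):
    print(initiator_ip, "->", target_ip)
```

With 2 initiators and 4 targets split over two subnets, each initiator sees one port on each controller, which gives 2 x 2 = 4 paths per host.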

Wow, I think we'll need to send you a consulting fee after this one. I guess some points will have to do.

Since you are using 2 NICs in the same vSwitch, you can team or bond them together. They can either be teamed to provide active/passive redundancy, or they can be active/active. To make the best active/active use, the connections should go to the same switch and be configured in a trunk or EtherChannel. That way, a VM can use both NICs at the same time. But that's a story for another day, I think.

-KjB

VMware vExpert
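
As a rough illustration of why IP hash lets one VM use both NICs, the sketch below picks an uplink from a hash of the source and destination addresses. The XOR-and-modulo formula is a simplified assumption for illustration and is not claimed to be the exact calculation ESX performs. The uplink names and IP addresses are hypothetical as well.

```python
import ipaddress

def pick_uplink(src_ip, dst_ip, uplinks):
    """Choose an uplink from a hash of the source/destination IP pair.

    Simplified illustration only: XOR the two addresses and take the
    result modulo the number of uplinks.
    """
    src = int(ipaddress.ip_address(src_ip))
    dst = int(ipaddress.ip_address(dst_ip))
    return uplinks[(src ^ dst) % len(uplinks)]

uplinks = ["vmnic0", "vmnic1"]  # hypothetical names for the two teamed NICs
vm_ip = "172.16.1.50"           # hypothetical VM address

for client_ip in ["172.16.1.20", "172.16.1.21", "172.16.1.22"]:
    print(client_ip, "->", pick_uplink(vm_ip, client_ip, uplinks))
```

A given source/destination pair always maps to the same uplink, so a single conversation never exceeds one NIC's bandwidth. A VM talking to several clients can still spread its traffic across both NICs, which is the active/active behavior described above.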

I don't understand the VMotion network, or the management network. In another thread, Dave Mishchenko said the following about them:

Let's say your management network is 172.16.1.0/24. The vCenter server could be at 172.16.1.1, ESX1 at .2, ESX2 at .3, etc. For the ESXi hosts, those would be the management IP addresses. For the VMotion subnet, you can pick any subnet that you don't currently use, and only the ESXi hosts would have an IP address on that subnet. The vCenter server doesn't have to have an IP on that subnet.

I'm sorry Dave, but I still don't get it. If the management address for the host is in the 172.16 range, how will it also have an IP on the VMotion subnet?

And for that matter, how would I connect to the VCenter Server from my workstation, when it is on a separate subnet? Do I have to put a second NIC in my workstation or something?
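
One way to picture the addressing scheme Dave describes is as a simple table in which each ESXi host holds two addresses, one per subnet, while the vCenter server holds only a management address. The sketch below builds such a plan. The VMotion subnet 192.168.100.0/28 is an assumption borrowed from earlier in this thread, and the names `esx1` and `esx2` are placeholders.

```python
import ipaddress

mgmt_net = ipaddress.ip_network("172.16.1.0/24")        # from the quoted example
vmotion_net = ipaddress.ip_network("192.168.100.0/28")  # assumed; any unused subnet works

mgmt_hosts = list(mgmt_net.hosts())
vmotion_hosts = list(vmotion_net.hosts())

# vCenter gets 172.16.1.1 and has no address on the VMotion subnet.
plan = {"vcenter": {"management": str(mgmt_hosts[0])}}

# Each ESXi host gets one address per subnet, on separate interfaces.
for i, name in enumerate(["esx1", "esx2"]):
    plan[name] = {
        "management": str(mgmt_hosts[i + 1]),  # 172.16.1.2, 172.16.1.3
        "vmotion": str(vmotion_hosts[i]),      # 192.168.100.1, 192.168.100.2
    }

for name, addresses in plan.items():
    print(name, addresses)
```

In this picture, a workstation only needs to reach the management subnet to talk to the vCenter server. The VMotion subnet is used for host-to-host traffic only.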