VMware Technology Network > Digital Workspace > Horizon > Horizon Desktops and Apps

Why is the Linked Clone disk so slow?


Hopefully someone has the answer, or some advice for getting better performance from VMware View Linked Clone VMs. I'm trying to figure out why disk performance is so much slower on a Linked Clone disk than on a normal disk. Compare the two simple tests below, both run with Iometer using the same configuration (4K, 80% write, 100% random). The first test runs on the Linked Clone disk (C:\) and the second on a normal disk added to the same VM on the same datastore.

| Metric | C:\ (Linked Clone) | D:\ (normal thin-provisioned disk, same datastore) |
|---|---|---|
| IOPS | 376 | 3369 |
| Throughput | 1.6 MB/s | 13.8 MB/s |
| Latency | 25 ms | 3.0 ms |
| CPU | 62% | 13.5% |

Latency is almost 10 times higher and IOPS almost 10 times lower on the Linked Clone disk. I have attached the Iometer results together with the esxtop results. esxtop shows the same poor numbers, but strangely the VM performs a lot of reads during the Linked Clone test. CBRC is enabled on this pool, but I don't think it has any effect, since the Iometer target is on the delta disk and not on the digest disk.
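Iometer keeps a fixed number of I/Os in flight per target (the "Number of outstanding IOs" setting, 10 for Worker 1 in the config posted later in this thread). In that closed-loop setup, Little's law gives mean latency ≈ outstanding I/Os / IOPS, so a 10x drop in IOPS and a 10x rise in latency are one symptom rather than two. The sketch below checks the quoted figures, assuming (the first post does not say so) that these runs also used 10 outstanding I/Os; the helper names are hypothetical.

```python
# Sanity check of the C:\ vs D:\ Iometer figures quoted above.
BLOCK_SIZE_BYTES = 4096  # 4K transfers, from the access spec
OUTSTANDING_IOS = 10     # ASSUMPTION: Worker 1's queue depth in the later config


def throughput_mb_s(iops: float, block_size: int = BLOCK_SIZE_BYTES) -> float:
    """Decimal MB/s, i.e. IOPS * bytes per I/O / 10**6."""
    return iops * block_size / 1_000_000


def expected_latency_ms(iops: float, outstanding: int = OUTSTANDING_IOS) -> float:
    """Little's law with a fixed number of I/Os in flight: W = N / X."""
    return outstanding / iops * 1000.0


if __name__ == "__main__":
    for label, iops in (("C:\\ linked clone", 376), ("D:\\ normal disk", 3369)):
        print(f"{label}: {throughput_mb_s(iops):.2f} MB/s, "
              f"~{expected_latency_ms(iops):.1f} ms")
```

This prints about 1.54 MB/s and 26.6 ms for the linked clone and 13.80 MB/s and 3.0 ms for the normal disk, close to the measured values, which suggests the two runs differ in per-I/O service time rather than in queue depth.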

I'm pretty sure the problem is not the hardware, but just in case, here is the setup:

- ESXi 5.5 (Dell PowerEdge R620)
- EqualLogic PS6100XV
- 4 x 1 Gb/s iSCSI paths
- View Horizon 5.3.1
- vCenter 5.5
- Windows 7 x64 base image, 2 vCPU

Accepted Solutions


Solved!

In the end, the problem came from the parent image: its virtual hardware version was 8. After upgrading it to hardware version 10 and recomposing the pool, the problem is gone.

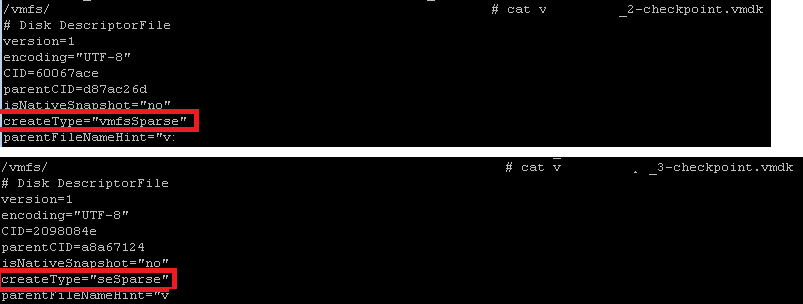

Since View 5.2 there is a new disk type for linked clone disks: the SE Sparse (Space-Efficient Sparse) disk. It is meant to improve storage efficiency, particularly space reclamation, but it also handles write I/O better by addressing alignment issues. It is the default disk type for linked clones, BUT only when the parent image is on hardware version 9 or later...
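The rule described above can be written as a small decision function, which is handy when auditing many parent images. This is a hypothetical helper that encodes only the two conditions stated in this post (View 5.2 or later, parent hardware version 9 or later); other prerequisites may apply in practice. The label for the fallback format assumes the older redo-log style sparse format usually called VMFSsparse.

```python
def expected_delta_format(view_version: tuple[int, int], hw_version: int) -> str:
    """Hypothetical helper: which delta disk format a linked clone should get.

    Encodes only the conditions stated in this thread: SE Sparse is used by
    View 5.2+ when the parent image is on virtual hardware version 9 or later.
    """
    if view_version >= (5, 2) and hw_version >= 9:
        return "SE Sparse"
    # ASSUMPTION: otherwise the clone falls back to the older sparse format.
    return "legacy sparse (VMFSsparse)"


# The original poster's case: View 5.3, parent on hardware version 8, then 10.
for hw in (8, 10):
    print(hw, expected_delta_format((5, 3), hw))
```

Comparing Python tuples keeps the version check correct across major and minor numbers, e.g. (5, 10) is later than (5, 2).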

The results are now fine with the same VDI test, and the %VMWAIT metric is back to normal:

We can see the different delta disk types for hardware version 8 (first) and hardware version 10 (second):


A couple of observations:

- Your %VMWAIT time on the linked clone run is very high. 90% means that most of the time something is choking CPU availability. Notice that on your normal run it's a lean 1%.

- Are you sure you're using exactly the same Iometer profile? On your linked clone run the HBA shows 60% reads / 40% writes, while on your "normal disk" run it shows 20% reads / 80% writes. That makes no sense if the profiles are the same. Can you post the exact profile you're using?

- You have multiple VMs running on this host, at least according to esxtop. Are they on the same LUN and host you're testing on? If so, they may be adding load and muddying your results. I usually run these tests with a single VM with many workers. Check out this Iometer profile from the Atlantis folks for a standardized VDI run: VDI Performance - Using Iometer to Simulate a Desktop Workload | Atlantis Computing Blog

It sounds to me like something in your test configuration is biting you. Isolate the test to a single host and datastore, and double-check the Iometer profile.

Edit: If you're using Iometer to measure the total available performance, make sure the datastore has no other load on it.
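The profile check suggested above boils down to comparing the read share the host actually saw with the read share Iometer was told to issue. A minimal sketch, assuming you copy the rates by hand from esxtop's READS/s and WRITES/s columns; the function names and the 5-point tolerance are hypothetical choices, and the example inputs are simply the 60/40 and 20/80 splits quoted above, scaled to 100 I/Os.

```python
def read_pct(reads_per_s: float, writes_per_s: float) -> float:
    """Share of reads, in percent, from esxtop-style read and write rates."""
    total = reads_per_s + writes_per_s
    if total == 0:
        raise ValueError("no I/O observed")
    return 100.0 * reads_per_s / total


def matches_profile(reads_per_s: float, writes_per_s: float,
                    expected_read_pct: float, tolerance: float = 5.0) -> bool:
    """True if the observed read share is within `tolerance` points of the profile."""
    return abs(read_pct(reads_per_s, writes_per_s) - expected_read_pct) <= tolerance


# The VDI profile asks for 20% reads.
print(matches_profile(60, 40, expected_read_pct=20))  # linked clone run
print(matches_profile(20, 80, expected_read_pct=20))  # normal disk run
```

A mismatch only says the storage saw a different mix than Iometer issued; by itself it does not tell you whether the profile or the disk layer underneath generated the extra reads.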


Your questions helped me dig deeper into this. I had never really used esxtop, but that's a good point: %VMWAIT is very high with the linked clone disk. Why?

I ran more tests, this time in a completely isolated environment: a non-production ESXi server and a new VMware View platform (vCenter, Composer, Connection Server). The shared datastore is on an isolated SAN with no other connections to it.

I used this Iometer configuration (the complete config is attached below):

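Access specification VDI (default assignment NONE):

| size | % of size | % reads | % random | delay | burst | align | reply |
|---|---|---|---|---|---|---|---|
| 4096 | 100 | 20 | 100 | 0 | 1 | 4096 | 0 |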
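Each Iometer access specification line is a comma-separated record in the column order given by its header (size, % of size, % reads, % random, delay, burst, align, reply). A minimal parser makes the VDI line above explicit: 4 KiB transfers, 20% reads (so 80% writes), fully random, aligned on 4096 bytes. The class and property names are hypothetical, not part of Iometer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessSpecLine:
    """One Iometer access spec line, in the header's column order."""
    size: int         # transfer size in bytes
    pct_of_size: int  # share of this line within the access spec
    pct_reads: int    # percentage of reads (the rest are writes)
    pct_random: int   # percentage of random (vs sequential) I/O
    delay: int        # transfer delay between bursts
    burst: int        # I/Os per burst
    align: int        # I/O alignment in bytes
    reply: int        # reply size (0 = no reply)

    @classmethod
    def parse(cls, line: str) -> "AccessSpecLine":
        fields = [int(f) for f in line.strip().split(",")]
        if len(fields) != 8:
            raise ValueError(f"expected 8 fields, got {len(fields)}")
        return cls(*fields)

    @property
    def pct_writes(self) -> int:
        return 100 - self.pct_reads

    @property
    def is_aligned(self) -> bool:
        """True when the transfer size is a whole multiple of the alignment."""
        return self.align > 0 and self.size % self.align == 0


spec = AccessSpecLine.parse("4096,100,20,100,0,1,4096,0")
print(spec.pct_writes, spec.pct_random, spec.is_aligned)
```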

The results are identical: the linked clone disk is much slower and the %VMWAIT metric is very high. Something under the hood of the linked clone mechanism slows down I/O. The problem comes from write I/O, not reads: something happens when the linked clone VM writes to disk. Since writes can't go to the read-only replica, they are redirected to the delta disk, and this seems to consume a lot of resources. Or have I misunderstood the results?
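The redirect-on-write behaviour described above can be illustrated with a deliberately simplified model. It is NOT VMware's on-disk format (no grain tables, no metadata journaling, nothing specific to SE Sparse or VMFSsparse); the class name and the 4 KiB grain size are assumptions for illustration only. It shows that reads fall through to the replica until a region is written, and that the first write to each region adds an allocation step on top of the data write itself.

```python
class ToyLinkedClone:
    """Simplified redirect-on-write model; NOT VMware's actual disk format."""

    def __init__(self, replica: dict[int, bytes], grain: int = 4096):
        self.replica = replica              # read-only base image: grain index -> data
        self.delta: dict[int, bytes] = {}   # per-clone writable layer
        self.grain = grain                  # ASSUMPTION: 4 KiB allocation unit
        self.allocations = 0                # grains newly allocated in the delta

    def write(self, offset: int, data: bytes) -> None:
        # The toy model only accepts grain-aligned, grain-sized writes.
        if offset % self.grain or len(data) != self.grain:
            raise ValueError("only aligned, grain-sized writes are modelled")
        idx = offset // self.grain
        if idx not in self.delta:
            self.allocations += 1           # first write: allocate a grain in the delta
        self.delta[idx] = data              # the replica is never modified

    def read(self, offset: int) -> bytes:
        idx = offset // self.grain
        if idx in self.delta:
            return self.delta[idx]          # data already redirected to the delta
        return self.replica.get(idx, bytes(self.grain))  # fall through to replica


clone = ToyLinkedClone({0: b"A" * 4096})
clone.write(0, b"B" * 4096)
clone.write(0, b"C" * 4096)
print(clone.allocations)  # rewrites of an allocated grain do not allocate again
```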

I've attached the results. To compare and to rule out the SAN/iSCSI components, I ran the same test on the local datastore. The same thing happens: linked clones are very slow when writes are committed.

share_store_LINKED_VDI_IO_test.png

share_store_NOT_LINKED_VDI_IO_test.png

local_store_LINKED_VDI_IO_test.png

Version 1.1.0

'TEST SETUP ====================================================================

'Test Description

'Run Time

' hours minutes seconds

0 0 0

'Ramp Up Time (s)

0

'Default Disk Workers to Spawn

NUMBER_OF_CPUS

'Default Network Workers to Spawn

0

'Record Results

ALL

'Worker Cycling

' start step step type

1 1 LINEAR

'Disk Cycling

' start step step type

1 1 LINEAR

'Queue Depth Cycling

' start end step step type

1 32 2 EXPONENTIAL

'Test Type

NORMAL

'END test setup

'RESULTS DISPLAY ===============================================================

'Record Last Update Results,Update Frequency,Update Type

DISABLED,1,WHOLE_TEST

'Bar chart 1 statistic

Total I/Os per Second

'Bar chart 2 statistic

Total MBs per Second (Decimal)

'Bar chart 3 statistic

Average I/O Response Time (ms)

'Bar chart 4 statistic

Maximum I/O Response Time (ms)

'Bar chart 5 statistic

% CPU Utilization (total)

'Bar chart 6 statistic

Total Error Count

'END results display

'ACCESS SPECIFICATIONS =========================================================

'Access specification name,default assignment

VDI,NONE

'size,% of size,% reads,% random,delay,burst,align,reply

4096,100,20,100,0,1,4096,0

'END access specifications

'MANAGER LIST ==================================================================

'Manager ID, manager name

1,XXX

'Manager network address

'Worker

Worker 1

'Worker type

DISK

'Default target settings for worker

'Number of outstanding IOs,test connection rate,transactions per connection,use fixed seed,fixed seed value

10,DISABLED,1,DISABLED,0

'Disk maximum size,starting sector,Data pattern

6144000,0,0

'End default target settings for worker

'Assigned access specs

'End assigned access specs

'Target assignments

'Target

C: "OS"

'Target type

DISK

'End target

'End target assignments

'End worker

'Worker

Worker 2

'Worker type

DISK

'Default target settings for worker

'Number of outstanding IOs,test connection rate,transactions per connection,use fixed seed,fixed seed value

1,DISABLED,1,DISABLED,0

'Disk maximum size,starting sector,Data pattern

0,0,0

'End default target settings for worker

'Assigned access specs

'End assigned access specs

'Target assignments

'End target assignments

'End worker

'End manager

'END manager list

Version 1.1.0
