VMware Technology Network > Cloud & SDDC > ESXi > ESXi Discussions


consolidation failed - insufficient disk space

Using ESXi 6.7, VCSA 6.7 and Veeam Backup & Replication 11: a Veeam VMware backup job with Changed Block Tracking enabled failed a few days ago on a host at a remote location. The VMDK SEsparse files have grown large since then, and the original disk provisioning layout wasn't ideal. The datastore ran out of space, which caused a VM to go offline. Initially no snapshots were shown in the web interface's Snapshot Manager, but VCSA displays the "consolidation is needed" notice.

87GB has been freed up so the VM can run. This was done by relocating its 80GB C: VMDK to another datastore, and by relocating and re-registering the .vmx, which freed the 12GB that the .vswp file consumed.

A disk consolidation attempt, made after creating a snapshot from the local ESXi web interface while the VM was powered on, showed 0% progress for ~20 minutes and then failed with "insufficient disk space". Running df -h or du -h -s . periodically while it was in progress showed no decrease in free space; the number always stayed at ~87GB. "Delete all" succeeded and removed the snapshot entry from the Snapshot Manager, but didn't actually remove the SEsparse files. Trying consolidation again from VCSA gave the same result: 0% progress for ~20 minutes, followed by an "insufficient disk space" failure.

My understanding is that the sdelete + vmkfstools -K (punchzero) approach to reclaiming space on a thin VMDK is not an option unless the snapshots are consolidated first. Consolidation will be attempted again after hours with the VM powered off, but I'm assuming it may still fail because of the size of the deltas at this point. Any suggestions to resolve this other than migrating to a new datastore?

```
[root@ESXi:/] df -h
Filesystem   Size   Used Available Use% Mounted on
VMFS-6     224.8G 128.5G     96.2G  57% /vmfs/volumes/datastore1
VMFS-6       7.3T   7.2T     87.5G  99% /vmfs/volumes/datastore2
```

The 7.3TB capacity of datastore2 cannot be increased or expanded.

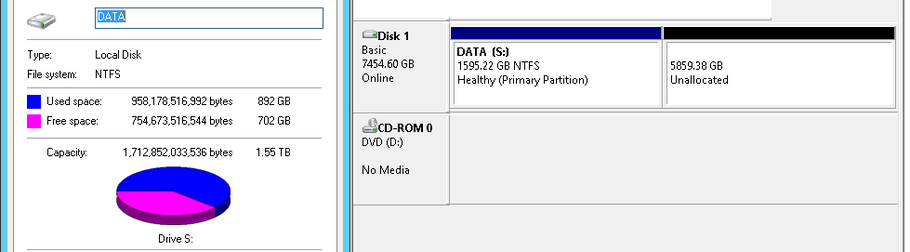

- Windows NTFS volume usage: 892GB used on a 1.5TB partition
- VMDK: thin provisioned at 7.28TB, with 5.6TB actually used by the base VMDK
- Disk usage of the largest SEsparse file: 1.6TB
- Total disk usage of the VMDK and SEsparse files (from du -h -s .): 7.2T
- Datastore free space: 87GB
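As a rough sanity check on the figures above, the following Python sketch estimates how much new space merging the large delta into the thin base disk could need in the worst case. It rests on several assumptions. The merge is assumed to write delta grains into the thin base in place. The listed sizes are treated as binary units. The smaller deltas, metadata and any helper snapshot ESXi may create are ignored. The helper name worst_case_growth is made up for illustration. In the best case almost no extra space is needed, because the delta may only overwrite blocks that are already allocated in the base. So this does not prove the consolidation must fail. It only shows that ~87GB of headroom leaves no margin for the worst case.

```python
# Figures from the first post, treated as binary units (TiB/GiB).
TIB = 1024 ** 4
GIB = 1024 ** 3

provisioned_base = 7.28 * TIB  # thin-provisioned size of the base VMDK
allocated_base = 5.6 * TIB     # space already allocated to the base VMDK
delta_data = 1.6 * TIB         # largest SEsparse delta (smaller ones ignored)
free_space = 87.5 * GIB        # free space on datastore2 according to df -h

def worst_case_growth(provisioned, allocated, delta):
    """Upper bound on new blocks a thin base disk may need when a delta is
    merged into it (assumption: in-place merge). Every delta grain could land
    on a region of the base that is not allocated yet, but the base can never
    grow past its provisioned size."""
    return min(delta, provisioned - allocated)

need = worst_case_growth(provisioned_base, allocated_base, delta_data)
print(f"worst-case extra space: {need / GIB:,.1f} GiB")
print(f"free space on datastore: {free_space / GIB:,.1f} GiB")
print("fits" if need <= free_space else "may not fit")
```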

Files of the VMDK that holds the Windows disk:

```
-rw------- 1 root root 7.3M Jun 3 16:44 data_5-000001-ctk.vmdk
-rw------- 1 root root 1.6T Jun 3 17:04 data_5-000001-sesparse.vmdk
-rw------- 1 root root  411 Apr 17 05:32 data_5-000001.vmdk
-rw------- 1 root root 29.6G Jun 3 17:23 data_5-000002-sesparse.vmdk
-rw------- 1 root root  326 Jun 3 17:08 data_5-000002.vmdk
-rw------- 1 root root 7.3M Jun 3 16:44 data_5-000003-ctk.vmdk
-rw------- 1 root root 29.4G Jun 3 17:04 data_5-000003-sesparse.vmdk
-rw------- 1 root root  418 May 27 02:03 data_5-000003.vmdk
-rw------- 1 root root 55.4G Jun 3 17:04 data_5-000004-sesparse.vmdk
-rw------- 1 root root  403 Jun 3 15:34 data_5-000004.vmdk
-rw------- 1 root root 7.3T Jun 3 13:13 data_5-flat.vmdk
-rw------- 1 root root  558 May 27 22:58 data_5.vmdk
```

Consolidation result:

Contents of the remaining .vmsd file in the folder:

```
.encoding = "UTF-8"
snapshot.lastUID = "39"
snapshot.needConsolidate = "TRUE"
```
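The following small Python sketch parses the .vmsd contents shown above. It illustrates how the Snapshot Manager can be empty while VCSA still asks for consolidation. It assumes the plain key = "value" line format and that individual snapshot records would appear under keys such as snapshot0.*, snapshot1.* and so on. For the file above, it finds no snapshot records but finds the needConsolidate flag set. That matches the situation described: delta files remain on disk without a snapshot entry that could be deleted. The function names are illustrative only.

```python
import re

# The .vmsd contents posted above.
VMSD_TEXT = '''.encoding = "UTF-8"
snapshot.lastUID = "39"
snapshot.needConsolidate = "TRUE"
'''

# key = "value", with optional whitespace around the equals sign.
LINE_RE = re.compile(r'^\s*([^=\s]+)\s*=\s*"(.*)"\s*$')

def parse_vmsd(text):
    """Parse key = "value" lines into a dict, with the quotes stripped."""
    entries = {}
    for line in text.splitlines():
        match = LINE_RE.match(line)
        if match:
            entries[match.group(1)] = match.group(2)
    return entries

def summarize(entries):
    """Count snapshot records and read the consolidation flag.

    Assumption: per-snapshot keys look like snapshot0.uid, snapshot1.uid, ...
    while global keys start with plain "snapshot." (no number)."""
    snapshot_ids = {key.split(".")[0] for key in entries
                    if re.match(r"snapshot\d+\.", key)}
    return {
        "snapshots_listed": len(snapshot_ids),
        "needs_consolidation": entries.get("snapshot.needConsolidate") == "TRUE",
    }

print(summarize(parse_vmsd(VMSD_TEXT)))
```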


Hi @tektotket

Using Storage vMotion to migrate the affected VM to another datastore has usually fixed consolidation issues in my environment.

I would also like to know what other options are available. Hopefully one of our pros can jump in.


At the remote office there is currently no other datastore that could be used as a destination for consolidating via vMotion or vmkfstools -i.


Has the Windows partition ever been resized, i.e. has the currently "Unallocated" space been used previously?

Are there other VMs on the datastore which could, if required, be deleted and later restored from the backup to free up disk space?

Please run ls -lisa *.vmdk in the VM's folder and post the output.

André


Regarding the unallocated space on the Windows drive: I don't know its history but will research that. There are no other VMs or folders under /vmfs/volumes/datastore2, although one .sf file is large enough to make me consider whether it can be removed. The VMDKs in the two datastore folders below are the only ones associated with the VM. The numeric _3 and _5 suffixes that ended up in the base VMDK names do not refer to the actual number of disks in the VM:

```
[root@ESXi:/vmfs/volumes/datastore2] ls -lisa
total 1861760
             4    1024 drwxr-xr-t 1 root root      73728 May 28 23:08 .
73689129449936       0 drwxr-xr-x 1 root root        512 Jun  3 19:58 ..
       4194308  126976 -r-------- 1 root root  129810432 Dec  1  2020 .fbb.sf
       8388612  132096 -r-------- 1 root root  134807552 Dec  1  2020 .fdc.sf
      33554436  263168 -r-------- 1 root root  268632064 Dec  1  2020 .jbc.sf
      25165828   17408 -r-------- 1 root root   16908288 Dec  1  2020 .pb2.sf
      12582916    1024 -r-------- 1 root root      65536 Dec  1  2020 .pbc.sf
      16777220 1311744 -r-------- 1 root root 1342898176 Dec  1  2020 .sbc.sf
      29360132    1024 drwx------ 1 root root      69632 Dec  1  2020 .sdd.sf
      20971524    7168 -r-------- 1 root root    7340032 Dec  1  2020 .vh.sf
          1668     128 drwxr-xr-x 1 root root      94208 Jun  3 17:08 data

[root@ESXi:/vmfs/volumes/datastore2] ls -alh
total 1861760
drwxr-xr-t 1 root root  72.0K May 28 23:08 .
drwxr-xr-x 1 root root    512 Jun  3 19:58 ..
-r-------- 1 root root 123.8M Dec  1  2020 .fbb.sf
-r-------- 1 root root 128.6M Dec  1  2020 .fdc.sf
-r-------- 1 root root 256.2M Dec  1  2020 .jbc.sf
-r-------- 1 root root  16.1M Dec  1  2020 .pb2.sf
-r-------- 1 root root  64.0K Dec  1  2020 .pbc.sf
-r-------- 1 root root   1.3G Dec  1  2020 .sbc.sf
drwx------ 1 root root  68.0K Dec  1  2020 .sdd.sf
-r-------- 1 root root   7.0M Dec  1  2020 .vh.sf
drwxr-xr-x 1 root root  92.0K Jun  3 17:08 data

[root@ESXi:/vmfs/volumes/datastore1/data] ls -lisa *.vmdk
   3204 77630464 -rw------- 1 root root 85899345920 Jun 3 19:53 data_3-flat.vmdk
4197508        0 -rw------- 1 root root         555 Jun 3 16:06 data_3.vmdk

[root@ESXi:/vmfs/volumes/datastore2/data] ls -lisa *.vmdk
 29362180       8192 -rw------- 1 root root       7634148 Jun  3 16:44 data_5-000001-ctk.vmdk
159385604 1684912128 -rw------- 1 root root 1738003193856 Jun  3 17:04 data_5-000001-sesparse.vmdk
163579908          0 -rw------- 1 root root           411 Apr 17 05:32 data_5-000001.vmdk
  4196356     302080 -rw------- 1 root root   31817474048 Jun  3 19:42 data_5-000002-sesparse.vmdk
 46139396          0 -rw------- 1 root root           326 Jun  3 17:08 data_5-000002.vmdk
113248260       8192 -rw------- 1 root root       7634148 Jun  3 16:44 data_5-000003-ctk.vmdk
104859652      23552 -rw------- 1 root root   31535931392 Jun  3 17:04 data_5-000003-sesparse.vmdk
109053956          0 -rw------- 1 root root           418 May 27 02:03 data_5-000003.vmdk
155191300   29773824 -rw------- 1 root root   59441000448 Jun  3 17:04 data_5-000004-sesparse.vmdk
167774212          0 -rw------- 1 root root           403 Jun  3 15:34 data_5-000004.vmdk
 16779268 6003804160 -rw------- 1 root root 8004444650496 Jun  3 13:13 data_5-flat.vmdk
 20973572          0 -rw------- 1 root root           558 May 27 22:58 data_5.vmdk
```
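Two columns of the ls -lisa output above matter here. The second column is the space actually allocated, in 1 KiB blocks on this host. The "total 1861760" line of the datastore2 listing equals the sum of that column. The size column is the apparent, or provisioned, size in bytes. The short Python sketch below sums both for datastore2/data and reproduces the figures from the first post: ~5.59 TiB allocated in the flat file, ~1.60 TiB in the SEsparse deltas, and ~7.19 TiB overall. The helper name parse_ls_lisa is illustrative, and the parser assumes exactly the 11-column layout shown here.

```python
# Rows copied from the `ls -lisa *.vmdk` listing of datastore2/data above.
LS_OUTPUT = """\
29362180 8192 -rw------- 1 root root 7634148 Jun 3 16:44 data_5-000001-ctk.vmdk
159385604 1684912128 -rw------- 1 root root 1738003193856 Jun 3 17:04 data_5-000001-sesparse.vmdk
163579908 0 -rw------- 1 root root 411 Apr 17 05:32 data_5-000001.vmdk
4196356 302080 -rw------- 1 root root 31817474048 Jun 3 19:42 data_5-000002-sesparse.vmdk
46139396 0 -rw------- 1 root root 326 Jun 3 17:08 data_5-000002.vmdk
113248260 8192 -rw------- 1 root root 7634148 Jun 3 16:44 data_5-000003-ctk.vmdk
104859652 23552 -rw------- 1 root root 31535931392 Jun 3 17:04 data_5-000003-sesparse.vmdk
109053956 0 -rw------- 1 root root 418 May 27 02:03 data_5-000003.vmdk
155191300 29773824 -rw------- 1 root root 59441000448 Jun 3 17:04 data_5-000004-sesparse.vmdk
167774212 0 -rw------- 1 root root 403 Jun 3 15:34 data_5-000004.vmdk
16779268 6003804160 -rw------- 1 root root 8004444650496 Jun 3 13:13 data_5-flat.vmdk
20973572 0 -rw------- 1 root root 558 May 27 22:58 data_5.vmdk
"""

KIB = 1024
TIB = 1024 ** 4

def parse_ls_lisa(text):
    """Return (name, allocated_bytes, apparent_bytes) for each file row.

    Assumes the 11-column layout shown above: inode, blocks (1 KiB units),
    mode, links, owner, group, size in bytes, month, day, time, name."""
    rows = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) != 11:
            continue  # skip blank or unexpected lines
        rows.append((fields[10], int(fields[1]) * KIB, int(fields[6])))
    return rows

rows = parse_ls_lisa(LS_OUTPUT)
for name, allocated, apparent in rows:
    print(f"{name:30} allocated {allocated / TIB:7.3f} TiB"
          f"  apparent {apparent / TIB:7.3f} TiB")

total = sum(allocated for _, allocated, _ in rows)
deltas = sum(allocated for name, allocated, _ in rows
             if name.endswith("-sesparse.vmdk"))
print(f"total allocated: {total / TIB:.2f} TiB, "
      f"in SEsparse deltas: {deltas / TIB:.2f} TiB")
```

The same numbers show that data_5-000003-sesparse.vmdk has an apparent size of ~31.5GB but only ~23MiB allocated. The apparent sizes from ls -lh therefore overstate what the smaller deltas actually occupy on the datastore.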


Regarding the partitioning of the Windows S: drive: it has not been resized from within Windows. It's unknown why the VMDK's actual disk usage is so high.


According to the output, the base/flat disk consumes ~5.6TB, so it looks like at least 4TB of disk space is used by the "Unallocated" part.
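The "at least 4TB" estimate follows from a simple bound. Even if every block of the 1.5TB S: partition were allocated in the flat file, the rest of the ~5.59 TiB allocation would have to lie outside that partition. Below is a Python sketch of that arithmetic. It assumes Windows' "1.5TB" is a binary figure (TiB) and ignores the small system or reserved partitions that may also sit on the disk.

```python
TIB = 1024 ** 4

# Allocated size of data_5-flat.vmdk: column 2 of `ls -lisa` is in 1 KiB blocks.
flat_allocated = 6003804160 * 1024
# Size of the S: partition as reported by Windows (assumed to be TiB).
partition_size = 1.5 * TIB

# Even if every block inside the partition were allocated in the flat file,
# whatever remains must belong to the region outside the partition.
outside_lower_bound = flat_allocated - partition_size

print(f"allocated outside the S: partition, at least: "
      f"{outside_lower_bound / TIB:.2f} TiB")
```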

There may be some tricks to try to reduce the flat file's disk consumption, but they are risky.

Is there any chance to temporarily mount another disk/datastore to the host (this could be an NFS share on a system with sufficient free disk space)? That would allow you to migrate/clone the virtual disk, delete the snapshots, then zero out the Unallocated part, and finally migrate the virtual disk back to the original datastore.

Other than this, one option may be to shut down the VM, run a backup, remove/delete the large virtual disk from the VM, add a new, smaller virtual disk to the VM, and finally run a file-level restore of your S: partition from the backup. I have no idea how long such a file-level restore will take, though.

Regarding the .sf files: do not touch them; they are part of the VMFS file system.

André


Thinking more about backup and restore: what should also work is to back up the VM using Veeam, then delete the complete VM from disk and restore it from the backup. This should be much faster than a file-level restore, and it will leave you with consolidated snapshots. That should then also allow you to zero out the "Unallocated" part to reduce the space usage on the datastore.

André


The feedback is appreciated. No hardware resources are available at this remote office right now to mount internally or via NFS. The ESXi host reaches the Veeam server, which is in another part of the country, over a 100Mb/50Mb broadband connection with ~50ms latency. The VM is in production use during daytime hours, which complicates this. What we may do is procure an internal SAS or SATA drive and add another datastore to the server. It would serve as a destination for vMotion, for consolidation with vmkfstools, or for copying data directly between NTFS volumes.
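To give a sense of scale for a remote restore, here is a back-of-the-envelope Python estimate of raw transfer time over the broadband link mentioned above. It is a sketch under stated assumptions. It assumes the full 100 Mbit/s is available toward the ESXi host at line rate. It ignores compression, deduplication, protocol overhead and whatever block selection Veeam applies. Real restore times could therefore differ considerably in either direction. transfer_hours is a made-up helper name, and the two sizes are taken from figures earlier in the thread.

```python
def transfer_hours(size_bytes, link_mbit_per_s, efficiency=1.0):
    """Hours needed to move size_bytes over a link of link_mbit_per_s
    megabits per second, scaled by an efficiency factor (1.0 = line rate)."""
    bits = size_bytes * 8
    seconds = bits / (link_mbit_per_s * 1_000_000 * efficiency)
    return seconds / 3600

GB = 1000 ** 3  # decimal gigabyte, to match the link's decimal megabits

# Hypothetical restore sizes: the NTFS data on S:, and the flat file's allocation.
for label, size in [("S: data used by NTFS (892 GB)", 892 * GB),
                    ("base disk as allocated (~6.1 TB)", 6148 * GB)]:
    print(f"{label}: ~{transfer_hours(size, 100):.0f} h at 100 Mbit/s line rate")
```

At line rate, this comes to roughly 20 hours for the 892GB of NTFS data and roughly 137 hours for the whole allocated base disk. If only the 50 Mbit/s direction were usable, both figures would double.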

Follow-up update: as guessed, attempting consolidation gives the same result with the VM powered off. The other options will be reviewed.