
Unable to create Filesystem, please see VMkernel log for more details: Failed to create VMFS on device naa.6589cfc00000022d3b9872e459f4b72c:4

I use FreeNAS 11.2 to share a disk over iSCSI.

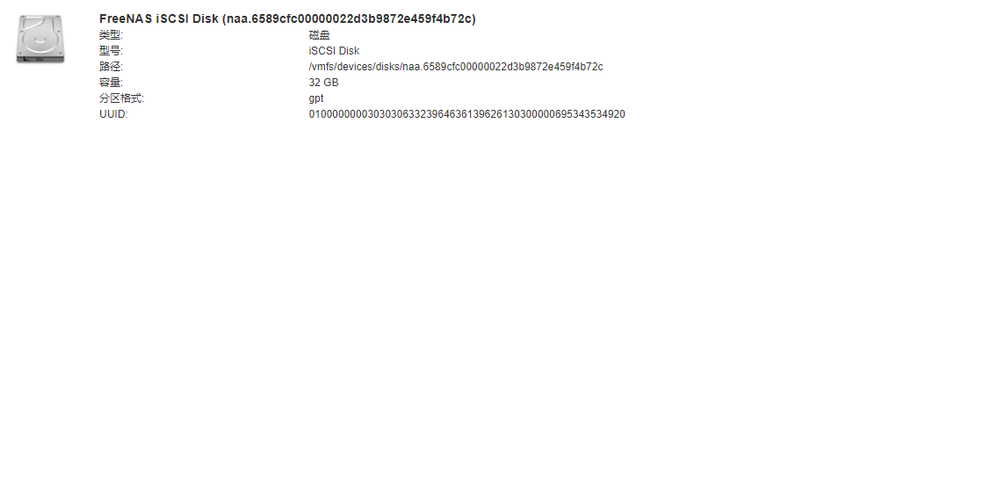

I configured the iSCSI settings on ESXi, and I can then see the FreeNAS iSCSI disk under Storage > Devices. I am using ESXi 6.7 Update 2.

I tried to use the FreeNAS iSCSI disk to create a VMFS datastore. At the last step, when I clicked 'Finish', the following error occurred:

Unable to create Filesystem, please see VMkernel log for more details: Failed to create VMFS on device naa.6589cfc00000022d3b9872e459f4b72c:4

And here is /var/log/vmkernel.log:

2020-01-09T12:55:03.264Z cpu2:2143383 opID=347e7229)World: 11943: VC opID esxui-65b-b200 maps to vmkernel opID 347e7229

2020-01-09T12:55:03.264Z cpu2:2143383 opID=347e7229)NVDManagement: 1461: No nvdimms found on the system

2020-01-09T12:55:20.657Z cpu5:2143385 opID=ad32fa9a)World: 11943: VC opID esxui-a200-b20b maps to vmkernel opID ad32fa9a

2020-01-09T12:55:20.657Z cpu5:2143385 opID=ad32fa9a)LVM: 10410: Initialized naa.6589cfc00000022d3b9872e459f4b72c:5, devID 5e1722b8-bb3fa3b9-35c3-001018c01de0

2020-01-09T12:55:20.663Z cpu5:2143385 opID=ad32fa9a)LVM: 10504: Zero volumeSize specified: using available space (34339815936).

2020-01-09T12:55:20.678Z cpu5:2143385 opID=ad32fa9a)WARNING: Vol3: 3155: aa/5e1722b8-bf7b8be8-c4d8-001018c01de0: Invalid CG offset 65536

2020-01-09T12:55:20.678Z cpu5:2143385 opID=ad32fa9a)FSS: 2350: Failed to create FS on dev [5e1722b8-ac2c993e-4741-001018c01de0] fs [aa] type [vmfs6] fbSize 1048576 => Bad parameter

2020-01-09T12:55:23.663Z cpu5:2143067)LVM: 16760: One or more LVM devices have been discovered.

How can I solve this problem?
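
When posting a log like this, it can help to trim vmkernel.log down to the one failing operation first. The sketch below is a minimal POSIX-shell example, assuming (as in the log above) that each line carries the operation ID in the form `opID=<id>)`; the helper names `vmfs_op_lines` and `vmfs_problems` are hypothetical, not ESXi tools.

```shell
#!/bin/sh
# Hypothetical helper: print only the vmkernel.log lines that belong to one
# operation ID (e.g. the failed datastore creation).
# Usage: vmfs_op_lines <opID> [logfile]   (reads stdin if no file is given)
vmfs_op_lines() {
    op_id="$1"
    shift
    # Fixed-string match on "opID=<id>)" as it appears in each log line.
    grep -F "opID=${op_id})" "$@"
}

# Hypothetical helper: keep only warnings and failures, which usually
# carry the actual reason (e.g. "Invalid CG offset", "Bad parameter").
# Usage: vmfs_problems [logfile]   (reads stdin if no file is given)
vmfs_problems() {
    grep -E 'WARNING|Failed' "$@"
}
```

For example, `vmfs_op_lines ad32fa9a /var/log/vmkernel.log | vmfs_problems` would keep only the "Invalid CG offset" warning and the "Failed to create FS ... Bad parameter" line from the attempt above.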

---

Welcome to the Community,

According to the error message and the log file, the iSCSI LUN seems to already contain partitions. A VMFS datastore can only be created on a new/blank LUN, so unless the LUN or its existing partitions contain required data, delete the existing partition table and try to create the VMFS datastore again.

André
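
Deleting the existing partitions can be done from the ESXi shell with `partedUtil`. As a cautious sketch, assuming the `getptbl` output layout shown later in this thread (label on line 1, geometry on line 2, one partition per following line), the hypothetical helper below only prints one `partedUtil delete <device> <N>` command per existing partition, so the list can be reviewed before anything is deleted.

```shell
#!/bin/sh
# Hypothetical helper: turn `partedUtil getptbl <device>` output (on stdin)
# into matching `partedUtil delete <device> <N>` commands.
# It only PRINTS the commands; deleting a partition destroys its data.
# Assumed getptbl layout:
#   line 1 : label type (e.g. "gpt")
#   line 2 : geometry (cylinders heads sectors-per-track total-sectors)
#   line 3+: one partition per line, partition number in field 1
partition_delete_cmds() {
    device="$1"
    awk -v dev="$device" 'NR > 2 && NF > 0 { printf "partedUtil delete %s %s\n", dev, $1 }'
}

# Usage (review the printed commands before running them):
#   dev=/vmfs/devices/disks/naa.6589cfc00000022d3b9872e459f4b72c
#   partedUtil getptbl "$dev" | partition_delete_cmds "$dev"
```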

---

Hi, thank you for your reply.

I deleted the existing partition table and tried again.

ESXi creates the VMFS partition successfully, but fails to create the VMFS datastore.

The error message is:

Unable to create Filesystem, please see VMkernel log for more details: Failed to create VMFS on device naa.6589cfc00000022d3b9872e459f4b72c:1

And /var/log/vmkernel.log:

2020-01-10T00:18:34.329Z cpu0:2099535)LVM: 16760: One or more LVM devices have been discovered.

2020-01-10T00:18:52.923Z cpu2:2143306 opID=217d23da)World: 11943: VC opID esxui-9930-b8ea maps to vmkernel opID 217d23da

2020-01-10T00:18:52.923Z cpu2:2143306 opID=217d23da)NVDManagement: 1461: No nvdimms found on the system

2020-01-10T00:21:16.814Z cpu1:2097694)DVFilter: 5964: Checking disconnected filters for timeouts

2020-01-10T00:22:11.912Z cpu7:2143354 opID=b3fe4a99)World: 11943: VC opID esxui-fc51-b907 maps to vmkernel opID b3fe4a99

2020-01-10T00:22:11.912Z cpu7:2143354 opID=b3fe4a99)NVDManagement: 1461: No nvdimms found on the system

2020-01-10T00:25:30.710Z cpu3:2143307 opID=c10466c5)World: 11943: VC opID esxui-c6f2-b93c maps to vmkernel opID c10466c5

2020-01-10T00:25:30.710Z cpu3:2143307 opID=c10466c5)LVM: 10410: Initialized naa.6589cfc00000022d3b9872e459f4b72c:1, devID 5e17c47a-f14ff86f-135c-001018c01de0

2020-01-10T00:25:30.716Z cpu2:2143307 opID=c10466c5)LVM: 10504: Zero volumeSize specified: using available space (34339815936).

2020-01-10T00:25:30.727Z cpu2:2143307 opID=c10466c5)WARNING: Vol3: 3155: aa/5e17c47a-f4a1f111-1174-001018c01de0: Invalid CG offset 65536

2020-01-10T00:25:30.727Z cpu2:2143307 opID=c10466c5)FSS: 2350: Failed to create FS on dev [5e17c47a-e3a4d532-e191-001018c01de0] fs [aa] type [vmfs6] fbSize 1048576 => Bad parameter

2020-01-10T00:25:33.714Z cpu0:2100152)LVM: 16760: One or more LVM devices have been discovered.

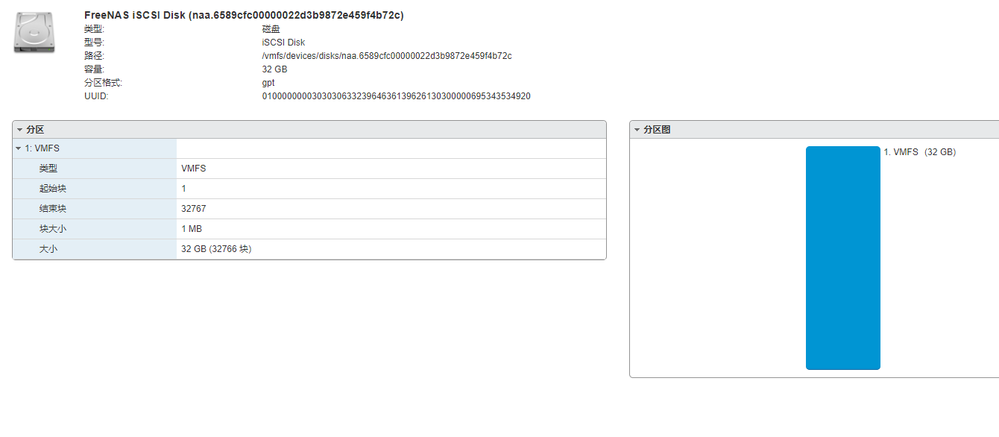

Please see the following pictures.

Before:

After:

---

Hey yangzhenginfo

This would happen if you have one or more extents in an offline state; you can run vmkfstools -q to verify this.

Try re-creating the LUN with a single extent, and then expand it with the other extent.

---

Hello,thank you for your reply.

emmm,How to use command ' vmkfstools -q'?

I delete the old one,and create a new one.Still not work.

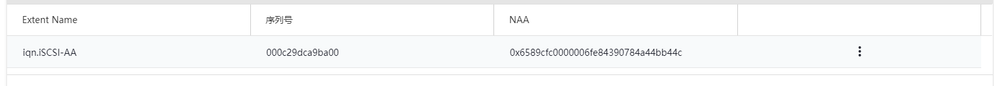

There is only one LUN with a single extent.Please see the pictutes.

---

I assume that you've configured the FreeNAS iSCSI connection properly (e.g. MTU, permissions, path policy, ...).

Please post the output of the following commands (copy and paste the text output; no screenshots, please):

partedUtil getUsableSectors /vmfs/devices/disks/naa.6589cfc00000022d3b9872e459f4b72c

partedUtil getptbl /vmfs/devices/disks/naa.6589cfc00000022d3b9872e459f4b72c

André

---

Hi, today I deleted the extent in FreeNAS and created a new one, so the device naa.6589cfc00000022d3b9872e459f4b72c has been replaced by naa.6589cfc0000006fe84390784a44bb44c.

I think I have configured the FreeNAS iSCSI connection properly, because I can add the FreeNAS disk to Windows 10 and use it as a local disk.

Here is the command output:

[root@server3:~] partedUtil getUsableSectors /vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c

34 1048575966

[root@server3:~] partedUtil getptbl /vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c

gpt

65270 255 63 1048576000

[root@server3:~]
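
Reading this output: `getUsableSectors` prints the first and last sector a partition may use (34 and 1048575966 here), and the second line of `getptbl` is the geometry (cylinders, heads, sectors per track, total sectors). No partition lines follow it, so the new LUN has an empty GPT table. A small sketch of that arithmetic, assuming 512-byte logical sectors: 1048576000 × 512 bytes is exactly 500 GiB. The helpers `device_size_gib` and `partition_count` are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: device size in whole GiB from the total-sector field
# (4th field on line 2 of `partedUtil getptbl`).
# Assumes 512-byte logical sectors unless a second argument is given.
device_size_gib() {
    sectors="$1"
    sector_size="${2:-512}"
    bytes=$((sectors * sector_size))
    # 1 GiB = 1073741824 bytes; any fraction is truncated.
    echo $((bytes / 1073741824))
}

# Hypothetical helper: count partition lines (line 3 onward) in
# `partedUtil getptbl` output read from stdin.
partition_count() {
    awk 'NR > 2 && NF > 0 { n++ } END { print n + 0 }'
}
```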

---

1. Create a "gpt" type partition table (note that this command removes all previously existing partitions on the given device):

   partedUtil mklabel /vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c gpt

2. Create a VMFS partition with the maximum size, based on the output of the "partedUtil getUsableSectors ..." command:

   partedUtil setptbl /vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c gpt "1 2048 1048575966 AA31E02A400F11DB9590000C2911D1B8 0"

3. Verify that the partition has been created:

   partedUtil getptbl /vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c

4. Format the VMFS partition as a VMFS6 datastore with the name specified on the command line:

   vmkfstools -C vmfs6 -b 1m -S DatastoreName /vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c:1

5. Rescan the ESXi host's storage (please note the upper-case "-V" in this command):

   vmkfstools -V

André

PS: I removed the verbose "-v" option from command 4.
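
These steps can be generalized to any device by taking the end sector from the `partedUtil getUsableSectors` output instead of typing it by hand. A minimal dry-run sketch, assuming the 2048-sector start and the VMFS partition type GUID used in step 2 and keeping `-b 1m` from step 4; `vmfs_steps` is a hypothetical helper that only prints the commands, so nothing is changed until each line is run by hand.

```shell
#!/bin/sh
# Hypothetical helper: print the five steps for one device.
# The partition end comes from `partedUtil getUsableSectors` ("<first> <last>").
VMFS_GUID=AA31E02A400F11DB9590000C2911D1B8   # VMFS partition type GUID (step 2)
START_SECTOR=2048                             # start sector used in step 2

vmfs_steps() {
    device="$1"      # e.g. /vmfs/devices/disks/naa.xxxx
    usable="$2"      # e.g. "34 1048575966"
    label="$3"       # datastore name (no spaces in this sketch)
    last=$(echo "$usable" | awk '{ print $2 }')
    # Refuse to print anything if the usable range is missing or too small.
    if [ -z "$last" ] || [ "$last" -le "$START_SECTOR" ]; then
        echo "unusable sector range: '$usable'" >&2
        return 1
    fi
    echo "partedUtil mklabel $device gpt"
    echo "partedUtil setptbl $device gpt \"1 $START_SECTOR $last $VMFS_GUID 0\""
    echo "partedUtil getptbl $device"
    echo "vmkfstools -C vmfs6 -b 1m -S $label $device:1"
    echo "vmkfstools -V"
}

# Usage:
#   dev=/vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c
#   vmfs_steps "$dev" "$(partedUtil getUsableSectors "$dev")" DatastoreName
```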

---

Hi, is the command 'vmkfstools -v -C vmfs6 -b 1m -S iscsi01 /vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c:1' right, or is something else wrong? The other commands you gave me worked.

Thank you for your patience.

[root@server3:~] partedUtil mklabel /vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c gpt

[root@server3:~] partedUtil setptbl /vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c gpt "1 2048 1048575966 AA31E02A400F11DB9590000C2911D1B8 0"

gpt

0 0 0 0

1 2048 1048575966 AA31E02A400F11DB9590000C2911D1B8 0

[root@server3:~] partedUtil getptbl /vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c

gpt

65270 255 63 1048576000

1 2048 1048575966 AA31E02A400F11DB9590000C2911D1B8 vmfs 0

[root@server3:~] vmkfstools -v -C vmfs6 -b 1m -S DatastoreName /vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c:1

Extra arguments at the end of the command line.

OPTIONS FOR FILE SYSTEMS:

vmkfstools -C --createfs [vmfs5|vmfs6|vfat]

-S --setfsname fsName

-Y --unmapGranularity #[bBsSkKmMgGtT]

-O --unmapPriority <none|low|medium|high>

-Z --spanfs span-partition

-G --growfs grown-partition

deviceName

-P --queryfs -h --humanreadable

-T --upgradevmfs

vmfsPath

-y --reclaimBlocks vmfsPath [--reclaimBlocksUnit #blocks]

OPTIONS FOR VIRTUAL DISKS:

vmkfstools -c --createvirtualdisk #[bBsSkKmMgGtT]

-d --diskformat [zeroedthick|thin|eagerzeroedthick]

-a --adaptertype [deprecated]

-W --objecttype [file|vsan|vvol|pmem|upit]

--policyFile <fileName>

-w --writezeros

-j --inflatedisk

-k --eagerzero

-K --punchzero

-U --deletevirtualdisk

-E --renamevirtualdisk srcDisk

-i --clonevirtualdisk srcDisk

-d --diskformat [zeroedthick|thin|eagerzeroedthick|rdm:<device>|rdmp:<device>|2gbsparse]

-W --object [file|vsan|vvol]

--policyFile <fileName>

-N --avoidnativeclone

-X --extendvirtualdisk #[bBsSkKmMgGtT]

[-d --diskformat eagerzeroedthick]

-M --migratevirtualdisk

-r --createrdm /vmfs/devices/disks/...

-q --queryrdm

-z --createrdmpassthru /vmfs/devices/disks/...

-v --verbose #

-g --geometry

-x --fix [check|repair]

-e --chainConsistent

-Q --objecttype name/value pair

--uniqueblocks childDisk

--dry-run [-K]

vmfsPath

OPTIONS FOR DEVICES:

-L --lock [reserve|release|lunreset|targetreset|busreset|readkeys|readresv] /vmfs/devices/disks/...

-B --breaklock /vmfs/devices/disks/...

OPTIONS FOR VMFS MODULE:

--traceConfig [0|1]

--dataTracing [0|1]

--traceSize <x> (MB)

vmkfstools -H --help

[root@server3:~]

---

It seems that the command doesn't like the verbose "-v" option (the usage text lists it as "-v --verbose #", so it apparently expects a numeric level), so I removed it from my previous reply in case others run into similar issues.

You could even omit the block size "-b 1m" from the command line, because VMFS5 and later use a unified 1 MB block size by default. However, this isn't relevant to the problem here.

André

---

I tried some different commands. The output shows 'unmapGranularity 1048576, unmapPriority default'. Is this message useful?

[root@server3:~] partedUtil mklabel /vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c gpt

[root@server3:~] partedUtil setptbl /vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c gpt "1 2048 1048575966 AA31E02A400F11DB9590000C2911D1B8 0"

gpt

0 0 0 0

1 2048 1048575966 AA31E02A400F11DB9590000C2911D1B8 0

[root@server3:~] partedUtil getptbl /vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c

gpt

65270 255 63 1048576000

1 2048 1048575966 AA31E02A400F11DB9590000C2911D1B8 vmfs 0

[root@server3:~] vmkfstools -C vmfs6 -S DatastoreName /vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c:1

create fs deviceName:'/vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c:1', fsShortName:'vmfs6', fsName:'DatastoreName'

deviceFullPath:/dev/disks/naa.6589cfc0000006fe84390784a44bb44c:1 deviceFile:naa.6589cfc0000006fe84390784a44bb44c:1

Checking if remote hosts are using this device as a valid file system. This may take a few seconds...

Creating vmfs6 file system on "naa.6589cfc0000006fe84390784a44bb44c:1" with blockSize 1048576, unmapGranularity 1048576, unmapPriority default and volume label "DatastoreName".

Failed to create VMFS on device naa.6589cfc0000006fe84390784a44bb44c:1

Usage: vmkfstools -C [vmfs5|vmfs6|vfat] /vmfs/devices/disks/vml... or,

vmkfstools -C [vmfs5|vmfs6|vfat] /vmfs/devices/disks/naa... or,

vmkfstools -C [vmfs5|vmfs6|vfat] /vmfs/devices/disks/mpx.vmhbaA:T:L:P

Error: Invalid argument

[root@server3:~] vmkfstools -C vmfs6 -S DatastoreName /vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c:1

create fs deviceName:'/vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c:1', fsShortName:'vmfs6', fsName:'DatastoreName'

deviceFullPath:/dev/disks/naa.6589cfc0000006fe84390784a44bb44c:1 deviceFile:naa.6589cfc0000006fe84390784a44bb44c:1

Checking if remote hosts are using this device as a valid file system. This may take a few seconds...

Creating vmfs6 file system on "naa.6589cfc0000006fe84390784a44bb44c:1" with blockSize 1048576, unmapGranularity 1048576, unmapPriority default and volume label "DatastoreName".

Failed to create VMFS on device naa.6589cfc0000006fe84390784a44bb44c:1

Usage: vmkfstools -C [vmfs5|vmfs6|vfat] /vmfs/devices/disks/vml... or,

vmkfstools -C [vmfs5|vmfs6|vfat] /vmfs/devices/disks/naa... or,

vmkfstools -C [vmfs5|vmfs6|vfat] /vmfs/devices/disks/mpx.vmhbaA:T:L:P

Error: Invalid argument

[root@server3:~] vmkfstools -C vmfs6 /vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c:1

create fs deviceName:'/vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c:1', fsShortName:'vmfs6', fsName:'(null)'

deviceFullPath:/dev/disks/naa.6589cfc0000006fe84390784a44bb44c:1 deviceFile:naa.6589cfc0000006fe84390784a44bb44c:1

Checking if remote hosts are using this device as a valid file system. This may take a few seconds...

Creating vmfs6 file system on "naa.6589cfc0000006fe84390784a44bb44c:1" with blockSize 1048576, unmapGranularity 1048576, unmapPriority default and volume label "none".

Failed to create VMFS on device naa.6589cfc0000006fe84390784a44bb44c:1

Usage: vmkfstools -C [vmfs5|vmfs6|vfat] /vmfs/devices/disks/vml... or,

vmkfstools -C [vmfs5|vmfs6|vfat] /vmfs/devices/disks/naa... or,

vmkfstools -C [vmfs5|vmfs6|vfat] /vmfs/devices/disks/mpx.vmhbaA:T:L:P

Error: Invalid argument

[root@server3:~] vmkfstools -C vmfs6 -S aa01 /vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c:1

create fs deviceName:'/vmfs/devices/disks/naa.6589cfc0000006fe84390784a44bb44c:1', fsShortName:'vmfs6', fsName:'aa01'

deviceFullPath:/dev/disks/naa.6589cfc0000006fe84390784a44bb44c:1 deviceFile:naa.6589cfc0000006fe84390784a44bb44c:1

Checking if remote hosts are using this device as a valid file system. This may take a few seconds...

Creating vmfs6 file system on "naa.6589cfc0000006fe84390784a44bb44c:1" with blockSize 1048576, unmapGranularity 1048576, unmapPriority default and volume label "aa01".

Failed to create VMFS on device naa.6589cfc0000006fe84390784a44bb44c:1

Usage: vmkfstools -C [vmfs5|vmfs6|vfat] /vmfs/devices/disks/vml... or,

vmkfstools -C [vmfs5|vmfs6|vfat] /vmfs/devices/disks/naa... or,

vmkfstools -C [vmfs5|vmfs6|vfat] /vmfs/devices/disks/mpx.vmhbaA:T:L:P

Error: Invalid argument

[root@server3:~]

---

Hello,

I found that when I use the FreeNAS iSCSI disk to create a VMFS6 datastore, the error occurs.

However, when I use the same FreeNAS iSCSI disk to create a VMFS5 datastore, everything is OK.

I really don't know why. Can anyone help explain this? Thank you.

I found some differences between VMFS5 and VMFS6, but I cannot explain why this error occurs.

---

I have the same problem: I can create a VMFS5 datastore, but not a VMFS6 datastore.

I have VMware ESXi 6.7.0 (build 15160138) and FreeNAS 11.3-U1.

---

I tried to create a VMFS6 datastore with VMware ESXi 6.5.0 (build 15256549), and there is no problem with FreeNAS 11.3-U1.

---

Sounds like a FreeNAS problem. I'd ask there.

---

I think it is a VMware problem.

I can create VMFS6 on ESXi 6.5, but I cannot create VMFS6 on ESXi 6.7 via iSCSI.

---

> I think it is a VMware problem.

You're just guessing here. FreeNAS is nowhere to be found on this list, which means it's unsupported to begin with. There are significant differences between VMFS-5 and 6, so just because one works doesn't mean the other should.

---

Hello Cemoka,

I know it has been a while since you posted this and maybe you already moved on.

Since I ran into the same problem and this is the only thread that comes up in searches, I figured I'd leave a reply.

As my fellow vExpert daphnissov suggested, this problem is not in VMware ESXi. Apparently (don't ask me exactly what changed), 6.7 runs the latest version of VMFS6, which has had some improvements, and it doesn't like the way FreeNAS handles and spoofs the block size, producing a strange error as if there were already data on the iSCSI LUN.

As you noticed, you can create VMFS5, but VMFS6 will fail.

Simply put, FreeNAS needs to be brought up to speed to handle the latest VMFS6 requests nicely.

Now, I don't know whether you are new to FreeNAS (I am), but I made the mistake of using FILE-based extents for iSCSI sharing in FreeNAS, which means the extent is stored as a file either directly on the storage pool or inside a dataset, depending on what you choose.

With a file-based iSCSI extent, VMFS6 fails to match the logical block size given to the extent with the physical block size of the drive (anyone, correct me if I'm wrong here).

You can fix this in FreeNAS by selecting the option "

Next, make sure the logical block size is set to 512, as I've found this to be the only working logical block size.
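
To see what an extent actually reports, ESXi 6.5 and later should list each device's logical and physical block size with `esxcli storage core device capacity list` (worth confirming on your build). The hypothetical helper below only names the combination using the common sector-format terms: 512n (512/512), 512e (512 logical on 4096 physical) and 4Kn (4096/4096). Anything else, for example a physical size larger than 4096 bytes, is reported as `unknown` and would be worth comparing with the extent settings.

```shell
#!/bin/sh
# Hypothetical helper: classify a device by logical/physical block size.
#   512n : 512-byte logical, 512-byte physical
#   512e : 512-byte logical, 4096-byte physical (emulated 512)
#   4Kn  : 4096-byte logical, 4096-byte physical
# Any other combination is printed as "unknown".
block_format() {
    logical="$1"
    physical="$2"
    case "$logical/$physical" in
        512/512)   echo 512n ;;
        512/4096)  echo 512e ;;
        4096/4096) echo 4Kn ;;
        *)         echo unknown ;;
    esac
}
```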

Adding the VMFS6 datastore now works, but here comes the catch: the datastore reports that UNMAP is not supported by the device.

This got me thinking again, because FreeNAS supports VAAI and should support UNMAP. This is where I found out I was still a newbie at FreeNAS!

You are NOT supposed to be using iSCSI with file based extents on pools or datasets for VMware!

To do this the right way, create a ZVOL, which supports VAAI and works perfectly as a DEVICE iSCSI extent, ideal for a VMware ESXi datastore.

For more info see: https://www.ixsystems.com/documentation/freenas/11.2/storage.html#adding-zvols

I created a ZVOL with a 16K block size (under advanced settings), added it to a device-based extent with a logical block size of 512, and ta-da!

All nice and dandy with UNMAP support!

Hope it helps.
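
The zvol above was created in the FreeNAS web UI, which remains the documented route. For reference, the underlying ZFS operation is roughly `zfs create -V <size> -o volblocksize=16K <pool>/<name>`, and on FreeNAS (FreeBSD) the resulting device normally appears under `/dev/zvol/<pool>/<name>`. The sketch below only prints those strings; the helper names and the pool/zvol name `tank/esxi01` are hypothetical placeholders.

```shell
#!/bin/sh
# Hypothetical helper: print a `zfs create` command for a zvol that will
# back a device-based iSCSI extent. Nothing is executed.
zvol_create_cmd() {
    dataset="$1"               # pool/name, e.g. tank/esxi01 (placeholder)
    size="$2"                  # e.g. 500G
    volblocksize="${3:-16K}"   # 16K as in the reply above
    echo "zfs create -V $size -o volblocksize=$volblocksize $dataset"
}

# Hypothetical helper: device path the zvol would normally get on FreeBSD.
zvol_device_path() {
    echo "/dev/zvol/$1"
}
```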

---

Thanks Drocona!

I am playing with FreeNAS in my home lab and ran into this problem. I had read that FreeNAS does not recommend NFS for VMware and suggests using iSCSI instead. I probably should have read further, as they may have referenced a how-to on setting up iSCSI with FreeNAS and vSphere 6.7. 😉

After encountering the same problems described here, your guidance on the Zvol got things working quickly for me. I am using FreeNAS as the features and flexibility are attractive, but with that flexibility comes complexity that requires understanding.

Yes, it helped!

Thanks again,

Tim

---

Just wanted to share that I was having this same problem. I could not create a VMFS6 datastore and was getting the same errors. I also use FreeNAS in my home lab (now called TrueNAS Core).

I was getting this because I was setting up the LUN on TrueNAS incorrectly, since I rarely have to do it. I was trying to create the LUN under Sharing > Block Shares > Extents and selecting File. What I should have done was go to Storage > Pools, click the menu (...) next to the pool name, and choose Add Zvol. Once the zvol is created, go back to Sharing and set up the extent to point to the new zvol device.

I know this is an older post, but it was my first result and wanted to share what I found in case it helps someone else in the future!