Monster VM only using half of host CPU resources

OK first the facts...

* ESXi 5.5 U2

* Host server(s) have 2 sockets with 8 cores each. That's 16 physical cores per server and 32 when you factor in hyper-threading.

* One VM has 16 vCPUs allocated with 64 GB RAM

* vNUMA is enabled and coreinfo.exe shows the proper logical to physical layout.

Since this host primarily services only this one "monster" VM, we kept the vCPU count at 16, as we did not want to overcommit with hyper-threading given the single large VM scenario.

Given this configuration, the host commonly runs at half the CPU load relative to the VM (we are using VMware-provided metrics, but the guest OS reports numbers largely in sync with VMware).

For example, the VM is at 90% for 20 minutes and CPU is only 40-50% on the host. During this time we see all physical cores in ESX being utilized within 10% of each other.

Throwing aside hyper threading, we were expecting one single VM to be more efficient with 16 vCPUs given the physical layout, but why is the VM at 90% when the host is at 40-50%?

Seems either our understanding of hyper-threading is wrong (meaning we need to add more vCPUs) or there is something else at work that prevents the VM from using more of the host's resources.

Any ideas would be appreciated. We would like to unlock more of the host server CPU. Thanks!

Hi.

Did you disable Hyper-Threading on the physical server (in the BIOS) or not?

Could you try configuring the VM with 2 virtual sockets and 16 cores per socket, and then check the performance?

Regards.

> Seems either our understanding of hyper-threading is wrong (meaning we need to add more vCPUs) or there is something else at work that prevents the VM from using more of the host's resources.

A manually configured CPU limit on the VM would come to mind, but I doubt it's that.

What exact metrics are you monitoring on the ESXi host? Don't compare % CPU utilization. As the physical host has 32 threads, it's obvious that half of them will stay idle with a single 16-vCPU VM. Check the host and VM MHz usage metrics and how they compare to the host's total MHz capacity.

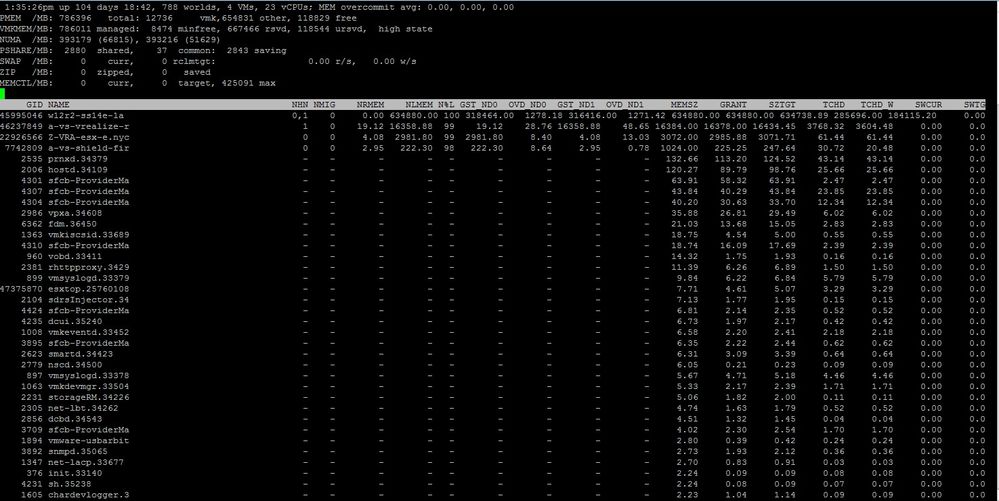
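The percentage mismatch described above can be sketched with simple arithmetic. This is only an illustration, assuming each busy vCPU occupies one logical CPU and nothing else runs on the host; the function name is hypothetical and not part of any VMware tool.

```python
def host_percent_from_vm(vm_percent, vcpus, logical_cpus):
    """Estimate host-wide CPU % when only this VM's vCPUs are busy.

    Assumption: each busy vCPU occupies exactly one logical CPU, and the
    host percentage is averaged over all logical CPUs (HT threads included).
    """
    if vcpus <= 0 or logical_cpus <= 0:
        raise ValueError("vcpus and logical_cpus must be positive")
    return vm_percent * vcpus / logical_cpus


# Figures from this thread: a 16-vCPU VM at 90% on a host with 32 logical CPUs.
print(host_percent_from_vm(90.0, 16, 32))
```

Under these assumptions a VM at 90% lands in the 40-50% range on the host, which matches what was reported, without implying that half of the host's real capacity is unused.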

Also please post some screenshots from (r)esxtop to get a detailed picture:

In the CPU section, press 'V' (capital), press 'e' and enter the VM GID to expand per-vCPU metrics

Switch to the memory section ('m'), press 'f', press 'g' to include NUMA stats
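If screenshots are awkward to capture, the same counters can also be exported with esxtop's batch mode (`esxtop -b`), which writes CSV. The sketch below averages every column whose header contains a given substring. The column names in the sample are made up for illustration; real esxtop headers are longer and differ, so check the header row of your own export before choosing the substring.

```python
import csv
import io


def mean_of_matching_columns(csv_text, needle):
    """Average each CSV column whose header contains `needle`."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    indexes = [i for i, name in enumerate(header) if needle in name]
    if not indexes:
        raise ValueError(f"no column header contains {needle!r}")
    sums = [0.0] * len(indexes)
    rows = 0
    for row in reader:
        for k, i in enumerate(indexes):
            sums[k] += float(row[i])
        rows += 1
    if rows == 0:
        raise ValueError("no data rows found")
    return {header[i]: sums[k] / rows for k, i in enumerate(indexes)}


# Hypothetical sample data; not real esxtop output.
sample = (
    "time,vcpu0 %USED,vcpu1 %USED,idle\n"
    "t1,80.0,90.0,5.0\n"
    "t2,100.0,70.0,3.0\n"
)
print(mean_of_matching_columns(sample, "%USED"))
```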

Hello there,

the ESXi stat reporting in esxtop actually aggregates the logical cores into the "total usage" figure. If you take a look at the PCPU USED/PCPU UTIL metrics, you will see that the physical cores are quite loaded, with the hyper-threaded logical cores helping them push toward the ideal limit of 4 instructions per clock cycle, which means some utilization is also attributed to those logical cores. I guess this is why you see such low utilization.

Thanks for the response. Hyperthreading is enabled in BIOS.

Right now the VM is one virtual socket with 16 cores. We could go to 2/16, but we are concerned about doing so because, based on what we've read, you don't want to allocate more total vCPUs to a "monster VM" than there are physical cores (not logical ones from HT).

Thanks for the response. A few screen shots below.

As you'll see, the cores track within 10% of each other. Trying to wrap my head around how MHz is being measured 2x greater on the VM than on the host.

Hello,

would you please share a screenshot containing the Guest OS task manager with all cores shown while the processor usage is peaking & esxtop with VM's world expanded at that time? Also, do you have Power Management set to High Performance inside your Guest OS? This is really quite an interesting issue.

Please post MHz usage screenshots from the advanced performance charts in the vSphere Client and not from vCOPS or somewhere else.

> Right now the VM is one virtual socket with 16 cores.

Are you sure it's set up like this? I'm a bit surprised vNUMA is even enabled with a single socket.

Generally you should only configure sockets and not change the number of cores unless you really have to because of licensing restrictions. If you do then you should replicate your physical host hardware (e.g. use 2/8 for a 2 socket host).

Please read this article for more detailed explanation:

Does corespersocket Affect Performance? | VMware vSphere Blog - VMware Blogs
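As a rough sketch of the "replicate your physical host hardware" advice, the helper below splits a vCPU count evenly across as many virtual sockets as the host has physical sockets. The function names are hypothetical. The two .vmx keys shown (`numvcpus`, `cpuid.coresPerSocket`) are the settings I would expect to correspond to this layout, but treat that as an assumption and verify it against your own VM's configuration file.

```python
def virtual_topology(total_vcpus, host_sockets):
    """Return (virtual_sockets, cores_per_socket) mirroring the host's socket count."""
    if total_vcpus <= 0 or host_sockets <= 0:
        raise ValueError("total_vcpus and host_sockets must be positive")
    if total_vcpus % host_sockets != 0:
        raise ValueError("vCPU count does not divide evenly across host sockets")
    return host_sockets, total_vcpus // host_sockets


def vmx_lines(total_vcpus, cores_per_socket):
    """Render the two .vmx entries assumed to describe this layout."""
    return [
        f'numvcpus = "{total_vcpus}"',
        f'cpuid.coresPerSocket = "{cores_per_socket}"',
    ]


# The host in this thread: 2 sockets x 8 cores, with a 16-vCPU VM.
sockets, cores = virtual_topology(16, 2)
print(sockets, cores)
print("\n".join(vmx_lines(16, cores)))
```

For the host discussed here this yields the 2/8 layout mentioned above. The default recommendation in the post still stands: only change cores per socket when licensing forces you to.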

This is the catch! Let me get to a few points:

- You are instructing the ESXi CPU scheduler to keep all 16 cores inside one socket and to allocate the memory that way. My benchmarks, which I will present in an article on my blog soon, revealed that this configuration (1 socket containing N cores, where N is greater than the core count of one NUMA node) brings memory performance down by ~25%. You lose 15% memory read and 20% write performance (throughput, GB/s), get 13% fewer DB ops (100/s), and see memory latency that is 1/3 worse. If you enable "Show kernel times" in the Task Manager CPU screen on your Windows 2012 R2 machine, you may find that the ~40% CPU time overhead you see between your VM and the ESXi host is being spent in the guest OS kernel, which is waiting for data to be manipulated in memory. Hence the Windows OS seems busy, but the instructions passed down to the ESXi host do not include any guest OS kernel waits. This is my theory, but it makes sense.

- To work around the above, if you do not want to commit all physical CPUs to this VM, try exposing the ESXi host's Hyper-Threading to the VM by adding numa.vcpu.preferHT = TRUE to the VM's advanced options while keeping the 1 socket x 16 cores VM configuration. See this KB: http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=200358...

- Does your database server really need all these cores? You could try going with 2x4 or 2x6 for starters and check the esxtop/guest OS CPU usage discrepancy.

Please report back as I'd really like to hear if the Core Per Socket config could be playing a part in the problem you are having.

Indeed, in my benchmarking sessions I have found that the more sockets, the happier ESXi is with scheduling.

Thanks for the info! I'll ask for approval to make this change, but it may not be timely, as the DBAs don't like downtime for maintenance.

I understand, databases are not the thing you like to see down. Try to have a small time window for yourself so that you can play around with the settings and then settle on the one you find the most beneficial. I guess the DBAs can then rejoice that they have a better-performing server.

Fingers crossed and good luck!

Well, that sheds some new light on the case. You could also try using Process Explorer from Sysinternals to analyze the guest OS processes closely and see what exactly is taking up a fair amount of CPU time.

Look, you wrote "one virtual socket with 16 cores", which was quite confusing considering the circumstances. Your screenshot now says "16 virtual sockets with 1 core each".

With this the vNUMA layout of the VM is already optimal as we can see in the esxtop memory screenshot:

The VM resides on NUMA home node 0 and 1, with 32GB memory on each node and memory locality is 100% local. This means no remote memory access that could potentially impact memory throughput or latency (as long as the guest OS is also aware of the NUMA layout, which is the case as provided by your in-guest screenshot).

I'm not sure whether the preferHT parameter will actually help overall performance in this case because the memory layout is already optimal. It might improve CPU cache hit rate, but you will also be confined to one socket's CPU resources of 8 real cores and 8 HT threads, instead of 16 real cores from both sockets as is the case now.

What is PreferHT and When To Use It | VMware vSphere Blog - VMware Blogs

> It is important to recognize though that by using this setting, you are telling vSphere you’d rather have access to processor cache and NUMA memory locality as priority, over the additional compute cycles. So there is a trade off.
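To make the trade-off concrete, here is a deliberately simplified model of how many NUMA nodes a VM spans with and without preferHT. It assumes the scheduler sizes a node by physical cores by default and by logical threads when preferHT is set; the real ESXi NUMA scheduler considers more than this, and the function name is hypothetical.

```python
import math


def numa_nodes_needed(vcpus, cores_per_node, threads_per_core=2, prefer_ht=False):
    """Simplified count of NUMA nodes a VM would span.

    Assumption: without preferHT a node holds `cores_per_node` vCPUs; with
    preferHT it holds `cores_per_node * threads_per_core` vCPUs.
    """
    if vcpus <= 0 or cores_per_node <= 0 or threads_per_core <= 0:
        raise ValueError("all counts must be positive")
    capacity = cores_per_node * (threads_per_core if prefer_ht else 1)
    return math.ceil(vcpus / capacity)


# The host in this thread: 8 cores per socket, 2 threads per core, 16-vCPU VM.
print(numa_nodes_needed(16, 8))                  # spans both sockets, 16 real cores
print(numa_nodes_needed(16, 8, prefer_ht=True))  # one socket: 8 cores + 8 HT threads
```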

Apart from this, your initial question wasn't about performance issues or improvements, but about a difference in measured values between VM and host stats. As mentioned earlier, the % usage differs because the host figure includes HT threads (32*100% vs 16*100%), so compare the advanced performance charts in the vSphere Client for total MHz usage. Not sure why vCOPS, or whatever you are using, measures more MHz than your physical host (MHz capacity counts physical cores only, no HT threads).
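A minimal sketch of the MHz comparison suggested here, counting physical cores only. The 2600 MHz clock and the 37440 MHz usage figure are placeholders, since the thread never states the CPU model or actual readings; substitute the values from your own performance charts.

```python
def host_mhz_capacity(physical_cores, core_mhz):
    """Total host capacity in MHz, counting physical cores only (no HT threads)."""
    if physical_cores <= 0 or core_mhz <= 0:
        raise ValueError("physical_cores and core_mhz must be positive")
    return physical_cores * core_mhz


def usage_fraction(used_mhz, capacity_mhz):
    """Share of the host's MHz capacity that is currently consumed."""
    if capacity_mhz <= 0:
        raise ValueError("capacity_mhz must be positive")
    return used_mhz / capacity_mhz


# 16 physical cores as in this thread; clock speed and usage are assumed values.
capacity = host_mhz_capacity(16, 2600)
print(capacity)
print(round(usage_fraction(37440, capacity), 2))
```

On this basis a VM that saturates its 16 vCPUs should show MHz usage close to the host's total capacity, so a VM reading higher than the host figure points at the measuring tool rather than at the scheduler.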

In theory this would explain much. Recently there were reports of latency issues which can be observed from the guest OS metrics but NOT from vSphere/vROPs metrics. Very curious now and thanks for all the great info.

Also, here's the guest with esxtop side by side.

The CPU usage graph you posted is actually very accurate in both esxtop and resmon. If you map CPU0 inside the guest OS to vcpu0, and the NUMA node's CPU0 to vcpu8 onwards, you can see that the values match.

By this screenshot everything seems to be in order. I'm quite confused now.

That's where we've been.

We had some CPU stress moments and the concern (based on vSphere metrics) was that the VM was saturating its CPU, but the host still had plenty to give. I could see CPU % being off, but not MHz.

It's all very strange to me

That's because you can't expect an exact 1:1 mapping of vCPUs to physical cores (including their logical Hyper-Threading helpers) where usage is concerned. Of course, when the guest OS generates a workload (let's say, an instruction), the same instruction must be processed in the same fashion by the ESXi host's CPU scheduler. The hyper-threaded cores help coalesce the instructions into one clock cycle, so the % utilization will differ depending on the workload of each VM running on top of the ESXi host, but this happens in the internals of the VMkernel. There are many more factors, like a single vCPU being scheduled on different cores because of "niceness" and other variables.

The CPU % then tells you that the ESXi host still has some resources to spare, whereas the VM is pretty much stressed out.

Take a look at this article to understand the esxtop metrics further: Interpreting esxtop Statistics