VSAN 6.7.0 cache All Flash ratio

I have a small doubt about this. I have read the articles on the topic (Cormac, Yellow-Bricks and TheBob), but I am still unsure.

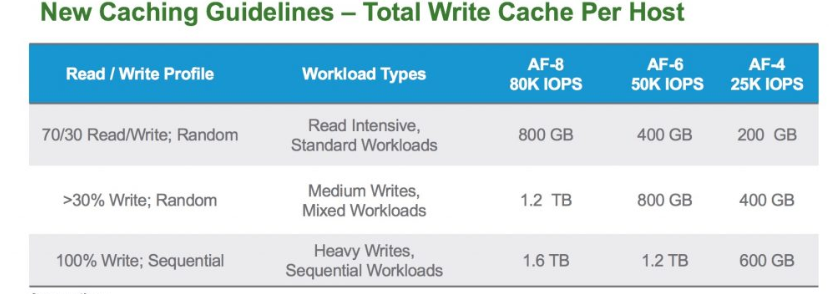

As per the articles and the cache chart, the 10% calculation applies only to hybrid vSAN, not to all-flash (AF) vSAN; for AF, the cache needs to be sized based on expected/known workloads.

Designing vSAN Disk groups - All Flash Cache Ratio Update - Virtual Blocks

Extending All Flash vSAN Cache Tier Sizing Requirement

Basic questions for vSAN size disk selection

This is for my new AF setup (5 ESXi hosts with vSAN).

VSAN cache All Flash ratio

Per ESXi host: 2 disk groups, total capacity 3,840 GB

6 x 480 GB for capacity

2 x 480 GB for cache

Doubts: I don't know the customer workloads yet, as this is a new setup, so we sized the cache like this (2 x 480 GB cache disks per ESXi host).

Is this cache sizing OK?

If the customer's workload turns out to be 100% sequential writes, can we then choose 2 x 600 GB (2 DGs, 50K IOPS)?

Please advise,

Thank you,

Manivel R

Hello Manivel,

I would advise always knowing at least rough (tested) metrics of the expected storage workload, even just the basics such as IOPS and their R/W ratio, IO size and typical patterns (e.g. bursty or prolonged heavy loads during certain activities). If the workload is already running on other non-vSAN infrastructure, there are tools available for looking at the current storage workload, such as Live Optics or VMware Flings such as IOInsight.

If the workload doesn't exist yet, then you should really be doing basic estimates of the workload characteristics and load, based on the whitepapers of the application(s) you will be running and/or user/admin experiences. Alternatively, if you have similar test infrastructure available, you can recreate the expected workload type (or a realistic proportion of it) and use that info for a rough idea. You need to take a lot of things into account here, and it can be hard to simulate real environments fully realistically.

Any particular reason for using such small capacity-tier devices? You would use fewer slots (and thus could expand later if required) and would likely get better usage balance with something a bit bigger (as that is less than 3 full-size components per disk).
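To put a rough number on the component remark, here is a minimal sketch. It assumes the commonly cited 255 GB maximum size of a vSAN component and ignores on-disk format overhead and GB/GiB differences; the function name is made up for illustration.

```python
# Assumption: vSAN splits objects into components of at most 255 GB.
MAX_COMPONENT_GB = 255


def full_components_per_disk(disk_gb: float) -> float:
    """Return how many maximum-size components fit on one capacity device."""
    return disk_gb / MAX_COMPONENT_GB


# 480 GB is the device size from the question; 1000 GB is a larger alternative.
for disk_gb in (480, 1000):
    count = full_components_per_disk(disk_gb)
    print(f"{disk_gb} GB disk: {count:.2f} full-size components")
```

Under these assumptions a 480 GB device holds fewer than two full-size components, while a 1 TB device holds almost four, which is why larger devices tend to balance more easily.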

If there isn't a massive price difference between 480GB and 600GB devices of the same quality, consider going with 600GB. Your cache:capacity ratio is already very high and should be fine, but you also say you don't know the characteristics of the workload, so err on the side of more as opposed to less.
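For reference, the raw cache:capacity ratio per disk group can be worked out as below. This is only a sketch of the arithmetic using the disk sizes quoted in the question (1 cache device and 3 x 480 GB capacity devices per disk group); the function name is hypothetical, and the ratio is against raw capacity, not consumed capacity.

```python
def cache_ratio(cache_gb: float, capacity_disks: int, capacity_disk_gb: float) -> float:
    """Return cache size divided by raw capacity for a single disk group."""
    return cache_gb / (capacity_disks * capacity_disk_gb)


# One disk group from the question: 3 x 480 GB capacity devices.
for cache_gb in (480, 600):
    ratio = cache_ratio(cache_gb, capacity_disks=3, capacity_disk_gb=480)
    print(f"{cache_gb} GB cache : 1440 GB capacity = {ratio:.1%}")
```

With these inputs the ratio is about 33.3% for a 480 GB cache device and about 41.7% for a 600 GB one, both well above the 10% figure discussed for hybrid configurations.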

Bob

Thanks very much again, Bob, for your inputs.

This is a new Kubernetes setup, and we are currently analysing the customer workloads to get a rough idea.

Our setup has 5 ESXi servers. Our vSAN storage policy will be RAID 5 with FTT=1.

We have many free disk slots on each server. The total capacity (decided by management) of each ESXi server will be 3,840 GB only, so we decided to go with 2 DGs to get more performance/resiliency.

Components: I got your point. If I go with the 2nd approach (for example), can I then accommodate 3 full-size components per disk, unlike with the 1st approach?

1st approach:

1st DG: 3 x 480 GB for capacity, 1 x 480 GB for cache (else we need to replace the cache disk with a 600 GB one)

2nd DG: 3 x 480 GB for capacity, 1 x 480 GB for cache (else we need to replace the cache disk with a 600 GB one)

2nd approach:

Only one DG: a 600 GB or 800 GB cache disk and 3 x 1 TB disks (3 TB) for capacity.

Any more suggestions on this approach? Please advise.

Thank you,

Manivel R

Honestly, I wouldn't recommend going below 600GB, or even better 800GB. With the prices of SSDs so low now, it makes sense to go with at least 800GB to future-proof and extend the life of the disks a bit. You mentioned performance and RAID5 in the same sentence. RAID5 has an IO amplification for every write IO; that is just the way RAID 5 works, not a vSAN thing. If you truly want the best performance, I would recommend going with RAID1. Just remember that you can apply different policies not only to VMs, but also to individual objects (VMDKs) of each VM.
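The write amplification point can be illustrated with the textbook small-write model. This is a sketch under stated assumptions, not a vSAN measurement: a RAID 1 (FTT=1) write is taken as 2 backend writes, a RAID 5 write as 4 backend IOs (read data, read parity, write data, write parity), and reads as 1 backend IO each. Full-stripe sequential writes and caching can behave better than this model, and the workload numbers are purely illustrative.

```python
# Assumed backend IOs generated by one frontend write (textbook model).
BACKEND_IOS_PER_WRITE = {
    "RAID1": 2,  # one write per mirror copy
    "RAID5": 4,  # read data + read parity + write data + write parity
}


def backend_iops(frontend_iops: float, write_fraction: float, raid: str) -> float:
    """Estimate backend IOPS for a given frontend load and write fraction."""
    reads = frontend_iops * (1 - write_fraction)
    writes = frontend_iops * write_fraction
    return reads + writes * BACKEND_IOS_PER_WRITE[raid]


# Illustrative workload: 10,000 frontend IOPS, half of them writes.
for raid in ("RAID1", "RAID5"):
    print(f"{raid}: {backend_iops(10_000, 0.5, raid):,.0f} backend IOPS")
```

In this model the same frontend load costs 15,000 backend IOPS on RAID 1 and 25,000 on RAID 5, and the gap widens as the write fraction grows. The trade-off is space: RAID 1 with FTT=1 keeps two full copies, whereas RAID 5 stores parity instead of a second copy.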

One common mistake during initial design is that people size for the current state without future-proofing.

Thanks much again for your Inputs.