- Re: Unable to expand datastore after adding disk t...

Hi,

I'll try to be as thorough as I can. The short story is that we added a disk to the RAID-6 array in our PowerEdge server. Dell helped me to expand the virtual disk, but we can't seem to grow the datastore.

The datastore as it exists right now is 2TB. The new disk adds 500GB of capacity, making it 2.5TB (or 2513.25 GB, as the Dell iDRAC shows it).

When you look at the partition map, it shows several partitions, all of the "Unknown" type.

So, this shows there is 500GB of free space on the VD. However, the datastore (obviously) shows a 2TB size.

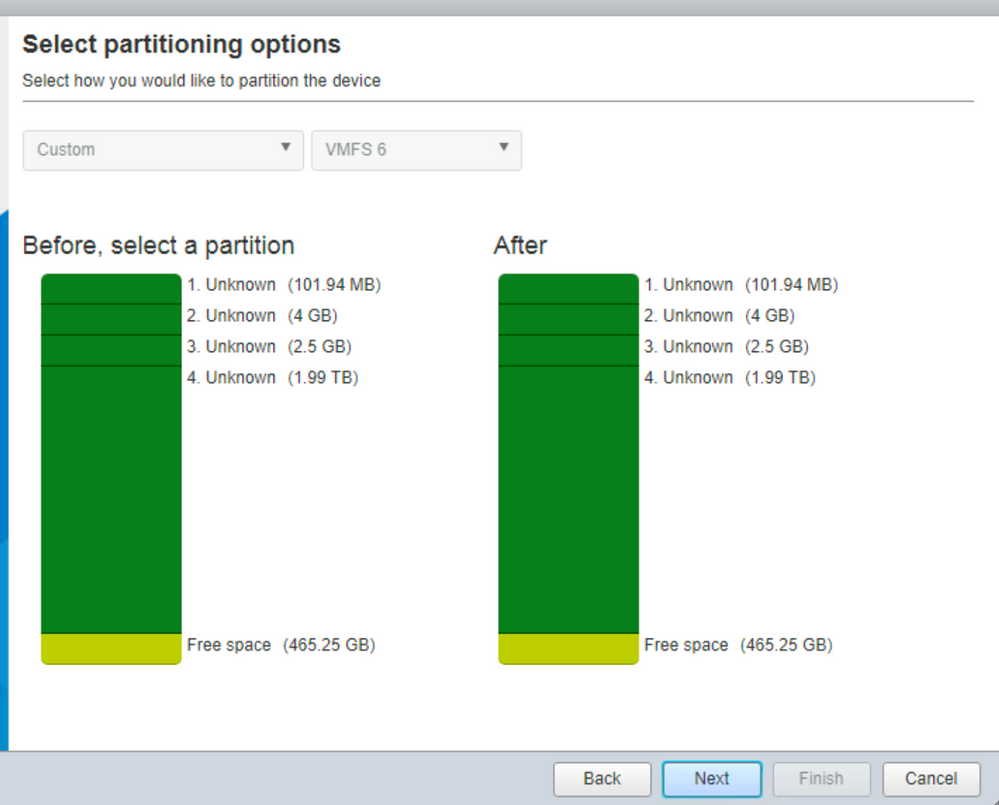

When we attempt to grow the datastore, we see the following:

Initially, Dell told me we couldn't grow the datastore because the formatted type is MBR (msdos). That is true. However, this was not something we chose when creating the datastore and, further, I think I've come to understand that when datastores are under 2TB, ESXi purposely formats them as MBR but then converts to GPT when we grow the datastore beyond 2TB. (I may have misunderstood the KB article.)

https://blogs.vmware.com/vsphere/2011/12/upgraded-vmfs-5-automatic-partition-format-change.html

The datastore is formatted VMFS-6. The ESXi version is 6.7 U1.

Can provide more if needed. Thanks.

- Mike

I thought I'd add something.

I am fairly confident this server was upgraded from 6.5.

Nuts. The VMFS version is 5, not 6.

This is the first time I've seen such an unusual partition table!?

It looks like only some of the partitions that are created by default are located on that VD, and ESXi is installed elsewhere (e.g. on a small USB/SD device).

I'm currently not sure whether the MBR to GPT conversion will work if a datastore is expanded from the command line (which may be the only option in this case).

Anyway, please provide some details about the disks/partitions to see what may be possible. To get the required information, enable SSH on the host and use e.g. PuTTY to connect to it.

Then resize the PuTTY window (to avoid line breaks), and run the following commands:

- ls -lisa /vmfs/devices/disks

- vmkfstools -P "/vmfs/volumes/<DatastoreName>"

- partedUtil getptbl "/vmfs/devices/disks/<deviceName from command 2>"

- partedUtil getUsableSectors "/vmfs/devices/disks/<deviceName from command 2>"

(for details about commands 2 - 4, see https://kb.vmware.com/kb/2002461)

Paste the commands' output to a reply post. Please copy&paste the text output rather than a screenshot of the putty screen.

André

Sorry for the delay. The outputs are below and I'll attach a text file with the output in case the line-breaks are too much to deal with here.

I should report that I've spent about three hours on the phone with Dell ProSupport on this. Since this is an Essentials install, we are escalating to VMware support and I should have a conversation with them tomorrow.

===

ls -lisa /vmfs/devices/disks/

total 4802006888

5 0 drwxr-xr-x 2 root root 512 May 9 16:42 .

1 0 drwxr-xr-x 16 root root 512 May 9 16:42 ..

148 15646720 -rw------- 1 root root 16022241280 May 9 16:42 mpx.vmhba32:C0:T0:L0

136 4064 -rw------- 1 root root 4161536 May 9 16:42 mpx.vmhba32:C0:T0:L0:1

138 255984 -rw------- 1 root root 262127616 May 9 16:42 mpx.vmhba32:C0:T0:L0:5

140 255984 -rw------- 1 root root 262127616 May 9 16:42 mpx.vmhba32:C0:T0:L0:6

142 112624 -rw------- 1 root root 115326976 May 9 16:42 mpx.vmhba32:C0:T0:L0:7

144 292848 -rw------- 1 root root 299876352 May 9 16:42 mpx.vmhba32:C0:T0:L0:8

146 2621440 -rw------- 1 root root 2684354560 May 9 16:42 mpx.vmhba32:C0:T0:L0:9

159 2635333632 -rw------- 1 root root 2698581639168 May 9 16:42 naa.6d094660497b3300225e0a8b0e490e49

151 104391 -rw------- 1 root root 106896384 May 9 16:42 naa.6d094660497b3300225e0a8b0e490e49:1

153 4193280 -rw------- 1 root root 4293918720 May 9 16:42 naa.6d094660497b3300225e0a8b0e490e49:2

155 2621440 -rw------- 1 root root 2684354560 May 9 16:42 naa.6d094660497b3300225e0a8b0e490e49:3

157 2140564479 -rw------- 1 root root 2191938027008 May 9 16:42 naa.6d094660497b3300225e0a8b0e490e49:4

149 0 lrwxrwxrwx 1 root root 20 May 9 16:42 vml.0100000000303132333435363738393031496e7465726e -> mpx.vmhba32:C0:T0:L0

137 0 lrwxrwxrwx 1 root root 22 May 9 16:42 vml.0100000000303132333435363738393031496e7465726e:1 -> mpx.vmhba32:C0:T0:L0:1

139 0 lrwxrwxrwx 1 root root 22 May 9 16:42 vml.0100000000303132333435363738393031496e7465726e:5 -> mpx.vmhba32:C0:T0:L0:5

141 0 lrwxrwxrwx 1 root root 22 May 9 16:42 vml.0100000000303132333435363738393031496e7465726e:6 -> mpx.vmhba32:C0:T0:L0:6

143 0 lrwxrwxrwx 1 root root 22 May 9 16:42 vml.0100000000303132333435363738393031496e7465726e:7 -> mpx.vmhba32:C0:T0:L0:7

145 0 lrwxrwxrwx 1 root root 22 May 9 16:42 vml.0100000000303132333435363738393031496e7465726e:8 -> mpx.vmhba32:C0:T0:L0:8

147 0 lrwxrwxrwx 1 root root 22 May 9 16:42 vml.0100000000303132333435363738393031496e7465726e:9 -> mpx.vmhba32:C0:T0:L0:9

160 0 lrwxrwxrwx 1 root root 36 May 9 16:42 vml.02000000006d094660497b3300225e0a8b0e490e49504552432048 -> naa.6d094660497b3300225e0a8b0e490e49

152 0 lrwxrwxrwx 1 root root 38 May 9 16:42 vml.02000000006d094660497b3300225e0a8b0e490e49504552432048:1 -> naa.6d094660497b3300225e0a8b0e490e49:1

154 0 lrwxrwxrwx 1 root root 38 May 9 16:42 vml.02000000006d094660497b3300225e0a8b0e490e49504552432048:2 -> naa.6d094660497b3300225e0a8b0e490e49:2

156 0 lrwxrwxrwx 1 root root 38 May 9 16:42 vml.02000000006d094660497b3300225e0a8b0e490e49504552432048:3 -> naa.6d094660497b3300225e0a8b0e490e49:3

158 0 lrwxrwxrwx 1 root root 38 May 9 16:42 vml.02000000006d094660497b3300225e0a8b0e490e49504552432048:4 -> naa.6d094660497b3300225e0a8b0e490e49:4

vmkfstools -P /vmfs/volumes/datastore1/

VMFS-5.81 (Raw Major Version: 14) file system spanning 1 partitions.

File system label (if any): datastore1

Mode: public

Capacity 2191775498240 (2090240 file blocks * 1048576), 560960897024 (534974 blocks) avail, max supported file size 69201586814976

Disk Block Size: 512/512/0

UUID: 5acb81a8-c4a14077-8493-801844eac296

Partitions spanned (on "lvm"):

naa.6d094660497b3300225e0a8b0e490e49:4

Is Native Snapshot Capable: YES

partedUtil getptbl /vmfs/devices/disks/naa.6d094660497b3300225e0a8b0e490e49

msdos

328083 255 63 5270667264

1 63 208844 222 0

2 208845 8595404 6 0

3 8595405 13838284 252 0

4 13838336 4294967294 251 0

partedUtil getUsableSectors /vmfs/devices/disks/naa.6d094660497b3300225e0a8b0e490e49

1 5270667263
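For anyone who wants to read this output programmatically, here is a minimal Python sketch. It assumes the msdos layout exactly as pasted above: a label line, a geometry line (cylinders, heads, sectors per track, total sectors), then one line per partition (number, start sector, end sector, type, attribute). The `Partition` class and `parse_getptbl_msdos` function are hypothetical helpers written for illustration, not part of any VMware tool, and the 512-byte sector size is taken from the "Disk Block Size: 512/512/0" line.

```python
from dataclasses import dataclass


@dataclass
class Partition:
    number: int
    start: int    # first sector (inclusive)
    end: int      # last sector (inclusive)
    type_id: int  # MBR partition type, decimal as printed by partedUtil
    attr: int

    def size_bytes(self, sector_size: int = 512) -> int:
        # Both start and end are inclusive, hence the +1.
        return (self.end - self.start + 1) * sector_size


def parse_getptbl_msdos(text: str):
    """Parse 'partedUtil getptbl' text for an msdos (MBR) table (hypothetical helper)."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    label = lines[0]
    if label != "msdos":
        raise ValueError(f"this sketch only handles msdos tables, got {label!r}")
    # Geometry line: cylinders, heads, sectors per track, total sectors.
    _cyl, _heads, _spt, total_sectors = map(int, lines[1].split())
    parts = [Partition(*map(int, ln.split())) for ln in lines[2:]]
    return label, total_sectors, parts


# Output copied from the post above.
SAMPLE = """\
msdos
328083 255 63 5270667264
1 63 208844 222 0
2 208845 8595404 6 0
3 8595405 13838284 252 0
4 13838336 4294967294 251 0
"""

label, total_sectors, parts = parse_getptbl_msdos(SAMPLE)
for p in parts:
    print(f"partition {p.number}: type {p.type_id}, {p.size_bytes()} bytes")
```

The byte sizes this computes can be cross-checked against the sizes that `ls -lisa` reports for the `:1` to `:4` device nodes.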

To me it looks like the issue already started with the host configuration/order. There's a Dell Utility partition on the disk (Partition 1 - Type 222), and most likely with its creation, the partition table has been set to MBR.

A usual partition layout for an ESXi 6.5 host looks like this:

1: systemPartition -> Bootloader Partition (4 MB)

5: linuxNative -> /bootbank (250 MB)

6: linuxNative -> /altbootbank (250 MB)

7: vmkDiagnostic -> First Diagnostic Partition (110 MB)

8: linuxNative -> /store (286 MB)

9: vmkDiagnostic -> Second Diagnostic Partition (2.5 GB)

2: linuxNative -> /scratch (4 GB)

In case of an installation on a disk drive, there will be an additional partition 3, which is a VMFS datastore.

For SD/USB/BOSS card installation there will be no VMFS partition on the boot device, but on another supported device (e.g. a HDD).

Your setup has some partitions on the boot device (looks like a 16GB SD card), but for reasons unknown (at least to me), some partitions - in addition to the VMFS datastore - are located on the HDD, which may make it impossible to convert the disk from MBR to GPT without reformatting it (maybe VMware support knows a way to do this).
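A quick arithmetic check on the numbers posted earlier in the thread illustrates why the datastore cannot simply be grown in place while the table stays MBR. This is only a sketch under stated assumptions: 512-byte sectors (as reported by vmkfstools) and the commonly cited MBR limit of 2^32 addressable sectors. The sector values are copied from the partedUtil output; the variable names are made up for illustration.

```python
SECTOR_SIZE = 512          # assumption: from "Disk Block Size: 512/512/0"
MBR_MAX_SECTORS = 2 ** 32  # assumption: 32-bit LBA fields in an MBR entry

part4_end = 4294967294     # end sector of the VMFS partition (getptbl)
last_usable = 5270667263   # last usable sector (getUsableSectors)

# Largest size addressable through a 32-bit sector number.
mbr_limit_bytes = MBR_MAX_SECTORS * SECTOR_SIZE

# Sectors left between the end of partition 4 and the last 32-bit sector number.
headroom_sectors = (MBR_MAX_SECTORS - 1) - part4_end

# Sectors on the expanded virtual disk that lie beyond partition 4.
beyond_sectors = last_usable - part4_end
beyond_bytes = beyond_sectors * SECTOR_SIZE

print(f"MBR limit:            {mbr_limit_bytes} bytes ({mbr_limit_bytes / 2**40:.0f} TiB)")
print(f"Headroom under MBR:   {headroom_sectors} sector(s)")
print(f"Space past partition: {beyond_bytes} bytes ({beyond_bytes / 2**30:.2f} GiB)")
```

Under these assumptions, the VMFS partition already ends one sector short of the 32-bit boundary, and essentially all of the newly added capacity sits past it.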

This is the SD card's partition layout:

16,022,241,280 May 9 16:42 mpx.vmhba32:C0:T0:L0

4,161,536 May 9 16:42 mpx.vmhba32:C0:T0:L0:1

262,127,616 May 9 16:42 mpx.vmhba32:C0:T0:L0:5

262,127,616 May 9 16:42 mpx.vmhba32:C0:T0:L0:6

115,326,976 May 9 16:42 mpx.vmhba32:C0:T0:L0:7

299,876,352 May 9 16:42 mpx.vmhba32:C0:T0:L0:8

2,684,354,560 May 9 16:42 mpx.vmhba32:C0:T0:L0:9

This is the HDD's partition layout:

2,698,581,639,168 May 9 16:42 naa.6d094660497b3300225e0a8b0e490e49

106,896,384 May 9 16:42 naa.6d094660497b3300225e0a8b0e490e49:1 (Dell Utility Partition - Type:222)

4,293,918,720 May 9 16:42 naa.6d094660497b3300225e0a8b0e490e49:2

2,684,354,560 May 9 16:42 naa.6d094660497b3300225e0a8b0e490e49:3

2,191,938,027,008 May 9 16:42 naa.6d094660497b3300225e0a8b0e490e49:4

Interestingly, there are two 2.5 GB "vmkDiagnostic" partitions, one on the SD card, and one on the HDD.
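To compare these byte counts with the figures shown by iDRAC and the vSphere client, it helps to convert units. The sketch below uses byte values copied from the `ls` output; the dictionary keys are just descriptive labels. That the virtual disk works out to 2513.25 in binary units (GiB), the same number iDRAC labels "GB", is an inference from the arithmetic rather than something Dell documents in this thread.

```python
GIB = 2 ** 30
TIB = 2 ** 40

# Byte sizes copied from the "ls -lisa /vmfs/devices/disks" output.
sizes = {
    "RAID virtual disk": 2698581639168,
    "VMFS partition (:4)": 2191938027008,
    "SD card": 16022241280,
}

for name, n in sizes.items():
    print(f"{name}: {n / GIB:.2f} GiB, {n / TIB:.2f} TiB, {n / 1e9:.2f} GB (decimal)")
```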

Out of curiosity, I'd be interested in the output of the following commands.

partedUtil get "/vmfs/devices/disks/mpx.vmhba32:C0:T0:L0"

partedUtil getptbl "/vmfs/devices/disks/mpx.vmhba32:C0:T0:L0"

esxcli system coredump partition list

My recommendation at this point is to wait, and see what VMware support will say.

However, if this was my system, I'd most likely back up everything, delete/clean all the partition tables, and do a fresh installation. Then ensure that the HDD is GPT, and contains only the VMFS partition.

André

Thanks, Andre. I'll let you know what Dell says.

A couple of quick points I'll make right now and come back to you later with a little more detail (including the output of that command).

First, we purchased a new PowerEdge T440 for another site with a VMware installation. In that case, it has BOSS and there already was a default datastore in vSphere. Exactly where, I don't know. (Can you help me to find out?) I created a new datastore on the RAID and it's one partition labeled VMFS6. It's under 2TB, though, so it's maybe MBR? I'll check and let you know. (I don't recall that option in the setup...?)

Second, the server we're working on here is a replacement for an older one. We have talked about putting that back into service (it's on the network), connecting it to vCenter, and moving machines after hours. (There are 8 VMs.) It'll take a little while, but once we have the VMs on the other ESXi server, we'd be able to destroy this messed-up datastore and then move the machines back. However, it makes me anxious to think about moving 8 critical VMs, even though it's what we did when we purchased the newer server.

Thanks so much for your help and patience. I should tip you if you have the option?

> Thanks so much for your help and patience. I should tip you if you have the option?

You are welcome, I'm glad if I can help.

> First, we purchased a new PowerEdge T440 ...

With a BOSS card in the server, a Dell Utility partition may be configured on the BOSS card devices, but usually not on additional HDDs/RAID. So I assume that the VMFS partition that you've created is the only partition on the RAID VD, and it's likely GPT formatted. To find out, either run partedUtil getptbl /vmfs/devices/disks/<device-name> from the command line, or simply check the settings in the ESXi host's Web Client. Here's an example from my lab server:

> However, it makes me anxious to think about moving 8 critical VMs, ...

If moving the VMs is possible - powered on, or off, depending on the hosts' CPUs - that will indeed be an option to avoid downtime. I wouldn't worry too much about issues with the migration, because it's pretty much a binary action, i.e. it will work, or it will not. A VM on the source host will only be removed if the migration succeeds.

André

> With a BOSS card in the server, a Dell Utility partition may be configured on the BOSS card devices, but usually not on additional HDDs/RAID. So I assume that the VMFS partition that you've created is the only partition on the RAID VD, and it's likely GPT formatted. To find out, either run partedUtil getptbl /vmfs/devices/disks/<device-name> from the command line, or simply check the settings in the ESXi host's Web Client. Here's an example from my lab server:

Yeah, I see that the datastore2 storage space (which is connected to the PERC) is one partition, 1.09TB formatted GPT.

There is another datastore, datastore1. This was there when I logged into ESXi for the first time. It is listed as "Dell BOSS" and is 216GB in size (although it's only 1% full atm). Not sure what's on it.

It looks like datastore1 has been created on the (240GB) BOSS card SSD(s), although this is not recommended according to the Dell EMC Boot Optimized Server Storage-S1 User's Guide.

André

Wow. When I got the server, ESXi was installed. I didn't set it up. I set up datastore2 where we have the VMs.

Not really sure what to do with this information.

VMware punted and told us to move the VMs and raze the datastore. I'm willing to accept that and will work toward that goal.

I agree with VMware's recommendation, as this will be a one-time thing, and you'll have a properly configured system in the future.

> Not really sure what to do with this information.

If the support case with Dell is still open, it may be worth letting them know about these - in my opinion - incorrect pre-installations, so that they are aware of this, and can fix these issues.

André

It's been quite a while, but I thought I should update you (and the thread) with what has happened.

Fortunately, we had a spare ESXi server, an older one that we could press into service. I'll call it "old ESXi" to make this easier to follow. It saved us; I am glad we weren't one of the unlucky folks who can't do this.

We brought that online. Interestingly, that one _also_ had partition issues on the datastore, and they seemed to be preventing us from getting the full space allocated. Attempting to dismount and delete the datastore didn't work for various reasons, mostly to do with something keeping active hooks on the datastore. I struggled with it for a while, but VMware support suggested I just reinstall ESXi on that server. I did, bringing it up to the latest Dell OEM image. Once I did that, the datastore was clean with a single full partition. I then attached it to our vCenter install.

Over the next weekend, I moved all the VMs to that old ESXi server including vCenter. We don't pay for vMotion, so I couldn't do that. We brought each VM down and used the Migrate option. I used Clone to move vCenter. Once done, I powered up all VMs without trouble.

Next, we deleted the datastore on the "new ESXi server" and recreated it. That gave us a full datastore with VMFS6 at the full size of the RAID. HooRAID! I did notice that the ESXi build on the old ESXi server was newer than the one on the new ESXi server. So, I took a quick side trip to upgrade ESXi so the build numbers were at parity across the servers.

During the next weekend, we moved all the VMs and vCenter back to the new ESXi server.

So, it's all fixed.

I have to say that I was very impressed with VMware support. I opened my ticket on _one_ issue, that of not being able to grab the extra storage space from the RAID. They could have just said, "Well, you can't do that. Sorry. Reinstall ESXi. l8rrrrrrrrr." But they stuck with me through this entire process and kept the ticket open until _all_ tasks were complete. Maybe I got lucky with the person assigned to my ticket, but I never had a sense that someone was behind the scenes prodding him to close the ticket. I really appreciated that.