VMware Technology Network: ESXi Discussions


Intel X710 nic woes

We recently purchased 8 new HPE DL380 Gen10 servers. Each came with two 10GbE ports embedded on the motherboard and two 10GbE ports on a PCI card, all using the Intel X710 controller. Before buying these servers I had no idea how many problems these particular NICs have caused people. Here is a sampling of issues:

Install ESXi 6.5 on R730 with Intel X710

https://lonesysadmin.net/2018/02/28/intel-x710-nics-are-crap/

http://www.v-strange.de/index.php/15-vmware/239-vsphere-6-5-with-intel-x710-network-adapter

I have two DAC cables running from this server to my Juniper switch. In the ESXi console I select both of these NICs for the management network and give it an IP address, mask, gateway, and DNS server. When I reboot the host, pings to the management interface start working partway through the boot process. About 30 seconds after the host is fully up, the pings stop. If I remove one of the NICs from the management network, pings start again. In other words, management connectivity fails whenever two NICs are assigned to the management network. Thinking it might help, I upgraded the driver and firmware on the HPE Ethernet 10Gb 2-port 562SFP+ Adapter (which uses the Intel X710 controller); we are now at driver version 1.5.8 and firmware 10.2.5. The server is running HPE-customized ESXi 6.5 Update 1.

Do you think the problem I am experiencing (the management network fails with two NICs) is caused by this controller?

Are there other things I can check that might be causing this behavior?

---

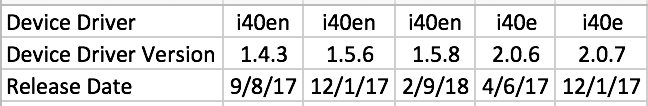

Driver version 1.5.8 is not the newest; install version 2.0.7.

That is most likely the problem.

VMware Compatibility Guide - I/O Device Search

---

There are two different drivers for this NIC: i40en and i40e. The latest i40en driver is version 1.5.8, and it was released later than the 2.0.7 i40e driver.

I am not even sure what the difference between i40en and i40e is. This KB suggests that using the native-mode driver is the way to go.

Does the "n" in i40en suggest it is the native mode driver?

---

VMware even plans to keep only native drivers, so to some extent you are right. But when you are trying to solve a problem, you should try every option, including the Linux-based drivers; in some cases that has helped.

Since you are dealing with the Intel X710, you may need this document.

---

I did try version 2.0.7 of the i40e driver, and it did not help. The case is now with HPE level 2 support to see if they can fix this.

---

Hi,

I've had issues with those NICs as well, but not the one you are describing.

Can you give us some information on how your vSwitch (or distributed switch, if you're using one) is configured in vSphere?

How is NIC teaming configured on the virtual switch? Make sure the NIC teaming settings are the same on the virtual switch AND on the management VMkernel port.

How are the corresponding ports configured on the Juniper switch? Are they standalone ports, or are they bundled somehow?

Thanks.

---

I found this a couple of weeks ago; it has some details and workarounds for the issue, if it's any help:

---

Figured out the issue. We had jumbo frames enabled on the two switch ports used by the management-network NICs. When you assign these NICs to the management network in the ESXi console, it creates a vSwitch with an MTU of 9000, which is correct. What it does not do is also set the VMkernel port to an MTU of 9000. The kernel port still had an MTU of 1500, and that was the issue. You cannot see this from the ESXi console; you have to log in via the web interface.
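One way to apply this check is to write down the MTU at each hop of the management path and compare them. The Python sketch below is hypothetical: it assumes you have already read the values by hand (for example from the web interface, or from `esxcli network ip interface list` for the VMkernel port), it does not query a host, and the class and function names are made up for illustration. A mismatch is something to investigate, not proof of the root cause. The suggested fix string uses `esxcli network ip interface set --mtu`, which sets a VMkernel interface's MTU.

```python
from dataclasses import dataclass


@dataclass
class MgmtPath:
    """MTU values along the management path, read by hand (nothing is queried here)."""
    switch_port_mtu: int  # MTU on the physical switch ports
    vswitch_mtu: int      # MTU of the standard vSwitch carrying management traffic
    vmk_name: str         # management VMkernel port, e.g. "vmk0"
    vmk_mtu: int          # MTU of that VMkernel port


def mtu_findings(p: MgmtPath) -> list[str]:
    """Return findings to investigate; an empty list means the values line up."""
    findings = []
    if p.vswitch_mtu > p.switch_port_mtu:
        findings.append(
            f"vSwitch MTU {p.vswitch_mtu} exceeds switch port MTU {p.switch_port_mtu}"
        )
    if p.vmk_mtu != p.vswitch_mtu:
        findings.append(
            f"{p.vmk_name} MTU {p.vmk_mtu} differs from vSwitch MTU {p.vswitch_mtu}; "
            f"to align it: esxcli network ip interface set "
            f"--mtu={p.vswitch_mtu} --interface-name={p.vmk_name}"
        )
    return findings


if __name__ == "__main__":
    # The situation described above: jumbo frames on switch ports and vSwitch, vmk0 at 1500.
    for finding in mtu_findings(MgmtPath(9000, 9000, "vmk0", 1500)):
        print(finding)
```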

---

I spoke too soon. There are two places where the NICs in an active/active setup need to be configured: the port group and the vSwitch. As soon as I make the two management-network NICs active/active and reboot the host, connectivity is lost. Pings to the management IP address start partway through the boot. About 30 seconds after the host has fully started, the pings stutter and then stop completely. Below is the setup for the port group, vSwitch, and kernel port. I have a 5-year-old IBM server running ESXi 6.0, plugged into the same switch, with two 10Gb Emulex NICs in active/active for the management network, and it is working fine.
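The two places mentioned here follow a simple inheritance rule: a port group uses the vSwitch's failover order unless it overrides it. The Python sketch below is a simplified, hypothetical model of that rule (the class and function names and the uplink names `vmnic0`/`vmnic1` are made up, and real teaming policy also covers load balancing, failure detection, and failback, which are omitted). It only shows how to check which order the management port group actually ends up using and whether it differs from the vSwitch.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FailoverOrder:
    active: list[str]
    standby: list[str] = field(default_factory=list)


@dataclass
class PortGroup:
    name: str
    # None means "inherit the vSwitch failover order"; otherwise the port group overrides it.
    override: Optional[FailoverOrder] = None


def effective_order(vswitch: FailoverOrder, pg: PortGroup) -> FailoverOrder:
    """Return the failover order the port group actually uses."""
    return pg.override if pg.override is not None else vswitch


def is_active_active(order: FailoverOrder, uplinks: set[str]) -> bool:
    """True if every listed uplink is in the active list."""
    return uplinks.issubset(order.active)


def overrides_differ(vswitch: FailoverOrder, pg: PortGroup) -> bool:
    """True if the port group overrides the vSwitch with a different order."""
    return pg.override is not None and pg.override != vswitch


if __name__ == "__main__":
    vswitch0 = FailoverOrder(active=["vmnic0", "vmnic1"])
    mgmt = PortGroup("Management Network", FailoverOrder(["vmnic0"], ["vmnic1"]))
    order = effective_order(vswitch0, mgmt)
    print(is_active_active(order, {"vmnic0", "vmnic1"}))  # False: the override wins
    print(overrides_differ(vswitch0, mgmt))  # True
```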

---

You've probably moved on from this issue, but these cards are problematic in general. I just tried putting them in some servers running ESXi 6.0 (latest) and had major issues getting iSCSI connections stable. The hosts stayed up, but the driver would crash and put the storage into an all-paths-down state. I read several posts from other users who have these cards saying the problem is only fixed in the 1.7 driver, which is technically only supported on ESXi 6.7 and above. I swapped the cards for Intel X520s, which use the ixgbe driver instead of i40en, and all my problems vanished. If you can, I'd recommend using a different brand or model of CNA.

---

I agree. I ended up dumping the X710s we had in our servers and went with QLogic cards.

We had the X520 and never had an issue with it. When the X710 came out, we said the good just got better! We were very wrong. The X710s were problem after problem and issue after issue on ESXi.

We have never had issues with the QLogic cards, no matter what model we buy.

---

I am the original poster. We dumped these cards as well and installed new 10Gb NICs with a different chipset (QLogic/Broadcom). The new cards are working well.

---

Hi guys,

I just stumbled across this, and the resolution is crazy 😉: don't use vmk0. Check out this post: Where did my host go.... - Virtual Ramblings

This is still valid as of April 2019, even with the latest firmware on the X710 and the latest driver on ESXi. Yay 😉

Create a new VMkernel adapter, assign the management role to it, and give it a dummy IP. Connect to that IP manually via the browser, remove the old vmk0 management interface, then change the dummy IP to the original IP. Boom. No more network chaos. I can't believe it, but that solved a huge headache we had for quite a while. All the workarounds with TSO and so on are unnecessary if you just don't use vmk0.
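The workaround can also be written as an ordered list of esxcli commands. The Python sketch below only prints that sequence and is hypothetical: it assumes a standard vSwitch named `vSwitch0`, a new port group `Management Network 2`, and placeholder documentation-range addresses, none of which come from the post. The post did this through the browser; running the commands over SSH instead is an assumption, and reconnecting to the dummy IP remains a manual step.

```python
from dataclasses import dataclass


@dataclass
class MgmtPlan:
    # All defaults are hypothetical placeholders; replace them with your own values.
    vswitch: str = "vSwitch0"
    new_portgroup: str = "Management Network 2"
    new_vmk: str = "vmk1"
    old_vmk: str = "vmk0"
    original_ip: str = "192.0.2.10"   # the host's current management IP
    dummy_ip: str = "192.0.2.250"     # temporary IP used during the switchover
    netmask: str = "255.255.255.0"


def workaround_steps(p: MgmtPlan) -> list[str]:
    """Return esxcli commands in the order the workaround describes (printing only)."""

    def set_ipv4(ip: str) -> str:
        return (f"esxcli network ip interface ipv4 set --interface-name={p.new_vmk} "
                f"--ipv4={ip} --netmask={p.netmask} --type=static")

    return [
        # Create a port group and a new VMkernel adapter on it.
        "esxcli network vswitch standard portgroup add "
        f'--portgroup-name="{p.new_portgroup}" --vswitch-name={p.vswitch}',
        f"esxcli network ip interface add --interface-name={p.new_vmk} "
        f'--portgroup-name="{p.new_portgroup}"',
        # Give the new adapter the dummy IP and the Management tag.
        set_ipv4(p.dummy_ip),
        f"esxcli network ip interface tag add --interface-name={p.new_vmk} --tagname=Management",
        # (Manual step: reconnect to the host at the dummy IP before continuing.)
        # Remove the old vmk0 management interface.
        f"esxcli network ip interface remove --interface-name={p.old_vmk}",
        # Move the original IP onto the new adapter.
        set_ipv4(p.original_ip),
    ]


if __name__ == "__main__":
    for cmd in workaround_steps(MgmtPlan()):
        print(cmd)
```

A session attached to the dummy IP will drop when the last command runs, so reconnect at the original IP afterwards.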

Best regards

Joerg

---

Sadly, I ran across this while installing ESXi 7.0 U3i. I've been pulling my hair out for the last week and a half, and Dell insists it's not the hardware. Well, it kinda is.

What's so strange to me is that the NICs worked with ESXi 6.7 U3. I created a new vmk1 and 🤞 so far so good! Thank you for providing this workaround, @joergriether.

---

You are very welcome. And indeed, that one gave me a huge headache 🙂

Thanks

Joerg