VMware Technology Network : Cloud & SDDC : ESXi : ESXi Discussions

Really poor network throughput between VM's.

I have been racking my brain over this for the better part of two weeks. I seem to be getting really poor throughput between my Windows 2008 R2 VMs. I have read several articles describing various tweaks to the VMXNET3 driver and the TCP stack (disabling autotuning, TSO, RSS, and so on). I normally run a customized 2008 R2 image, but I decided some testing was in order, so I grabbed an eval copy of 2008 R2 from Microsoft and deployed some machines, both physical and virtual. Let me run down my environment.

Physical Boxes:

Cisco UCS B200 x2 (in the same chassis)

Vanilla install of 2008 R2

2x 10Gb Cisco VIC interfaces

2x 300GB 15k SAS in RAID1

Throughput tests

Test file: 10GB junk file created with fsutil.

Between the 2 physical B200s

iperf test

Ran several tests with parallel streams. Consistent 10 Gbps summed throughput.

```
iperf -c xx.xx.xx.xx -P 10 -i 1 -p 5001 -w 9000.0K -f m -t 10
```

SMBv2 transfer

Virtual machines:

ESXi 5.5

2 vCPU

4GB RAM

Updated VMXNET3 driver

Same file size (10GB), created with fsutil

Between 2 VMs

iperf (same as above)

There is a huge difference in transfer rates. The VMXNET3 adapter has been "optimized" according to what VMware claims are the best settings for Windows 2008 R2.

On the physical side, both servers are deployed from the same service profile so the settings are identical.

Really beating my head against the wall on this one. Can anyone maybe provide a little insight or a new direction to go down?

---

As far as the iperf test goes, this doesn't look like a fair comparison to base conclusions on, all the more so since the difference isn't that huge.

2 vCPUs pushing over 8 Gbps, compared to a physical host with at least 8 times more cores, isn't bad, and iperf on Windows is quite inefficient. Spin up some Linux VMs and run iperf there: with iperf on Linux I get 2-4 times more throughput (on the same host, without physical limitations) with far less CPU overhead.

Use a TCP window size of around 32-64K with iperf. "-w 9000" looks like you're confusing the layer 2 Ethernet jumbo frame size with the TCP window size.
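To make the difference concrete: the TCP window is the amount of unacknowledged data a sender may have in flight, so one stream can move at most one window per round trip, and the Ethernet frame size never enters that formula. A minimal Python sketch of the arithmetic, assuming a hypothetical 0.2 ms round-trip time between the two boxes (`RTT_S` is an assumption; measure your own):

```python
# Hypothetical round-trip time between the two machines; measure your own
# (e.g. with ping) before trusting any of these numbers.
RTT_S = 0.0002  # 0.2 ms


def bdp_bytes(link_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product: bytes that must be in flight to keep the link busy."""
    return link_bps * rtt_s / 8


def max_tcp_throughput_bps(window_bytes: int, rtt_s: float) -> float:
    """Ceiling for one TCP stream: at most one window of data per round trip."""
    return window_bytes * 8 / rtt_s


if __name__ == "__main__":
    window = 64 * 1024  # a 64K window, in bytes
    print(f"BDP of 10 Gbit/s at 0.2 ms: {bdp_bytes(10e9, RTT_S):,.0f} bytes")
    per_stream = max_tcp_throughput_bps(window, RTT_S)
    print(f"64K window, one stream: {per_stream / 1e9:.2f} Gbit/s ceiling")
    print(f"64K window, ten streams (-P 10): {10 * per_stream / 1e9:.2f} Gbit/s ceiling")
    # The 9000-byte jumbo MTU appears nowhere in these formulas.
```

Under these assumed numbers a 64K window already leaves headroom for ten parallel streams; the MTU only changes how many packets it takes to carry that window.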

Also, was this test between two VMs on the same host or on different hosts?

Your physical host exceeds the bandwidth of a single physical NIC, so is there some LACP/EtherChannel in place there? If so, are you also using IP-hash load balancing/LACP on the vSwitch the VM is attached to? Otherwise a single vNIC will never be able to exceed the bandwidth of a single physical NIC.

As for your file copy test, the difference might not be down to network performance at all but rather to different storage subsystems.

---

You're correct on the window size. I was assuming iperf would take advantage of jumbo frames. My mistake.

The 2nd test was run on two VMs. I ran it with the VMs on the same host and on different hosts. The results were the same.

As you suggested, I removed the 9000 window size and ran it with the standard 64K window size. The results were consistent with the previous run.

```
bin/iperf.exe -c XX.XX.XX.XX -P 10 -i 1 -p 5001 -w 64.0K -f m -t 10
------------------------------------------------------------
Client connecting to XX.XX.XX.XX, TCP port 5001
TCP window size: 0.06 MByte
------------------------------------------------------------
[252] local 55.179.144.78 port 49448 connected with XX.XX.XX.XX port 5001
[228] local 55.179.144.78 port 49445 connected with XX.XX.XX.XX port 5001
[244] local 55.179.144.78 port 49447 connected with XX.XX.XX.XX port 5001
[236] local 55.179.144.78 port 49446 connected with XX.XX.XX.XX port 5001
[212] local 55.179.144.78 port 49443 connected with XX.XX.XX.XX port 5001
[204] local 55.179.144.78 port 49442 connected with XX.XX.XX.XX port 5001
[196] local 55.179.144.78 port 49441 connected with XX.XX.XX.XX port 5001
[220] local 55.179.144.78 port 49444 connected with XX.XX.XX.XX port 5001
[188] local 55.179.144.78 port 49440 connected with XX.XX.XX.XX port 5001
[180] local 55.179.144.78 port 49439 connected with XX.XX.XX.XX port 5001
[ ID] Interval Transfer Bandwidth
[204] 0.0- 1.0 sec 99.2 MBytes 832 Mbits/sec
[212] 0.0- 1.0 sec 98.9 MBytes 829 Mbits/sec
[180] 0.0- 1.0 sec 99.8 MBytes 837 Mbits/sec
[244] 0.0- 1.0 sec 98.7 MBytes 828 Mbits/sec
[220] 0.0- 1.0 sec 102 MBytes 856 Mbits/sec
[252] 0.0- 1.0 sec 101 MBytes 846 Mbits/sec
[236] 0.0- 1.0 sec 96.5 MBytes 809 Mbits/sec
[196] 0.0- 1.0 sec 98.5 MBytes 827 Mbits/sec
[228] 0.0- 1.0 sec 97.9 MBytes 821 Mbits/sec
[188] 0.0- 1.0 sec 98.5 MBytes 826 Mbits/sec
[SUM] 0.0- 1.0 sec 991 MBytes 8312 Mbits/sec
```
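As a sanity check, the ten per-stream lines above add up to the [SUM] line, and the figures are consistent with iperf reporting transfer in MBytes of 2^20 bytes but bandwidth in Mbits of 10^6 bits (99.2 × 2^20 × 8 / 10^6 ≈ 832). A small Python sketch that parses the per-stream lines; the regex assumes exactly the line layout shown here and may need adjusting for other iperf versions or output formats:

```python
import re

# Per-stream lines copied from the iperf output above (the [SUM] line is left out).
OUTPUT = """\
[204] 0.0- 1.0 sec 99.2 MBytes 832 Mbits/sec
[212] 0.0- 1.0 sec 98.9 MBytes 829 Mbits/sec
[180] 0.0- 1.0 sec 99.8 MBytes 837 Mbits/sec
[244] 0.0- 1.0 sec 98.7 MBytes 828 Mbits/sec
[220] 0.0- 1.0 sec 102 MBytes 856 Mbits/sec
[252] 0.0- 1.0 sec 101 MBytes 846 Mbits/sec
[236] 0.0- 1.0 sec 96.5 MBytes 809 Mbits/sec
[196] 0.0- 1.0 sec 98.5 MBytes 827 Mbits/sec
[228] 0.0- 1.0 sec 97.9 MBytes 821 Mbits/sec
[188] 0.0- 1.0 sec 98.5 MBytes 826 Mbits/sec
"""

# "[ID] start- end sec <transfer> MBytes <bandwidth> Mbits/sec"; "[SUM]" has no digits, so it never matches.
STREAM_RE = re.compile(
    r"^\[\s*(\d+)\]\s+[\d.]+-\s*[\d.]+\s+sec\s+([\d.]+)\s+MBytes\s+([\d.]+)\s+Mbits/sec$"
)


def parse_streams(text: str) -> dict[int, tuple[float, float]]:
    """Return {stream_id: (transfer_MBytes, bandwidth_Mbits_per_s)}."""
    streams = {}
    for line in text.splitlines():
        m = STREAM_RE.match(line.strip())
        if m:
            streams[int(m.group(1))] = (float(m.group(2)), float(m.group(3)))
    return streams


def mbytes_to_mbits(mbytes: float) -> float:
    """MBytes of 2**20 bytes to Mbits of 10**6 bits, the two units mixed in the output."""
    return mbytes * 2**20 * 8 / 1e6


if __name__ == "__main__":
    streams = parse_streams(OUTPUT)
    total_mbytes = sum(t for t, _ in streams.values())
    total_mbits = sum(b for _, b in streams.values())
    print(f"{len(streams)} streams: {total_mbytes:.1f} MBytes, {total_mbits:.0f} Mbits/sec")
    print(f"Transfer converted to Mbits: {mbytes_to_mbits(total_mbytes):.0f}")
    print(f"Roughly {total_mbits / 8:.0f} MB/s (10**6 bytes) of TCP payload")
```

The one-unit gap between the summed 8311 and the reported 8312 most likely comes from each per-stream figure being rounded before it is printed. Roughly 1 GB/s of payload is a useful yardstick for the SMB copy numbers.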

As for the LACP question: I'm still fairly wet behind the ears with Cisco UCS. The uplinks from my fabric interconnects are in an LACP port channel, 4 uplinks per fabric. The vNICs deployed to my hosts are not in a port channel within UCS. Therefore, I have the port group for these VMs configured on the vDS based on virtual port ID.

---

> The 2nd test was run on two VMs. I ran it with the VMs on the same host and on different hosts. The results were the same.

> [SUM] 0.0- 1.0 sec 991 MBytes 8312 Mbits/sec

To reiterate my previous statement, 8 Gbps doesn't seem bad at all. Getting the same speed between VMs on the same host most likely means that's the limit of what iperf on Windows can deliver in your case.

I'm willing to bet that you will achieve higher throughput with iperf on Linux, which it was built for. For reference, I'm able to get 25+ Gbps between two Linux VMs with vmxnet3 on the same host/port group. (Yes, 25 Gbps. Even though vmxnet3 presents itself as a 10GbE link, throughput is not artificially capped when there is no physical signaling limitation.)

> The vNICs deployed to my hosts are not in a port channel within UCS. Therefore, I have the port group for these VMs configured on the vDS based on virtual port ID.

I don't have experience with UCS networking, but seeing as your physical Windows host exceeds 10 Gbps, I can only guess that there is some sort of channeling in place there (try disabling one of the links and compare).

For an apples-to-apples comparison you need to channel the vSwitch uplinks as well, because with any policy other than route based on IP hash, every VM vNIC is mapped to a single active physical uplink.
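A toy model helps show why the teaming policy matters. This Python sketch uses made-up port numbers and addresses, and its XOR-modulo hash is a simplification for illustration, not necessarily VMware's exact algorithm: under port-ID routing every flow from a vNIC leaves through the same uplink, while under IP hash the uplink varies with the destination address.

```python
import ipaddress

# Simplified, hypothetical models of two vSwitch teaming policies; they mirror
# the behaviour described above, not VMware's exact hashing implementation.


def uplink_by_port_id(virtual_port_id: int, n_uplinks: int) -> int:
    """Route based on originating virtual port: the uplink depends only on the vNIC's port."""
    return virtual_port_id % n_uplinks


def uplink_by_ip_hash(src_ip: str, dst_ip: str, n_uplinks: int) -> int:
    """Route based on IP hash: the uplink depends on the source/destination IP pair."""
    src = int(ipaddress.ip_address(src_ip))
    dst = int(ipaddress.ip_address(dst_ip))
    return (src ^ dst) % n_uplinks


if __name__ == "__main__":
    vm_port, vm_ip, uplinks = 7, "10.0.0.10", 2  # hypothetical vNIC port, address, uplink count
    for peer in ("10.0.0.20", "10.0.0.21", "10.0.0.22", "10.0.0.23"):
        print(f"to {peer}: port ID -> uplink {uplink_by_port_id(vm_port, uplinks)}, "
              f"IP hash -> uplink {uplink_by_ip_hash(vm_ip, peer, uplinks)}")
```

Even under IP hash, all traffic between one pair of addresses lands on the same uplink, so ten parallel iperf streams between the same two VMs would still share one physical link; the spread only appears across multiple peers.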

---

I agree with you that 8 Gbps between the two VMs is fantastic. My problem lies with SMB file transfers between the two VMs.

The two physical machines achieve the transfer rates I'd expect. My two VMs, however, don't seem to get up there.

From my understanding, the vNICs attached to my hosts are dynamically pinned to the uplinks within the UCS fabrics. In fact, if you look at the dvUplinks, the NICs register as 40GbE NICs due to the port channel on the fabric. Same for the physical hosts, if that makes any sense. I'm still trying to make sense of it as well.

---

For your file copy speeds, I don't think you should investigate network performance any further right now; iperf is proof of that.

As mentioned before, what's more interesting is the storage system the file is written to or read from. Do your physical Windows boxes and VMs run on the same storage backend, backed by the same set of disks? You might want to conduct some IO performance testing with IOmeter and/or take a look at (r)esxtop IO statistics:
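IOmeter and esxtop are the right tools here, but if you just want a quick first number from inside each guest, a crude sequential-write test is easy to sketch. This is a swapped-in technique, not IOmeter: a minimal Python sketch that writes incompressible data and calls `os.fsync` before stopping the clock, so the guest OS page cache does not flatter the result. The target path and size are assumptions; point it at the local RAID1 on a physical box and at the SAN-backed disk in a VM.

```python
import os
import tempfile
import time


def sequential_write_mb_s(path: str, total_bytes: int, block_bytes: int = 1 << 20) -> float:
    """Write total_bytes to path in block_bytes chunks and return MB/s (10**6 bytes/s).

    os.fsync() forces the data out of the guest OS cache before the clock stops,
    but a RAID controller's write-back cache can still absorb a small test.
    """
    block = os.urandom(block_bytes)  # random, incompressible payload
    start = time.perf_counter()
    with open(path, "wb") as f:
        written = 0
        while written < total_bytes:
            n = min(block_bytes, total_bytes - written)
            f.write(block[:n])
            written += n
        f.flush()
        os.fsync(f.fileno())
    elapsed = time.perf_counter() - start
    return total_bytes / elapsed / 1e6


if __name__ == "__main__":
    # Hypothetical target and size: point this at the disk under test and raise
    # the size well past any controller cache (e.g. toward the 10GB test file).
    target = os.path.join(tempfile.gettempdir(), "seqwrite_test.bin")
    size = 256 * 2**20
    try:
        print(f"sequential write: {sequential_write_mb_s(target, size):.0f} MB/s")
    finally:
        if os.path.exists(target):
            os.remove(target)
```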

https://communities.vmware.com/thread/197844

---

Let me run a few tests. I ran some tests with ATTO and got favorable results.

My 2 physical boxes use local 15k SAS disks in RAID1.

The VMs are on SAN storage. I completely forgot about factoring in those disk speeds. I'll report back with my findings!

---

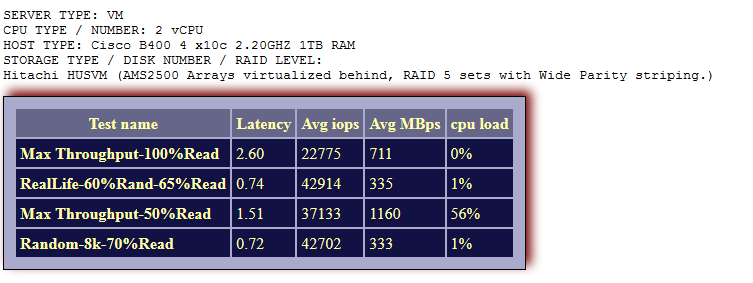

Well the results are in.

---

That looks quite good; now compare it with the results from your physical hosts. Also check a local file copy that doesn't traverse the network.

A local RAID1 of two 15k SAS disks achieving over 1 GB/s in transfer almost seems too good to be true to me, though. The write-back cache of the local disk controller is a possibility, but I'd expect it to be saturated quickly with a 10GB file. Have you checked the actual total time instead of relying on the speed indicator in the file copy window?
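Timing the whole copy sidesteps the copy dialog's speed indicator. A minimal Python sketch, assuming you run it against the same test file; the paths in the comment are hypothetical, and `shutil.copyfile` is a plain stand-in for Explorer's copy, which may buffer differently:

```python
import os
import shutil
import sys
import time

LINE_RATE_10GBE = 10e9 / 8  # bytes/s on one 10 Gbit/s link, before any protocol overhead


def timed_copy(src: str, dst: str) -> tuple[float, float]:
    """Copy src to dst; return (elapsed seconds, average bytes/s over the whole copy)."""
    size = os.path.getsize(src)
    start = time.perf_counter()
    shutil.copyfile(src, dst)
    elapsed = time.perf_counter() - start
    return elapsed, size / elapsed


if __name__ == "__main__" and len(sys.argv) == 3:
    # e.g. python timed_copy.py C:\test\junk.bin \\OTHER-VM\share\junk.bin (hypothetical paths)
    secs, rate = timed_copy(sys.argv[1], sys.argv[2])
    print(f"{secs:.1f} s, {rate / 1e6:.0f} MB/s, {rate / LINE_RATE_10GBE:.0%} of one 10GbE link")
```

For scale: a 10 × 2^30-byte file copied in 10 s averages about 1.07 GB/s, already most of the 1.25 GB/s raw line rate of a single 10GbE link, whereas 3 minutes works out to about 60 MB/s. Both durations are illustrative, not measurements from this thread.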

---

I have.

On the physical boxes, the 10GB file transfers via SMB in a few seconds.

On the VMs it takes a few minutes.