
New !! Open unofficial storage performance thread

Hello everybody,

the old thread seems to be sooooo looooong - therefore I decided (after a discussion with our moderator oreeh - thanks Oliver -) to start a new thread here.

Oliver will make a few links between the old and the new one and then he will close the old thread.

Thanks for joining in.

Reg

Christian


| Test name (System 2TB) | Latency | Avg iops | Avg MBps | cpu load |

|---|---|---|---|---|

| Max Throughput-100%Read | 16.26 | 3678 | 114 | 6% |

| RealLife-60%Rand-65%Read | 12.07 | 4704 | 36 | 28% |

| Max Throughput-50%Read | 34.52 | 1741 | 54 | 5% |

| Random-8k-70%Read | 11.92 | 4826 | 37 | 28% |

| Test name | Latency | Avg iops | Avg MBps | cpu load |

|---|---|---|---|---|

| Max Throughput-100%Read | 19.04 | 3136 | 98 | 1% |

| RealLife-60%Rand-65%Read | 16.88 | 3320 | 25 | 25% |

| Max Throughput-50%Read | 14.21 | 4254 | 132 | 1% |

| Random-8k-70%Read | 17.25 | 3200 | 25 | 1% |


CaptainFlannel,

Here is what I am getting. I am using a VNXe3100 with 10 300GB SAS disks in two RAID5 arrays. I am using iSCSI with a 512GB VMFS. I have not turned on jumbo frames yet, nor have I set up multipath I/O; I am just using a single 1Gb Ethernet port. The VM is a Windows 2003 R2 server with 4 GB of memory and 2 vCPUs.

I am troubled by the real world performance numbers, where your VNXe clearly outperforms mine. The random numbers seem low to me also. What I find interesting is that the Max throughput on my box is the only number that is much better than yours. I wonder why?

Best Regards.

| Test name | Latency | Avg iops | Avg MBps | cpu load |

|---|---|---|---|---|

| Max Throughput-100%Read | 18.04 | 3348 | 104 | 13% |

| RealLife-60%Rand-65%Read | 31.82 | 1681 | 13 | 0% |

| Max Throughput-50%Read | 18.78 | 3254 | 101 | 12% |

| Random-8k-70%Read | 30.30 | 1730 | 13 | 0% |
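When comparing tables like the one above, it helps to remember that the MBps column is just IOPS multiplied by the block size. The sketch below checks that relationship against the VNXe3100 numbers. The block sizes (32 KiB for the Max Throughput tests, 8 KiB for RealLife and Random-8k) are an assumption inferred from the reported figures, not something stated in the post.

```python
# Assumed block sizes in KiB; inferred from the reported numbers, not confirmed.
BLOCK_KIB = {
    "Max Throughput-100%Read": 32,
    "RealLife-60%Rand-65%Read": 8,
    "Max Throughput-50%Read": 32,
    "Random-8k-70%Read": 8,
}


def iops_to_mibps(iops, block_kib):
    """Convert an IOPS figure to MiB/s for a fixed block size given in KiB."""
    return iops * block_kib / 1024


# (test name, reported IOPS, reported MBps) copied from the table above.
reported = [
    ("Max Throughput-100%Read", 3348, 104),
    ("RealLife-60%Rand-65%Read", 1681, 13),
    ("Max Throughput-50%Read", 3254, 101),
    ("Random-8k-70%Read", 1730, 13),
]

for name, iops, mbps in reported:
    derived = iops_to_mibps(iops, BLOCK_KIB[name])
    print(f"{name:26s} reported {mbps:4d}  derived {derived:6.1f}")
```

If the derived and reported values agree, the two columns carry the same information, so differences between arrays are easier to compare on IOPS and latency alone.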


Interesting to compare. At the moment I am just looking at the tests performed on our D: drive. With that, our numbers are similar; however, with our 12 disks in a single RAID10 it looks like we are getting increased I/O in the 50% read tests. Is your RAID5 two different volumes, or a single one? I would have thought RAID10 compared to RAID5 would show much different numbers with a similar number of disks.

Actually in further looking at your numbers the Read IO seems very similar, but when writes are involved I do see the increased IO available in the RAID10.

It is interesting that your 100% read is a little faster. What kind of networking equipment are you using?

Message was edited by: captainflannel
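The observation that RAID10 pulls ahead once writes are involved matches the usual write-penalty rule of thumb: each front-end write costs about 2 back-end I/Os on RAID10, 4 on RAID5, and 6 on RAID6. Below is a minimal sketch of that estimate. The disk counts come from the two posts above; the per-disk figure of 175 IOPS is a hypothetical value for a 15k SAS spindle, and controller cache is ignored, so the absolute numbers are illustrative only.

```python
# Back-end I/Os generated per front-end write (commonly cited rule of thumb).
WRITE_PENALTY = {"RAID10": 2, "RAID5": 4, "RAID6": 6}


def frontend_iops(disks, iops_per_disk, read_fraction, raid_level):
    """Estimate host-visible random IOPS for a given read/write mix.

    Ignores controller cache, hot spares and sequential optimisations.
    """
    backend = disks * iops_per_disk
    write_fraction = 1.0 - read_fraction
    # Each read costs one back-end I/O; each write costs the RAID penalty.
    cost_per_io = read_fraction + write_fraction * WRITE_PENALTY[raid_level]
    return backend / cost_per_io


# Hypothetical per-spindle figure; not a measured value from this thread.
PER_DISK = 175

for level, disks in (("RAID10", 12), ("RAID5", 10)):
    for read_fraction in (1.0, 0.65, 0.5):
        est = frontend_iops(disks, PER_DISK, read_fraction, level)
        print(f"{level:6s} {disks:2d} disks  {read_fraction:.0%} read  ~{est:5.0f} IOPS")
```

In this model the two layouts are close at 100% read and diverge as the write share grows, which is the same pattern described in the post.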


| Test name Physical | Latency | Avg iops | Avg MBps | cpu load |

|---|---|---|---|---|

| Max Throughput-100%Read | 1.01 | 56453 | 1764 | 2% |

| RealLife-60%Rand-65%Read | 25.42 | 2221 | 17 | 1% |

| Max Throughput-50%Read | 13.22 | 4539 | 141 | 19% |

| Random-8k-70%Read | 17.65 | 3131 | 24 | 1% |


My company has spent a significant amount of time on iSCSI / VMWare benchmarks over the last few months.

Using a Dell R710 connected to an MD3220i with 500GB 7.2K drives in the first shelf and 600GB 15K drives in the second shelf.

We originally only had the 7.2K drives and configured them with RAID6 and RAID10 (equal number of disks).

Max Throughput-100%Read and Random-8k-70%Read tests were the same - approximately 128MB/s and 135MB/sec throughput respectively even with round robin configured.

RAID6 on these drives for RealLife-60%Rand-65%Read and Random-8k-70%Read throughput was about 8.7 and 8.8 MB/sec

RAID10 was 17 and 15 MB/sec.

RAID10 on the 15K drives was basically double the 7.2K at 31 and 33 MB/sec (we did briefly see 37 and 42 but are unable to repeat it).

The most interesting thing we discovered was that the iops need to be optimized for this array when using round robin.

The command is `esxcli nmp roundrobin setconfig --type "iops" --iops=3 --device (your LUN ID)`.

Once this command was run against our LUN, the Max Throughput-100%Read and Random-8k-70%Read tests hit the limit of the NICs: with 3 x 1Gbit NICs we get over 300 and 315 MB/sec.
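To see why roughly 300 to 315 MB/sec counts as hitting the limit of the NICs, here is a rough ceiling calculation for a number of 1Gbit links. The 0.9 efficiency factor is an assumed allowance for Ethernet, TCP/IP and iSCSI overhead, not a measured value, and the function name is only illustrative.

```python
def link_ceiling_mb_per_s(links, gbit_per_link=1.0, efficiency=0.9):
    """Upper bound on payload throughput in decimal MB/s across `links` NICs.

    `efficiency` is an assumed allowance for protocol overhead.
    """
    raw = links * gbit_per_link * 1000 / 8  # megabytes per second on the wire
    return raw * efficiency


for n in (1, 2, 3, 4):
    print(f"{n} x 1 Gbit: about {link_ceiling_mb_per_s(n):.1f} MB/s usable")
```

Under these assumptions three links top out somewhere between 300 and 375 MB/sec, so the tuned results above sit close to what the wire can carry.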


I've been using a beta tool for testing storage IO on our ESXi boxes and our storage array, and I'm getting some pretty good results that are a little more real-world oriented than the 4 tests run here. I've run mixed workloads of Exchange 2003/2007, SQL, OLTP, Oracle, and various other tests from VMs in tandem to emulate real-world heavy transactional loads. This simulates the impact of prolonged storage IO workloads instead of just single tests that try to achieve a "best result". Speak with your VMware rep about the Storage IO Analyzer beta, which has a very good set of workloads you can run from a VM to simulate multiple workloads. One caveat is that I've found that Windows VMs are just not efficient enough to really tax a real Tier 1 storage system. Running 1 workload from each attached host tends to work better, at least in my case, when trying to truly hammer our boxes. I've managed to garner 80k IOPS from several VMs running in parallel, with max throughput rates of around 1.3 GBps.


Interesting, when we switched our iops setting from the default 1,000 to 3 we definitely saw an increase in the max throughput tests. Significant MBps and IOPS increases.

| Test name | Latency | Avg iops | Avg MBps | cpu load |

|---|---|---|---|---|

| Max Throughput-100%Read | 11.02 | 5283 | 165 | 1% |

| RealLife-60%Rand-65%Read | 17.19 | 3241 | 25 | 25% |

| Max Throughput-50%Read | 11.37 | 5339 | 166 | 1% |

| Random-8k-70%Read | 17.57 | 3139 | 24 | 1% |


captainflannel,

I let the VNXe autoconfigure the pools and it created two RAID 5 (4+1) groups under the performance pool, one hot spare and one unused drive. From what I understand, the datastore uses all 10 of the drives. As far as a switch is concerned, I am using a Cisco SGE2000, which is a small business product that has 24 gigabit ports.

I tried using an NFS datastore, but the performance was very poor; the numbers were about 4 times lower on the real world test. I've been working with EMC Tech Support for almost two months, but have not really made any progress. Did you try NFS and if so, how were your numbers?

Best Regards


Hi,

I am currently on paternity leave until the 13/08/2011.

If you require assistance, please call our helpdesk on 1300 10 11 12.

Alternatively, email service@anittel.com.au

Regards,

James Ackerly


First off I just wanted to thank everyone for their contribution to this thread. It helped me immensely when planning my virtualization project and I really appreciate all the time people spend benching their storage. It really pushed me in the Equallogic direction, and based on my performance in the new setup, I'm very glad indeed.

So my setup consists of a PS4000XV-600, 2 stacked PowerConnect 6248s, and three R610 ESXi hosts (the Dell show, basically). My SAN is configured with jumbos end-to-end, flow control ON, STP and unicast control disabled on the switches. Each host has four active links to the SAN; sadly the PS4000 is limited to two active links, but I am less worried about that now after looking at the numbers.

Firstly, I benched the array by using an RDM from my B2D box using Dell's MPIO initiator:

SERVER TYPE: Dell NX3100

CPU TYPE / NUMBER: Intel 5620 x2 24GB RAM

HOST TYPE: Server 2008 64bit

| Access Specification Name | IOPS | MBps (binary) | Avg. Response Time (ms) |

|---|---|---|---|

| Max Throughput-100%Read | 6706 | 209 | 17 |

| RealLife-60%Rand-65%Read | 4298 | 33.5 | 22.5 |

| Max Throughput-50%Read | 7956 | 248 | 14 |

| Random-8k-70%Read | 4232 | 33 | 23.5 |

Then I got brave and configured one of my ESXi hosts with the Dell MEM plug-in for iSCSI, using the software VMware iSCSI initiator and threw a bare-bones Win2k8R2 Guest on there:

| Access Specification Name | IOPS | MBps | Avg.Response Time (ms) |

|---|---|---|---|

| Max Throughput-100%Read | 7163 | 223 | 8.3 |

| RealLife-60%Rand-65%Read | 4516 | 35 | 11.4 |

| Max Throughput-50%Read | 6901 | 215 | 8.4 |

| Random-8k-70%Read | 4415 | 34 | 11.9 |

So I was quite pleased with that, but then I noticed I only had two active links to the storage, but I've got 4 links on my host - so I upped the membersessions to 4 as outlined here: http://modelcar.hk/?p=2771

| Access Specification Name | IOPS | MBps (binary) | Avg. Response Time (ms) |

|---|---|---|---|

| Max Throughput-100%Read | 7195 | 224 | 8.3 |

| RealLife-60%Rand-65%Read | 4375 | 34 | 11.9 |

| Max Throughput-50%Read | 7713 | 241 | 7.6 |

| Random-8k-70%Read | 4217 | 32.9 | 12.3 |

Gave me a pretty good boost on my sequential, but lessened my random-ish workloads and increased my latency a little bit, not sure which I'll go with... But overall very pleased so far!
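To put numbers on that trade-off, the sketch below computes the percentage change in IOPS between the two ESXi guest runs above (two sessions versus four). The IOPS values are copied from the two tables; the dictionary and function names are only illustrative.

```python
# IOPS from the two ESXi guest tables above: default sessions vs 4 sessions.
two_sessions = {
    "Max Throughput-100%Read": 7163,
    "RealLife-60%Rand-65%Read": 4516,
    "Max Throughput-50%Read": 6901,
    "Random-8k-70%Read": 4415,
}
four_sessions = {
    "Max Throughput-100%Read": 7195,
    "RealLife-60%Rand-65%Read": 4375,
    "Max Throughput-50%Read": 7713,
    "Random-8k-70%Read": 4217,
}


def pct_change(before, after):
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


for name, before in two_sessions.items():
    after = four_sessions[name]
    print(f"{name:26s} {before:5d} -> {after:5d}  {pct_change(before, after):+6.2f}%")
```

The only large gain is on the 50% read sequential test; the random tests drop by a few percent, which may well be within run-to-run variation for a single pass.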


On vacation, back 25/7


EMC VNX5500, 200GB FAST Cache (4x100 EFD RAID1)

Pool of 25x300gb 15k disks

Cisco UCS blades

| Access Specification Name | IOPS | MBps (binary) | Avg. Response Time (ms) |

|---|---|---|---|

| Max Throughput-100%Read | 16068 | 502 | 1.71 |

| RealLife-60%Rand-65%Read | 3498 | 27 | 10.95 |

| Max Throughput-50%Read | 12697 | 198 | 0.885 |

| Random-8k-70%Read | 4145 | 32.38 | 8.635 |


qwerty22, here's an NFS datastore on a VNXe3300, is this comparable to what you saw on your VNXe3100?

SERVER TYPE: HP DL360 G5

CPU TYPE / NUMBER: Intel X5450 x2 32GB RAM

Host Type: Windows 2008 R2 64bit / 1vCPU / 4GB RAM / ESXi 4.1

STORAGE TYPE / DISK NUMBER / RAID LEVEL: VNXe3300 / 21x600GB SAS 15k / RAID 5

| Access Specification | IOPs | MB/s | Avg IO response time |

|---|---|---|---|

| Max Throughput 100% read | 3428 | 107 | 18 |

| RealLife-60% Rand/60% Read | 596 | 5 | 101 |

| Max Throughput 50% read | 3183 | 99 | 19 |

| Random-8k 70% Read | 562 | 4 | 107 |


iSCSI results

SERVER TYPE: HP DL360 G5

CPU TYPE / NUMBER: Intel X5450 x2 32GB RAM

Host Type: Windows 2008 R2 64bit / 1vCPU / 4GB RAM / ESXi 4.1

STORAGE TYPE / DISK NUMBER / RAID LEVEL: VNXe3300 / 21x600GB SAS 15k / RAID 5

| Access Specification | IOPs | MB/s | Avg IO response time |

|---|---|---|---|

| Max Throughput 100% read | 3502 | 109 | 17 |

| RealLife-60% Rand/60% Read | 3738 | 29 | 14 |

| Max Throughput 50% read | 5783 | 181 | 10 |

| Random-8k 70% Read | 3602 | 28 | 15 |
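One way to read the NFS and iSCSI RealLife rows side by side is through Little's law: average I/Os in flight equals IOPS multiplied by response time. A small sketch follows, assuming the response times are in milliseconds and that the load generator kept a roughly fixed number of outstanding I/Os in both runs (neither is stated in the posts).

```python
def outstanding_ios(iops, latency_ms):
    """Little's law: average I/Os in flight = throughput x response time."""
    return iops * latency_ms / 1000.0


# (protocol, IOPS, avg response time in ms) for the RealLife test on the VNXe3300.
results = [("NFS", 596, 101), ("iSCSI", 3738, 14)]

for proto, iops, ms in results:
    print(f"{proto:5s} about {outstanding_ios(iops, ms):.0f} I/Os in flight")
```

Both runs come out at a similar queue depth, which suggests the NFS result is limited by per-I/O latency rather than by a lighter offered load.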


Andy0809, Yes that is close to what I was seeing with NFS, very poor IOPs and high latency. I understand that EMC has found and corrected the NFS issue and a new software release has been posted to correct the problem. I am out of the office currently so I haven't had the opportunity to install and test it, but at least one other person has and has reported back numbers similar to iSCSI. Best Regards.


Performed the upgrade to the latest VNXe software release and ran our tests again to see if there was any improvement for iSCSI. I was not expecting to see much, but we do see a big improvement in 100% read performance over the previous tests.

| Test name | Latency | Avg iops | Avg MBps | cpu load |

|---|---|---|---|---|

| Max Throughput-100%Read | 8.84 | 6768 | 211 | 12% |

| RealLife-60%Rand-65%Read | 17.89 | 3116 | 24 | 27% |

| Max Throughput-50%Read | 10.90 | 5580 | 174 | 1% |

| Random-8k-70%Read | 17.47 | 3177 | 24 | 2% |


| Test name | Latency | Avg iops | Avg MBps | cpu load |

|---|---|---|---|---|

| Max Throughput-100%Read | 15.40 | 3885 | 121 | 22% |

| RealLife-60%Rand-65%Read | 14.73 | 3584 | 28 | 0% |

| Max Throughput-50%Read | 12.86 | 4741 | 148 | 22% |

| Random-8k-70%Read | 13.53 | 3811 | 29 | 0% |


| Test name | Latency | Avg iops | Avg MBps | cpu load |

|---|---|---|---|---|

| Max Throughput-100%Read | 15.02 | 4001 | 125 | 18% |

| RealLife-60%Rand-65%Read | 18.33 | 2132 | 16 | 53% |

| Max Throughput-50%Read | 10.71 | 5622 | 175 | 20% |

| Random-8k-70%Read | 11.76 | 2907 | 22 | 59% |

| Test name | Latency | Avg iops | Avg MBps | cpu load |

|---|---|---|---|---|

| Max Throughput-100%Read | 8.45 | 7119 | 222 | 22% |

| RealLife-60%Rand-65%Read | 15.68 | 2423 | 18 | 55% |

| Max Throughput-50%Read | 9.75 | 6000 | 187 | 25% |

| Random-8k-70%Read | 11.71 | 2918 | 22 | 61% |

| Test name | Latency | Avg iops | Avg MBps | cpu load |

|---|---|---|---|---|

| Max Throughput-100%Read | 8.58 | 7013 | 219 | 22% |

| RealLife-60%Rand-65%Read | 17.93 | 2200 | 17 | 53% |

| Max Throughput-50%Read | 10.07 | 5806 | 181 | 24% |

| Random-8k-70%Read | 11.67 | 2914 | 22 | 61% |


Have only tested with iSCSI, no NFS in our environment at the moment.


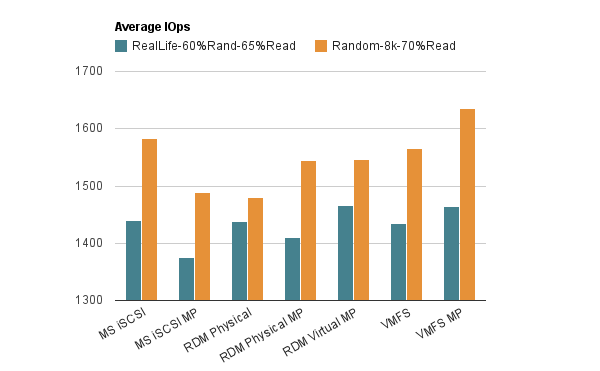

I made several tests with different configurations and here are the results. Check also my post about the tests on my blog.

-Henri