Virtual Routers


I have been doing some work on virtual routers, mostly with the Cisco CSR 1000v and Nexus 1000v. Both have interfaces to VMware. My main discussion has been on how Virtual Routers will interact with the cloud.

Summary – Racks that used to hold 6 machines now hold hundreds of virtual machines. Instability is exploding and there is a need for routed interfaces. Putting a Cloud Virtual Router in the chassis with the virtual machines is one solution to this issue.

In the past few years we have seen the words cloud and computing pop up more and more. Most recently we started seeing trends towards what could be called cloud virtual routers. I know, I know, the term “virtual router” means different things to different people and every router vendor out there has their own definition. I added “cloud” to hopefully distinguish between the two.

A Cloud Virtual Router, or CVR for short, is a router that exists within a hypervisor. The concept is not new: we have been running routing daemons on computing platforms for years, and since the introduction of hypervisors people have been running them there. It is also well known that Juniper Networks used a PC-based control plane in their very first router, the M40.

The need for a CVR has been growing due to the density of blade servers and the growing number of virtualized machines you can run on a single box. If each box has an uplink for each machine (or even VLANs, etc.) going to a TOR (Top of Rack) switch and back to your core, the amount of noise you will see in your network if you use an IGP (or STP, etc.) will be massive, and useless.

You could deploy TOR routers, but getting the necessary density would be very expensive. When I speak of routers I mean routers, not routing switches; to me there is a difference.

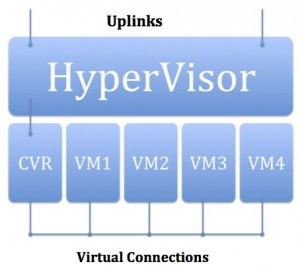

So a CVR becomes a real and reasonable option within the cloud space and it lays out like this (kept small for explanatory purposes):

Essentially, in a system with 4 virtual machines (which could be multi-tenant) you have a fifth which is in reality a router. Each VM links to the router via a virtual link, and the router links to the TOR switch using multiple links. This saves ports on the TOR and hides instability from your network.
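To put rough numbers on the port savings, here is a back-of-the-envelope sketch. The host count, VM count, and the two uplinks per CVR are made-up values for illustration, and it assumes the worst case of one TOR-facing link per VM when no CVR is present.

```python
def tor_facing_links(hosts: int, vms_per_host: int,
                     uplinks_per_cvr: int = 2, use_cvr: bool = False) -> int:
    """Count the links (and so the link-state changes) the TOR and core can see.

    Assumption: without a CVR every VM has its own link toward the TOR;
    with a CVR only the CVR's uplinks are visible outside the host.
    """
    if use_cvr:
        return hosts * uplinks_per_cvr
    return hosts * vms_per_host


# Hypothetical rack: 16 hosts with 25 VMs each.
hosts, vms_per_host = 16, 25
without_cvr = tor_facing_links(hosts, vms_per_host)
with_cvr = tor_facing_links(hosts, vms_per_host, use_cvr=True)
print(f"without CVR: {without_cvr} links, with CVR: {with_cvr} links")
```

The exact figures do not matter much. The point is that the number of things that can flap in front of the core scales with the number of hosts rather than the number of VMs.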

In this scenario, when a link flaps on a VM, it is handled by the CVR before being announced to the core. You can have all of the flexibility of backup machines, failover, link detection, etc. handled by the CVR. You could deploy 2 CVRs for redundancy.
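One way a CVR can hide a flapping VM link is by announcing a single aggregate upstream instead of per-VM routes. The following is a minimal sketch of that idea only, not how any particular router implements it. The `10.1.0.0/24` block, the per-VM /32 routes, and the function name are all hypothetical.

```python
import ipaddress

# Hypothetical addressing: every VM behind this CVR lives inside one block.
AGGREGATE = ipaddress.ip_network("10.1.0.0/24")


def upstream_announcements(vm_routes_up):
    """Return what the CVR would announce to the core.

    As long as at least one VM route inside the aggregate is up, the core
    sees only the aggregate, so individual VM flaps stay local to the CVR.
    """
    inside = [r for r in vm_routes_up if r.subnet_of(AGGREGATE)]
    return [AGGREGATE] if inside else []


vms = [ipaddress.ip_network(f"10.1.0.{i}/32") for i in (10, 11, 12, 13)]

before = upstream_announcements(vms)
after_flap = upstream_announcements(vms[1:])  # the link to 10.1.0.10 goes down

print("core sees a change:", before != after_flap)
```

With per-VM routes leaked into the IGP, the same flap would have produced an update that every router in the area had to process.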

So what do you use for the router? You have quite a few options. There are open source routing projects such as Quagga, and there are commercial solutions from Cisco such as the Nexus 1000v, along with many others. Feel free to comment on this story if you have options to add.

TL;DR – Racks that used to hold 6 machines now hold hundreds of virtual machines. Instability is exploding and there is a need for routed interfaces. Putting a Cloud Virtual Router in the chassis with the virtual machines is one solution to this issue.


This is an awesome blog, but I have a few doubts and I hope I will get a reply.

Let's say we have an HP C7000 chassis with 16 blades, all running ESXi. Can I keep all routed traffic from leaving the chassis by using the method explained above?

I think we need a CVR on each ESX host to limit the traffic within that ESX host. If a VM on one host (let's say blade 2) needs to communicate with a VM on another host in the same chassis (say blade 3), the traffic will still have to go out and come back, right?


Hi Cheride,

From reading up on the specs of the C7000, it appears to have a backplane that allows for interconnection between blades, including switches. I don't know a lot about HP hardware (nor any server hardware really), but from the description it would appear that you would be able to run some sort of virtual router on a minimum of two of the blades and then send all traffic through those blades, just like you would with the add-on blade switch.

If I focus for a minute on the CSR 1000v, we would have to look at the performance on the specific hardware you are using to figure out how many you would need to support the forwarding you expect. I have not seen any public performance numbers for the CSR 1000v, so you would either have to ask for those, have someone do testing for you or do the testing in house.

Here is the current spec sheet for the CSR 1000v http://www.cisco.com/en/US/prod/collateral/routers/ps12558/ps12559/data_sheet_c78-705395.html

It requires ESXi 5 to run.

Now, just thinking out loud here:

If you were to run one virtual router per blade, I believe you would have better connectivity inter-blade than you would intra-blade, hence you would be able to take all of the traffic from a single blade, send it through the virtual router on the same blade, and then through the backplane over to another virtual router or an integrated switch and out to the network.

If all of this is being done for one company, you have more flexibility. If you have multi-tenant requirements, then there is more to look at.

Hopefully I did not go too off topic here. If you have more questions, feel free to ask.


Hi,

Thanks for your reply. I have zero knowledge of the CSR 1000v, but will look into it soon.

The C7000 does have a backplane, and we can keep the traffic from going out if all my VMs (all VMs on the 16 ESX hosts) are on the same VLAN. The Cisco switches used in the chassis are Layer 2 switches, not Layer 3.

In our environment, each ESX host has multiple VLANs, hence most of the VMs have to go out to the Layer 3 router to get back to another VM (belonging to another VLAN) in the same chassis. I'm not sure having a router on each ESX host is a good idea.

I'm speaking without knowledge of the CSR 1000v, so I may be wrong. Anyway, thanks for the reply.


Hello Again,

There is not a lot of information about the CSR 1000v out there. I have asked Cisco for more information, but I think the product is too new for there to be a lot of docs. One thought is that running a virtual router on the chassis, with an interface in each of the VLANs that would otherwise have to go out for L3, would allow you to keep that traffic within the chassis, which is part of the concept.

You could also do this with some sort of free/open source router running on a VM that runs BGP/OSPF, or whatever your routing protocol of choice is, and just sends the traffic where it needs to go.
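The question of whether traffic between two VMs in the same chassis has to leave it can be sketched as a simple check. This is only a toy model under stated assumptions: the VLAN numbers and the function name are hypothetical, and it treats the chassis switches as pure Layer 2, as described in this thread.

```python
def leaves_chassis(src_vlan: int, dst_vlan: int, routed_in_chassis: set) -> bool:
    """True if traffic between two VMs in the same chassis must go to an external router.

    routed_in_chassis is the set of VLANs in which a router running inside
    the chassis (for example a virtual router on a blade) has an interface.
    """
    if src_vlan == dst_vlan:
        return False  # same VLAN: switched at Layer 2 inside the chassis
    # Inter-VLAN traffic stays local only if an in-chassis router sits in both VLANs.
    return not {src_vlan, dst_vlan} <= routed_in_chassis


# Layer 2-only chassis: VLAN 10 to VLAN 20 hairpins through the external router.
print(leaves_chassis(10, 20, set()))
# Virtual router in the chassis with interfaces in both VLANs: traffic stays inside.
print(leaves_chassis(10, 20, {10, 20}))
```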

Not being a systems person, I don't know how much traffic normally goes between hosts on the same chassis vs. external hosts. My focus is routing, top of rack switches/routers and such. The division of this equipment continues to blur, as does dev/ops.